dbt unit testing best practices

Learn how (and why) to implement dbt unit tests for your dbt project to test niche and complex business logic.

In speaking with data practitioners, many companies use either unit tests or data tests in their dbt projects as their differences (and limitations) are not well understood. (And that’s if they test their data at all!)

If you want a robust data quality strategy, you need to have several types of dbt tests in play as they have different purposes and compensate for each other’s weaknesses.

In this post, I’ll explore how using recently available dbt unit testing functionality can help prevent data quality issues resulting from complex SQL logic and look at which scenarios they’re most useful for. I’ll share a practical example of how you can implement dbt unit tests for complex business logic in dbt.

If you’re already familiar with dbt unit tests, scroll to the end where I rank the three common dbt tests–unit tests, data tests, and data diffs–based on their effectiveness in catching data quality issues based on implementation efforts, code coverage, scalability, and other factors. Together, the three tests ensure that every inch of your project’s data quality is covered, from critical business logic to the long tail of "unknown unknowns."

What is dbt unit testing

dbt unit tests are dbt tests that validate the model logic with predefined inputs and outputs.

Unlike a "classic" dbt data test, which runs an assertion against a model built with database data, unit tests require the developer to define "test cases" with input data and expected output. Thus, they allow more granular testing and isolate the validation of source data from the validation of the model code itself.

Why dbt data tests are usually not enough

One of the big leaps that dbt made relative to prior data orchestrators is having a native data testing framework – dbt tests. dbt’s generic tests allow developers to execute assertions such as that all values in a given column are unique, non-null, or conform to a particular set. While easy to define, dbt data tests have a few disadvantages at scale.

dbt tests compare one version of your data

Because dbt data tests run assertions on "live" data (which is prone to change over time and with code changes), it creates two challenges:

- Tests can be noisy because they react to changes in both code and data, and it can be hard to separate those two effects.

- Tests can be costly in terms of time and compute, especially for larger datasets with many tested columns and/or rows.

Because dbt unit tests use fixed input and output data, they run extremely fast, are highly specific, and not prone to noise due to changing data.

When dbt unit testing became available

Quite recently, in dbt core 1.8!

Some background: The initial proposal for unit testing appeared four years ago, and this year after an extensive design discussion with the community unit testing was finally implemented in dbt in May 2024!

dbt data tests vs. dbt unit tests

In a typical dbt data test, we define assertions (or expectations) about the built model and its columns, i.e., "column X should look like this," e.g., your primary key should never be null, so you would add a not_null test for it. In a data test, we can’t say, "Given input X, the output should be Y." In a data test, the input and expected output are not strictly defined, whereas in a unit test, they are.

dbt unit testing best practices

Unit tests can be beneficial in the following example scenarios:

- Parsing strings with regular expressions or string functions, e.g., extracting UTM parameters from a URL or validating emails

- Complex CASE WHEN statements

- Formulas involving arithmetic expressions and dates

- Anything with high business criticality, e.g., key metric or payout calculations.

In such scenarios, validating the model logic with a data test can be very hard but easily done with a unit test.

Another rule of thumb is to use dbt unit tests as "prospective" or "retrospective" tests:

- Retrospective: Add unit tests to the parts of code where bugs have been reported previously.

- Prospective: Add unit tests to cover edge cases that don’t yet exist in the current data but are important to handle properly—another technique impossible with data tests!

It’s important to mention that unlike dbt data tests, which can be run during both development and each dbt run in production, dbt unit tests are relevant only to the development process – when changes to the dbt code are introduced. Including dbt unit tests in your dbt CI is a great idea to test changes during the pull/merge request review stage.

However, running dbt unit tests in production is pointless and will only increase the execution time of your dbt run.

How to implement dbt unit tests in your dbt project

In the following example, we’re adding a unit test to the "dim_employees" model that calculates appropriate seniority and department for each employee based on their title:

As we can see, the logic for "seniority" and "department" mappings is quite complex and can easily lead to unexpected results if not properly tested. We can define a simple test (test_employee_seniority_and_department) to check for a few expected permutations:

The test can be invoked with the following command:

The above command will run all of the unit tests on dim_employees. Note that to execute a unit test successfully, its direct parents (“stg_employees” in our example) need to exist – but can be empty since they are mocked by the unit test.

Limitations of dbt unit tests

Scalability

Despite their many powers, dbt unit tests have one serious limitation: they require much more effort to write since the developer needs to define the input and output data for every test case. It’s best to use dbt unit tests tactically for the most complex, error-prone, and business-critical parts of your dbt code base.

Impact analysis

dbt unit tests are laser-focused by design, which is extremely effective for testing specific parts in the code. However, this approach still poses a challenge for impact analysis during deployment and pull request review: if a test fails, what downstream models, dashboards, data products, and stakeholders would be affected?

Data Diff: The scalable, automated testing approach that complements unit tests

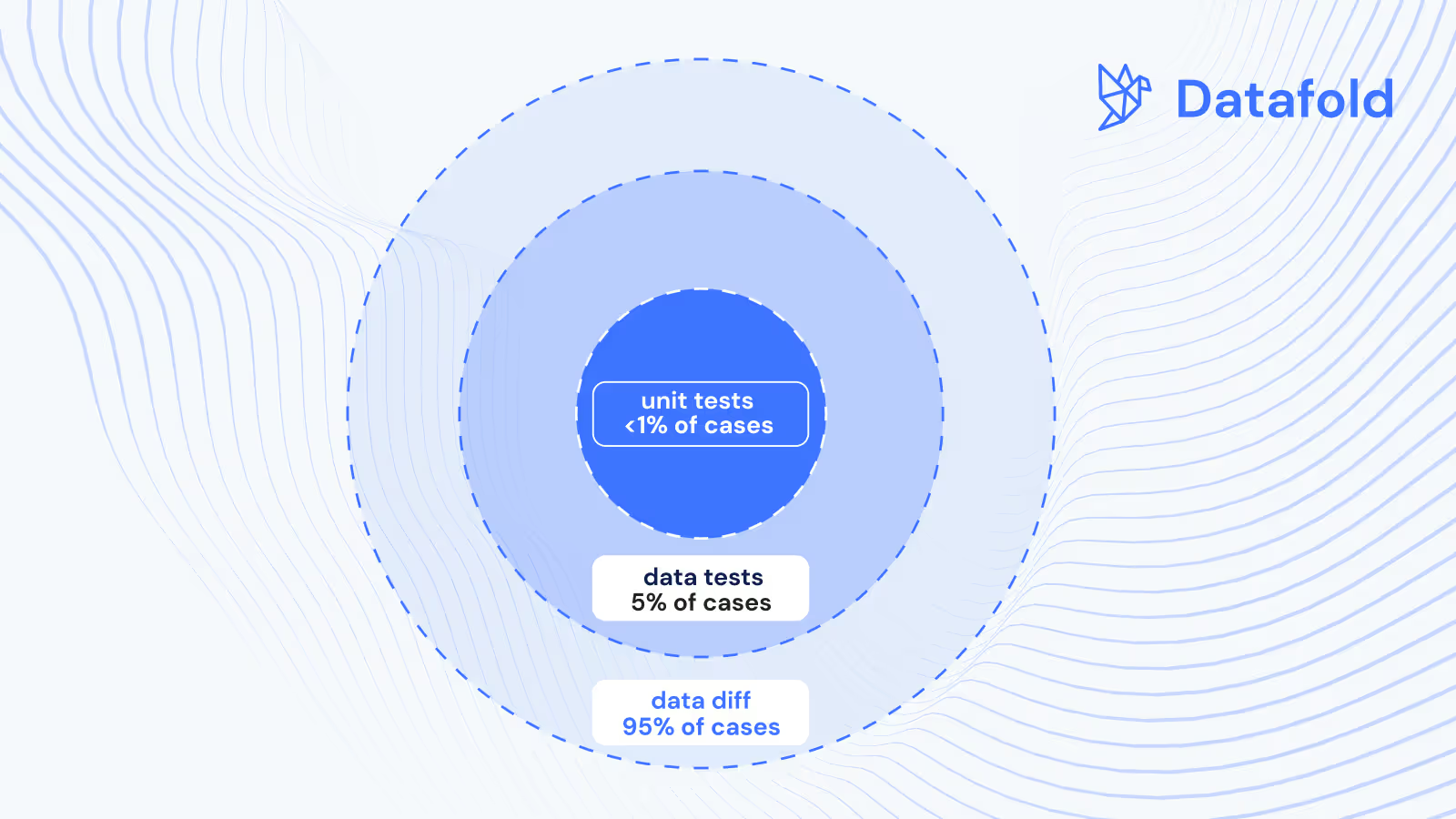

Given the high friction of creating dbt unit tests, it is reasonable to expect ~1% of columns to be covered with unit tests. Furthermore, the % of covered possible data errors can be 10-100x smaller given the complexity of both data and code. So, what do you do about the remaining 99.99% of potential bugs not covered with unit tests?

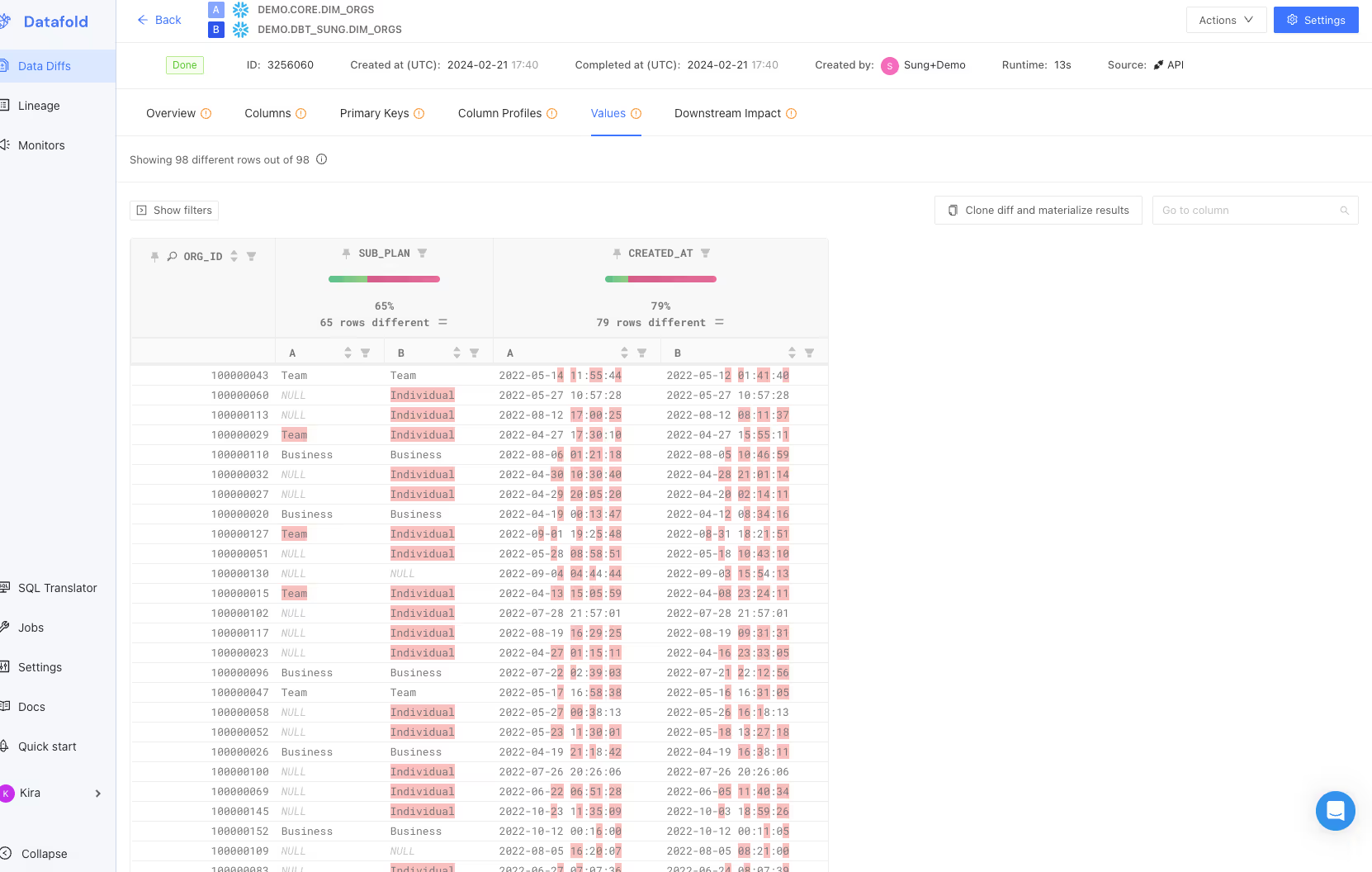

What is data diffing?

This is where data diffing – an automated strategy for dbt testing can be very helpful.

Data diff is a value-level and statistical comparison of datasets. For your dbt project, data diffing can be leveraged to compare the data in production (the ground truth) and development/staging, allowing the developer and the code reviewer to fully assess the nature and impact of changes to the business logic.

Implemented in CI and coupled with column-level lineage that extends to BI integrations, data diff safeguards against unforeseen changes, reducing the time needed to review and test the code and accelerating the overall team velocity.

How dbt data, unit tests, and data diff create bulletproof data quality

A strong data quality strategy will use several test types and includes unit tests for a small share of critical/complex business logic, data tests for standard quality checks, and data diff for the vast majority of "unknown unknowns" that make up data quality issues:

Putting it all together, an effective strategy utilizes all three types of dbt testing to maximize each method’s impact and ROI.

.avif)

%2520(2).avif)