Datafold product updates

September 9, 2025

Simplified cross-database diffing

We've streamlined the cross-database diffing experience by removing the algorithm selection step. Previously, you had to choose between "hashdiff" and "in-memory" algorithms when setting up cross-database comparisons.

Now, all cross-database diffs automatically use our optimized in-memory algorithm, which provides more consistent and performant results across all database combinations and data types. All existing cross-database diffs and monitors have been automatically upgraded to use the unified algorithm.
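The changelog doesn't describe the internals of Datafold's in-memory algorithm. As a rough illustration of what "in-memory" diffing means, the sketch below loads both sides into hash maps keyed by primary key and compares them row by row. The function name `in_memory_diff` and the row format are assumptions for illustration, not Datafold's implementation or API.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def in_memory_diff(source: List[Row], target: List[Row], key: str) -> Dict[str, Any]:
    """Generic keyed diff of two row sets held in memory (illustrative only)."""
    # Index each side by primary key so lookups are O(1).
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}

    # Keys present on only one side are missing or extra rows.
    only_in_source = sorted(src.keys() - tgt.keys())
    only_in_target = sorted(tgt.keys() - src.keys())

    # For keys present on both sides, record which columns disagree.
    changed: Dict[Any, List[str]] = {}
    for k in sorted(src.keys() & tgt.keys()):
        columns = src[k].keys() | tgt[k].keys()
        differing = sorted(c for c in columns if src[k].get(c) != tgt[k].get(c))
        if differing:
            changed[k] = differing

    return {"only_in_source": only_in_source, "only_in_target": only_in_target, "changed": changed}
```

Because the comparison happens after rows leave the databases, the same logic applies to any pair of sources; a production tool would also need to normalize types (timestamps, decimals, and so on) before comparing, which this sketch skips.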

The setup process is now more straightforward: simply select your source and target databases, choose your datasets, and run your diff. No action is required; your existing configurations will continue working as before.

May 28, 2025

May 2025 Changelog

Datafold Migration Agent now supports all major ETL tools

We’re making migrations easier and faster. The Datafold Migration Agent (DMA) now supports all major ETL tools, including Informatica, Talend, IBM DataStage, SSIS, Matillion, and Alteryx. This means you can translate even the most complex legacy ETL logic into modern code for Snowflake, Databricks, BigQuery, Redshift, dbt, and Coalesce. DMA goes beyond simple syntax conversion, delivering fully refactored code with guaranteed data parity.

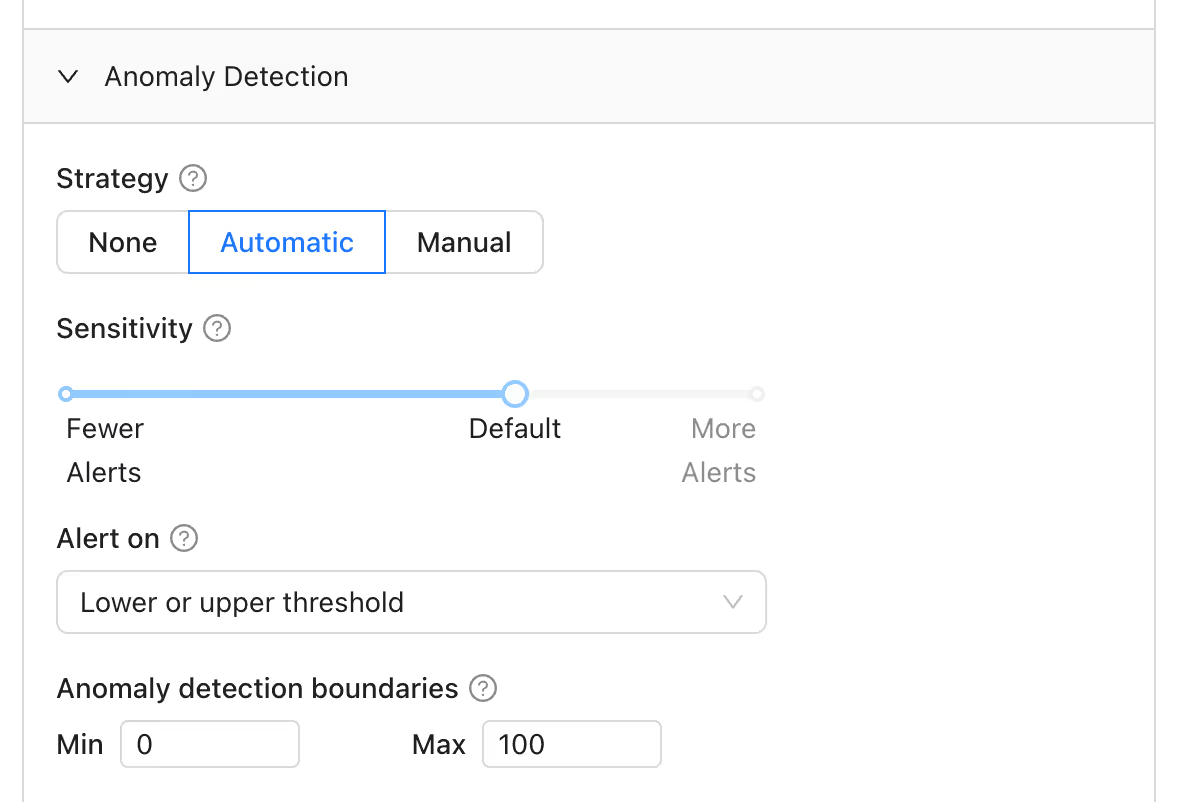

Bounded anomaly detection and flexible alert thresholds

Anomaly detection just got smarter and more focused with custom boundaries and alerts.

Custom Boundaries: This feature allows users to define minimum and maximum values for metrics, ensuring the anomaly detection algorithm only predicts values within these specified bounds. For instance, if a metric should always be positive, the system will never suggest an anomaly range that dips below zero.

Custom Alert Criteria: Users can tailor alert conditions by specifying whether to be notified when a metric exceeds the expected range, falls below it, or both. This customization helps reduce unnecessary alerts—for example, alerting only on sudden drops in website traffic while ignoring unexpected spikes.
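The two settings can be illustrated with a small sketch. It assumes the model has already produced a predicted range for the next value; `AlertConfig`, `bounded_range`, and `should_alert` are hypothetical names, not part of Datafold's API, and the anomaly detection model itself is not shown.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class AlertConfig:
    lower_bound: Optional[float] = None  # e.g. 0.0 for a metric that can never be negative
    upper_bound: Optional[float] = None
    alert_on: str = "both"  # "above", "below", or "both"


def bounded_range(low: float, high: float, cfg: AlertConfig) -> Tuple[float, float]:
    """Clip a predicted [low, high] range so it never leaves the user-defined bounds."""
    if cfg.lower_bound is not None:
        low, high = max(low, cfg.lower_bound), max(high, cfg.lower_bound)
    if cfg.upper_bound is not None:
        low, high = min(low, cfg.upper_bound), min(high, cfg.upper_bound)
    return low, high


def should_alert(value: float, predicted_low: float, predicted_high: float, cfg: AlertConfig) -> bool:
    """Alert only in the direction(s) the user asked for."""
    if cfg.alert_on not in ("above", "below", "both"):
        raise ValueError(f"unknown alert_on value: {cfg.alert_on!r}")
    low, high = bounded_range(predicted_low, predicted_high, cfg)
    if value > high:
        return cfg.alert_on in ("above", "both")
    if value < low:
        return cfg.alert_on in ("below", "both")
    return False
```

With `AlertConfig(lower_bound=0.0, alert_on="below")`, a traffic drop below the predicted range raises an alert, while a spike above it is ignored.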

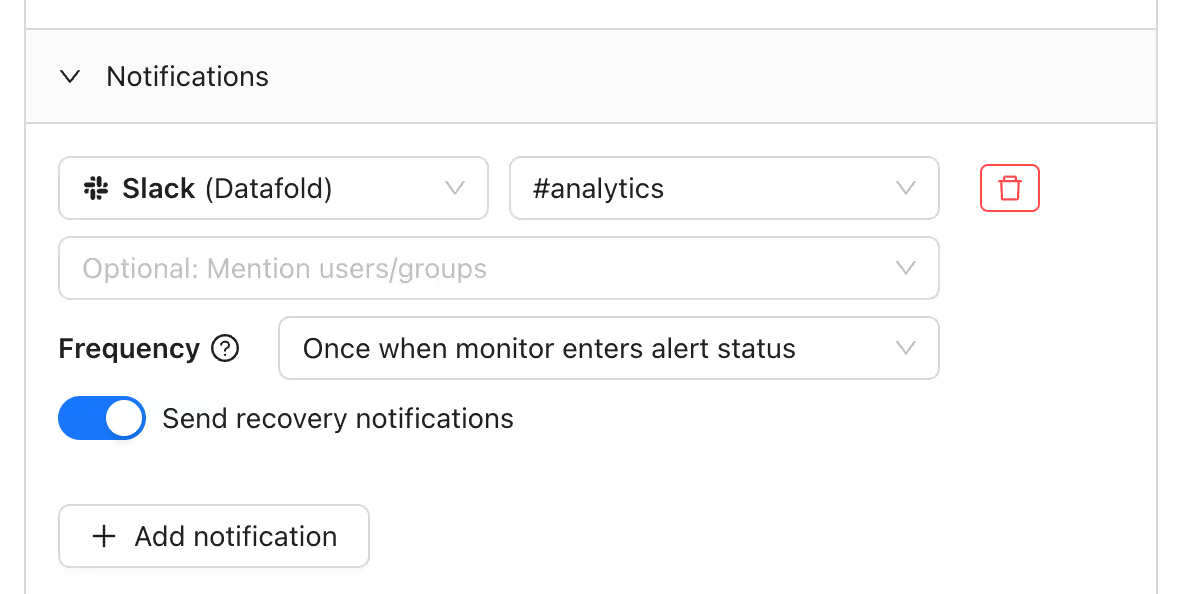

Enhanced monitor controls and freshness checks

We’re introducing new ways to stay on top of your data while cutting down on noise. Freshness checks now support monitoring timestamp columns, making it easier to catch stale data and keep your dashboards accurate.

You can also choose whether to receive recovery notifications when a monitor returns to a healthy state. This optional setting helps reduce unnecessary Slack pings and email notifications, so you can focus on the issues that truly need your attention.

These updates are all about delivering clearer signals and reducing clutter so you can work more efficiently and confidently.

Happy diffing!

April 25, 2025

April 2025 Changelog

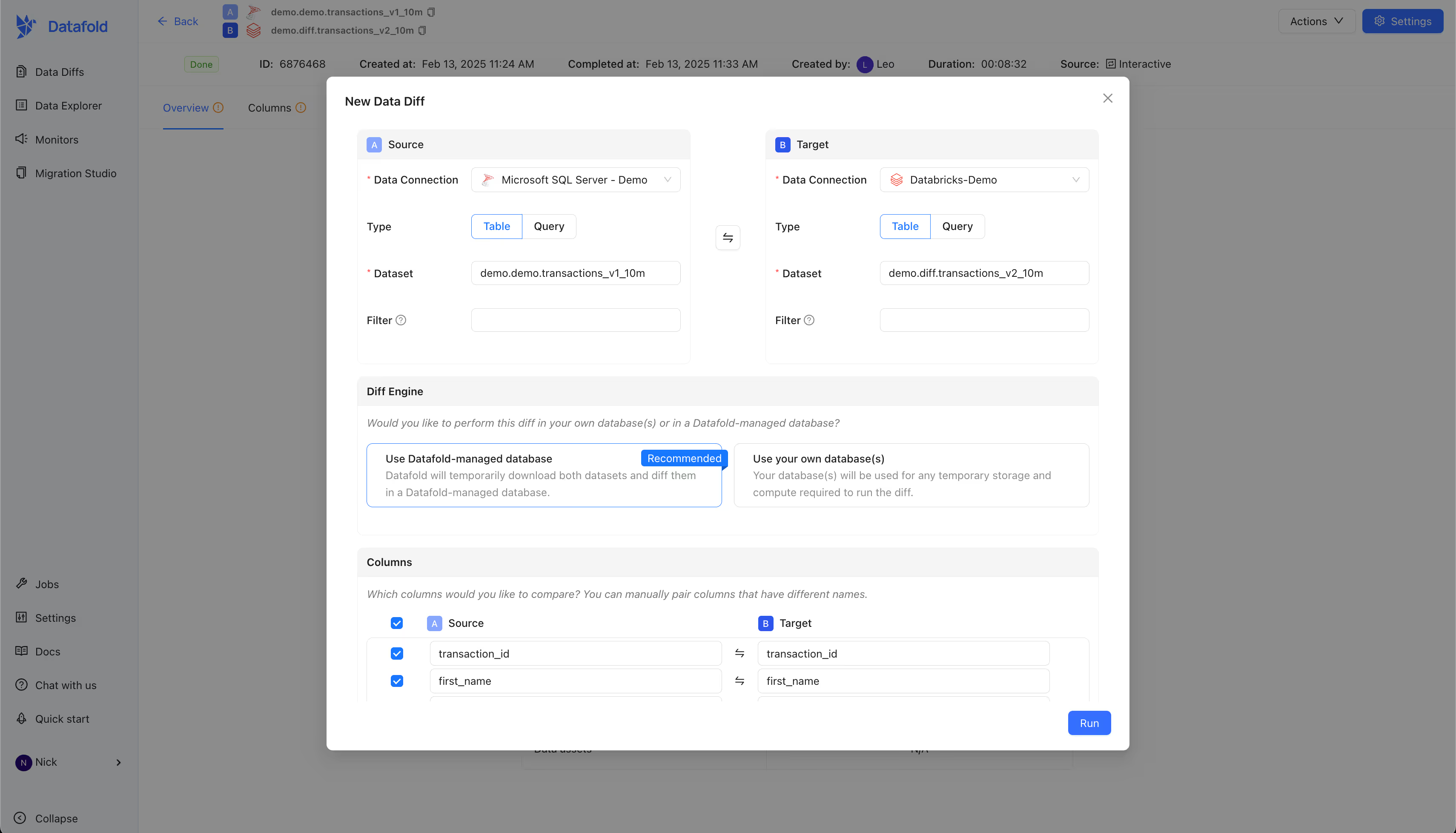

A sleeker, faster way to Data Diff is here!

Whether you’re sanity-checking staging vs. production or reconciling source and target tables during a migration, spinning up a diff in Datafold is now easier than ever.

How it works

- Side-by-side datasets: Configure exactly what you’re diffing at a glance.

- Unified column configuration: Select, map & rename columns in one streamlined view.

- Single-page layout: All settings on one screen means zero tab-hopping.

- Built-in guidance: Inline tips help you make the right call, fast.

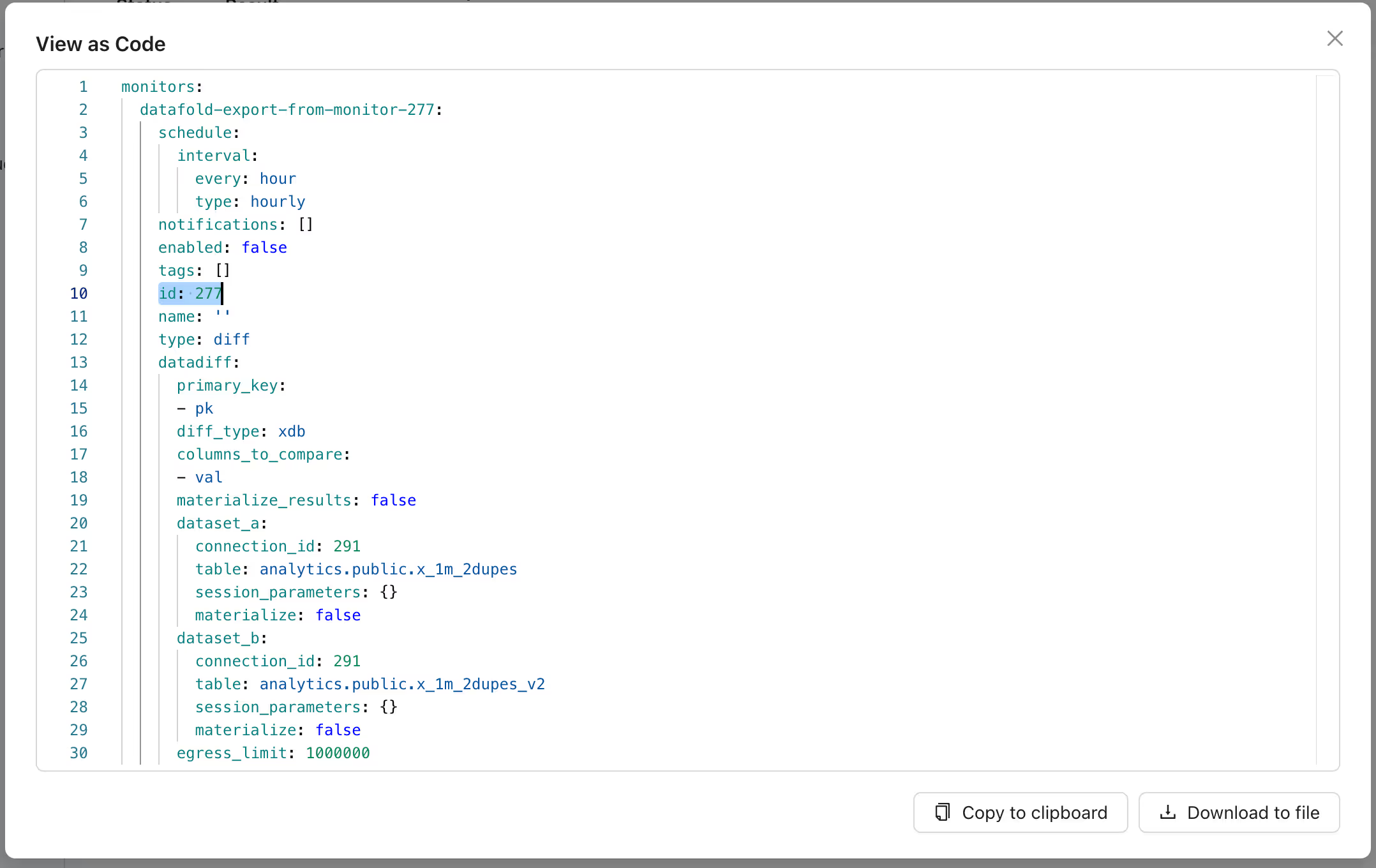

Export Monitors to YAML

You can now export monitors created in the UI directly to YAML—making it easier to adopt a code-based workflow without rebuilding your monitors from scratch. This bridges the gap for teams that start with the UI but want the flexibility of managing monitors in version control. Simply click “View as Code” on any monitor to generate the exact YAML configuration, ready to use in Git.

How it works

- Export to YAML: Instantly generate YAML for any UI-created monitor

- Version control your monitors: Store YAML files in Git, review changes, and manage updates alongside dbt models

- Deploy with the CLI: Apply YAML-based monitors using the Datafold CLI

- Automate with CI/CD: Integrate monitor deployment into your DevOps pipeline

Check out the docs for full instructions and example YAML files.

Happy diffing!

March 27, 2025

March 2025 Changelog

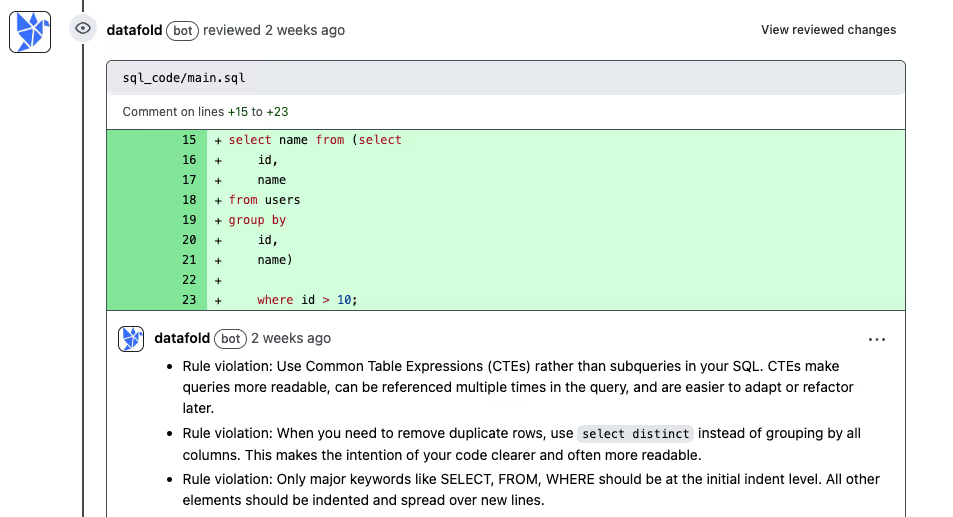

Introducing Automated Code Review - Custom Rules (beta)

We're excited to announce the beta release of Automated Code Review with Custom Rules for select customers. This expands on our AI-Powered Code Review capabilities, enabling teams to automatically enforce their organization-specific SQL standards across repositories, reducing manual review time and ensuring consistent code quality.

Initial feedback from early access customers has been positive and we're gradually expanding access to continue to refine the experience.

How it works

Automated Code Review analyzes each pull request against your team’s custom SQL standards and delivers targeted, inline suggestions when violations are detected.

Here’s what it can do:

- Uses your organization's custom SQL coding rules and best practices

- Automatically scans new PRs for violations

- Posts actionable, targeted suggestions directly in the PR when violations are found

- Supports scoping rules to specific repositories, file types, or directories

Getting started

If you're interested in participating in the beta, please just get in touch with your Datafold rep. We’ll help you implement your ruleset and enable Automated Code Review for your organization. Please note that only GitHub and GitLab repositories are supported at this time.

February 24, 2025

February 2025 Changelog

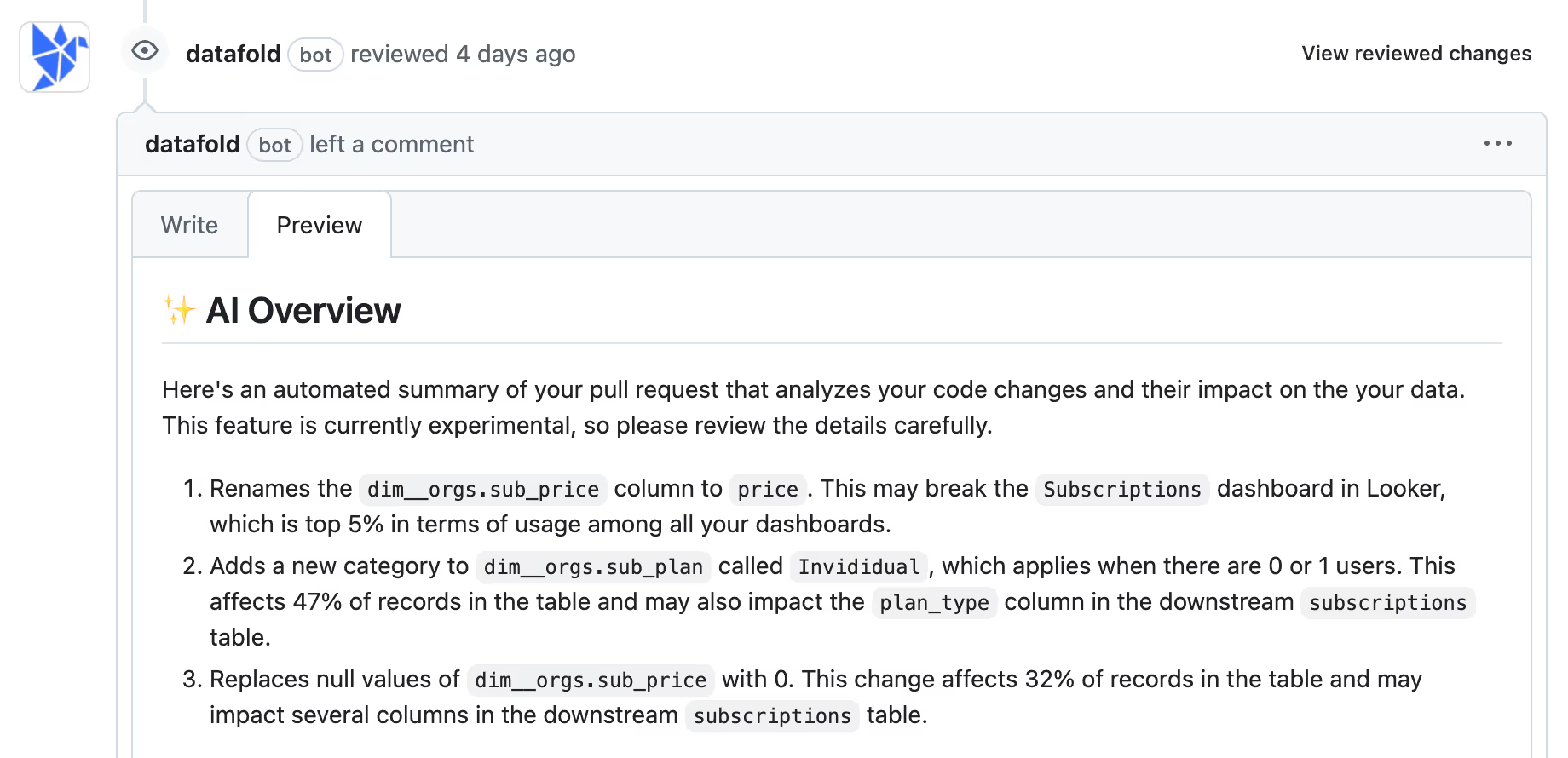

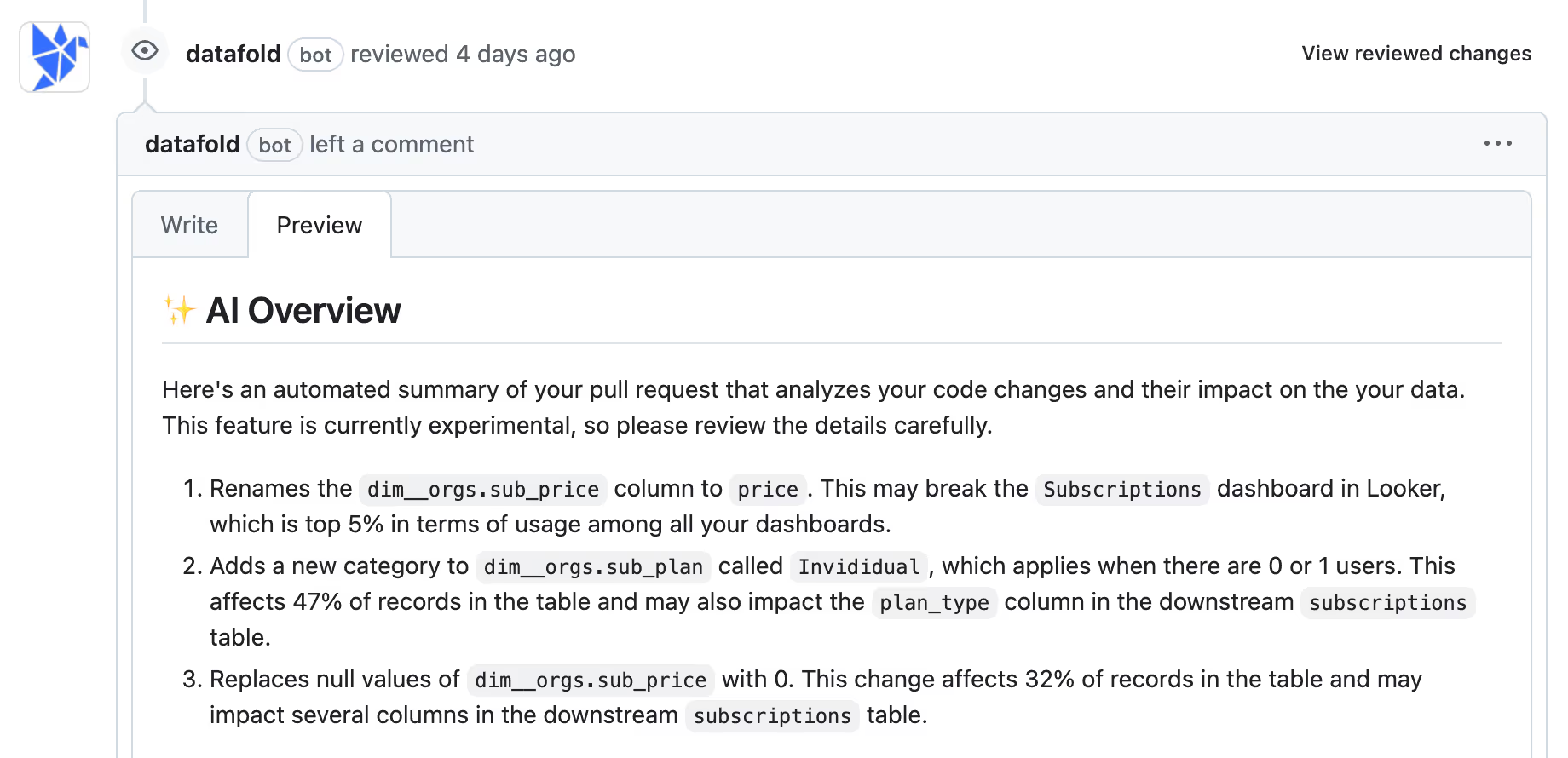

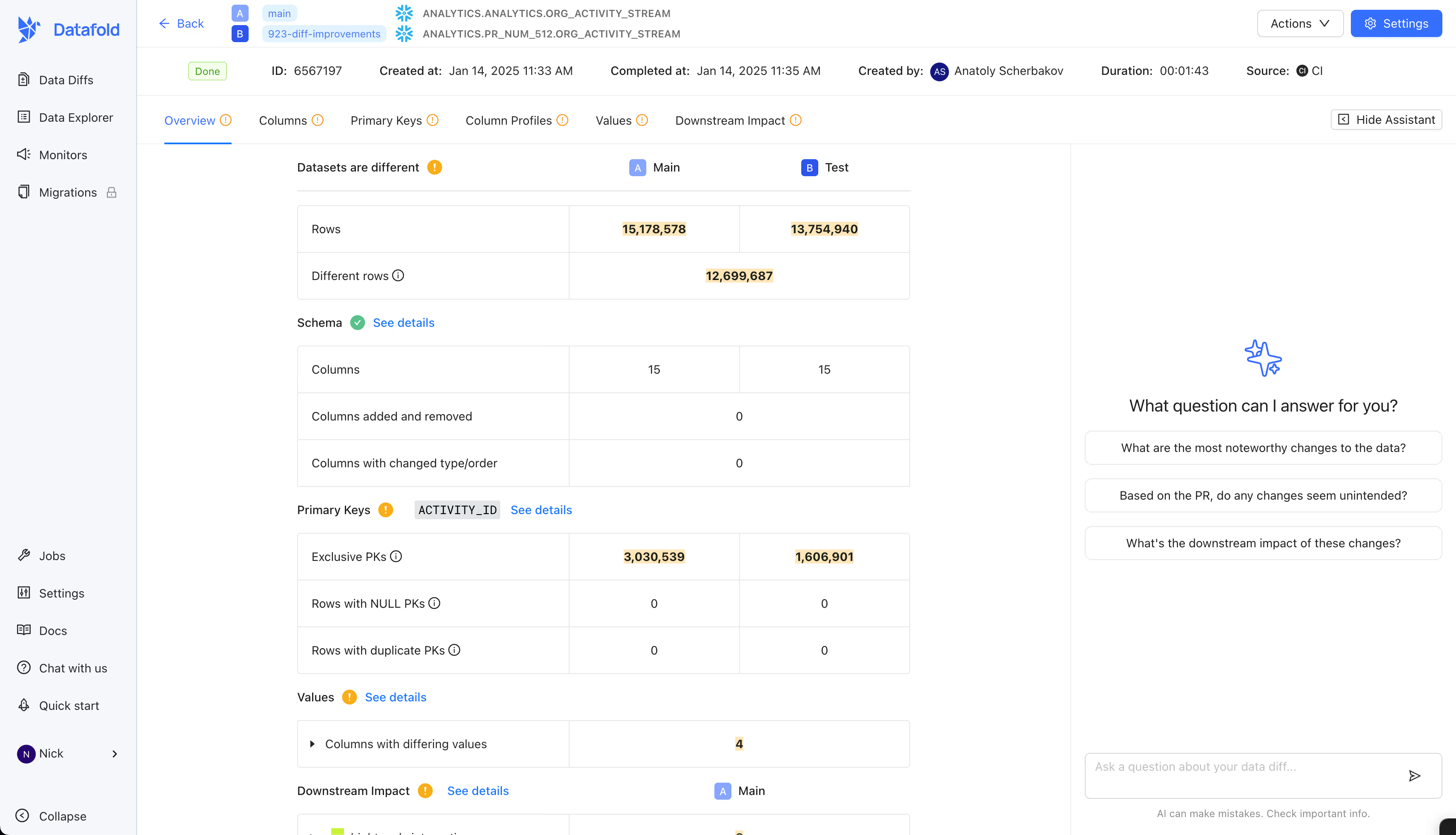

✨ Generally Available: AI analysis for code reviews

Code review in data engineering can be something of a Pandora’s box. Often, it’s more time-consuming and tedious than actually writing the code, especially if your tests are failing and you’re not quite sure why. With AI analysis for code review, now available to all customers, we’re setting out to crush unnecessary toil in this critical workflow by combining the power of AI with the insights of data diffing. Here’s what you can do:

- Get up to speed quickly with automated PR summaries: Every PR now comes with an AI-powered overview that highlights what changed, why, and the downstream impacts. Focus your time on what matters most with automated analysis of the most significant changes.

- Connect the dots with RCA for data diffs: Save hours of manual investigation when data changes unexpectedly. Hover over any change in your data diff to instantly see the exact code changes responsible, automatically connecting data changes to their source.

- Investigate changes with context-aware chat: Dig deeper into complex changes by asking questions in plain English. Get detailed answers drawing from your codebase, lineage, and data changes to quickly understand what's happening in your PR or data diff.

Please note AI-Powered Code Reviews are only available to US-based multi-tenant deployments today. For teams on single tenant deployments, please reach out to our team (hello@datafold.com) to learn more about getting started.

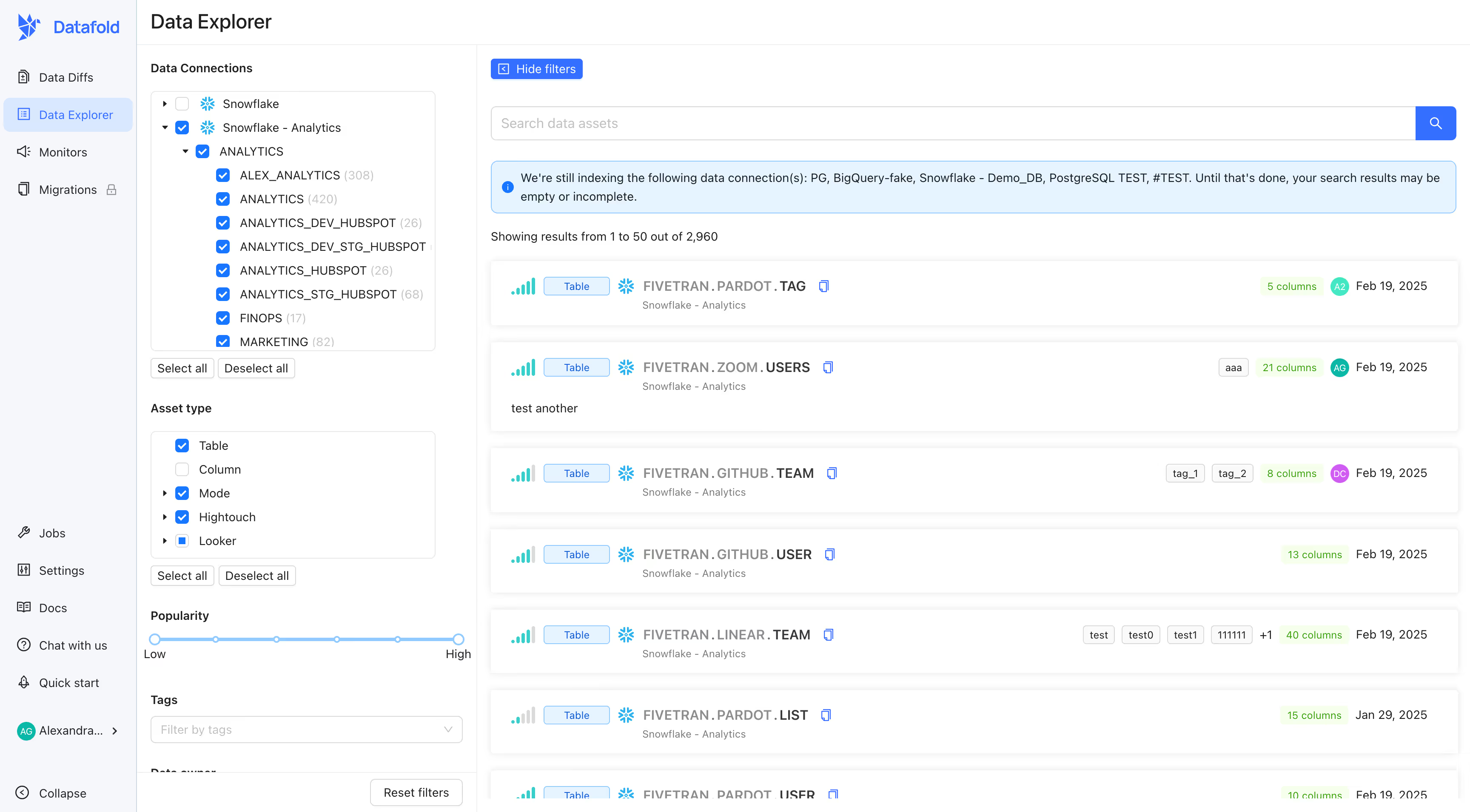

🍿 Coming Soon: Popularity

Data assets in your warehouse have different levels of impact on your business. Our new popularity feature helps you identify your most valuable tables by measuring how frequently they're used. Whether you're assessing the impact of code changes or exploring data relationships, popularity metrics make it easy to focus on what matters most.

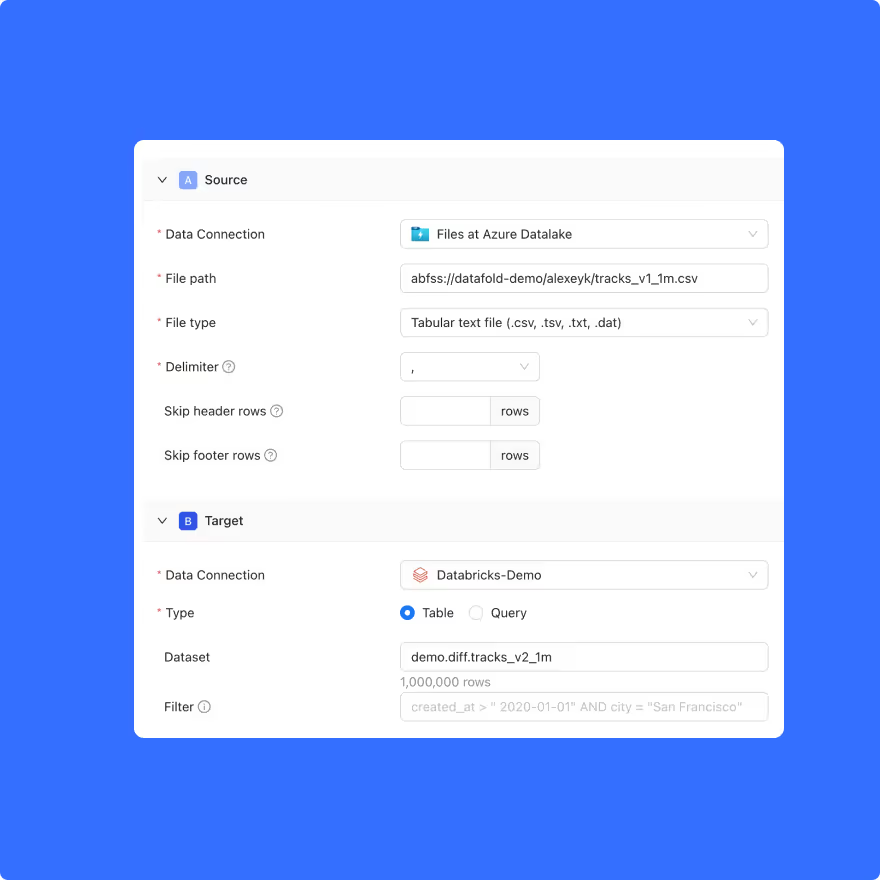

📁 File diffing support for AWS and GCP

In addition to diffing data in tables, views, and SQL queries, Datafold now allows you to diff data in files hosted in cloud storage, including Azure, AWS, and GCP. For example, you can diff between a CSV file stored in S3 and a table in Snowflake, or between a CSV file and an Excel file.

January 27, 2025

Introducing AI-Powered Tools to Supercharge Your PR Reviews

When you use Datafold in CI, we already provide deep insights into every pull request—how your data will change if merged, what downstream impacts it might have on BI dashboards, and more. But for larger PRs, it’s not always easy to pinpoint what’s most important or detect unintentional changes. And even when you spot unexpected data issues, tracing them back to the specific code changes can be a real challenge.

That’s why we’re thrilled to introduce AI-powered tools designed to make your PR reviews faster, clearer, and more actionable. Here’s what they can do:

- Surface the most critical insights about each PR, so you know what requires attention at a glance.

- Ask and answer questions in a convenient chat interface to dig deeper into changes or impacts.

These tools leverage a wealth of context—PR code changes, the source code of the modified files, resulting data diffs, and column-level lineage—to help you understand the full picture of your changes and their potential impact.

We’re just getting started, but we’re excited about helping you ship better code with fewer bugs and less effort. If you’d like to be among the first to try these features, please reach out to support@datafold.com—we’d love to hear from you!

January 22, 2025

Introducing NoSQL diffing

Datafold announces support for file diffing and a MongoDB integration to introduce NoSQL diffing capabilities.

File diffing

Datafold now supports the ability to diff files (e.g. CSV, Excel, Parquet, etc.) in a similar way to how you diff tables.

In addition to diffing data in tables, views, and SQL queries, Datafold allows you to diff data in files hosted in cloud storage. For example, you can diff between an Excel file and a Snowflake table, or between a CSV file and an Excel file.

Today, Datafold supports Azure Blob Storage and Azure Data Lake Storage (ADLS) as cloud storage options.

To learn more about file diffing, please check out the documentation.

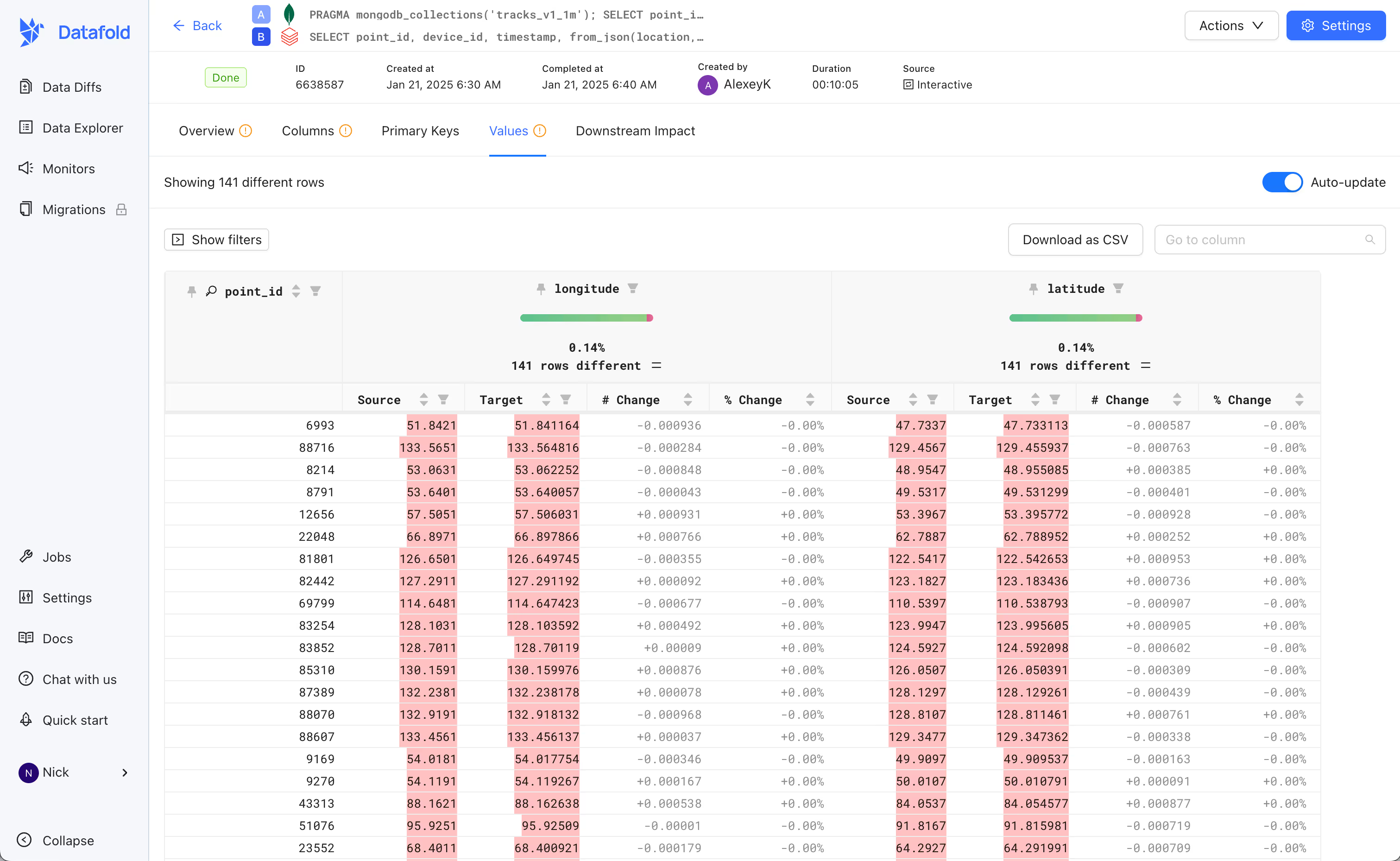

MongoDB integration

Datafold now supports a MongoDB integration, so you can diff MongoDB objects (Documents, JSON) within or across MongoDB, enabling your data to be tested and compared wherever (and however) it’s stored.

The MongoDB integration works similarly to normal data diffs in Datafold. However, because the schema can vary widely across documents in a collection, Datafold outputs a table whose columns are the union of all fields it observes across all documents. Datafold still identifies all the values that differ across your diffed documents, but presents them in a flat, unnested table format.
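To make the union-of-fields idea concrete, here is a sketch that flattens documents into rows. The dotted-path naming for nested fields (e.g. `address.city`) and keeping arrays as single values are assumptions; the changelog only says the output is a flat, unnested table.

```python
from typing import Any, Dict, List, Tuple


def flatten(doc: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Turn nested sub-documents into dotted column names, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat: Dict[str, Any] = {}
    for key, value in doc.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value  # scalars and arrays are kept as-is in this sketch
    return flat


def to_flat_table(docs: List[Dict[str, Any]]) -> Tuple[List[str], List[List[Any]]]:
    """Columns are the union of all flattened fields; missing fields become None."""
    rows = [flatten(d) for d in docs]
    columns = sorted(set().union(*(r.keys() for r in rows)))
    return columns, [[r.get(c) for c in columns] for r in rows]
```

A document that lacks a field simply gets `None` in that column, which is what lets documents with different shapes be compared cell by cell.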

December 18, 2024

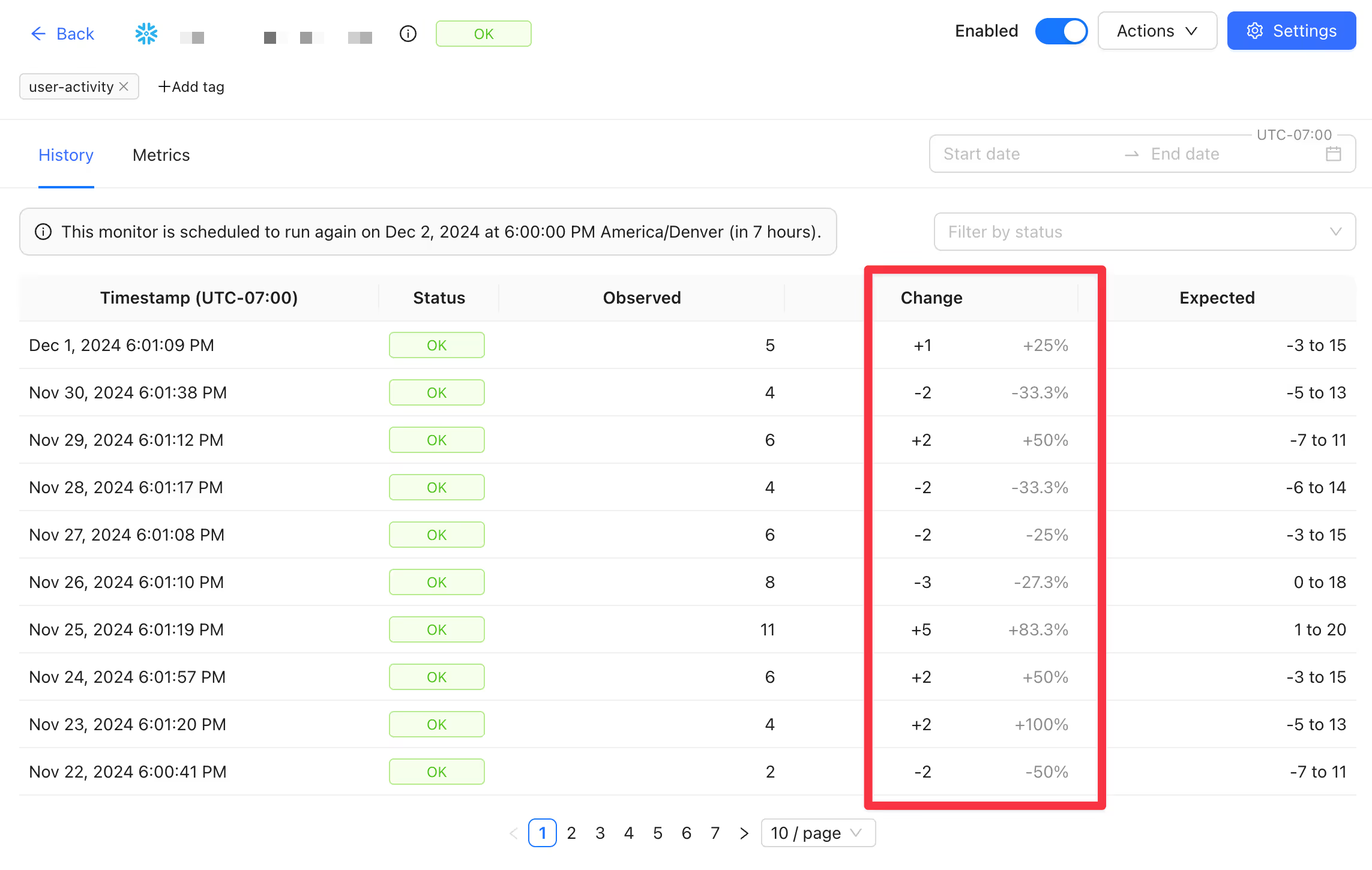

👁 Monitors enhancements

Here are some of the enhancements we’ve shipped to Monitors in the past month:

- More granular filtering: We've introduced more granular filtering so you can easily find specific subtypes of metric monitors (e.g. freshness, row count, custom, etc.).

- Absolute and relative change for observed metric values: For metric monitors, we now display the change in observed value(s) from one run to the next, both in absolute and relative (%) terms.

- [Coming soon] Standard Data Tests: Datafold will soon support out-of-the-box Data Test monitors that require no SQL. For example, you’ll be able to quickly check for data that fails assumptions around uniqueness, nullness, accepted values, and/or referential integrity, all without writing any code.
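Each of the four standard checks boils down to a simple predicate over rows. The sketch below runs them on in-memory rows rather than in a warehouse; the function names, and the choice to let nulls pass the accepted-values and referential-integrity checks (leaving them to the not-null check), are assumptions, not a description of how Datafold will implement these tests.

```python
from collections import Counter
from typing import Any, Collection, Dict, List

Row = Dict[str, Any]


def failing_unique(rows: List[Row], column: str) -> List[Row]:
    """Rows whose value in `column` appears more than once."""
    counts = Counter(row.get(column) for row in rows)
    return [row for row in rows if counts[row.get(column)] > 1]


def failing_not_null(rows: List[Row], column: str) -> List[Row]:
    """Rows where `column` is null (None)."""
    return [row for row in rows if row.get(column) is None]


def failing_accepted_values(rows: List[Row], column: str, accepted: Collection[Any]) -> List[Row]:
    """Rows whose non-null value is outside the accepted set."""
    return [row for row in rows if row.get(column) is not None and row.get(column) not in accepted]


def failing_relationship(rows: List[Row], column: str, parent_rows: List[Row], parent_column: str) -> List[Row]:
    """Rows whose non-null foreign key has no matching key in the parent table."""
    parent_keys = {p.get(parent_column) for p in parent_rows}
    return [row for row in rows if row.get(column) is not None and row.get(column) not in parent_keys]
```

Returning the failing rows themselves (rather than a pass/fail flag) is what makes it possible to show or export the offending records.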

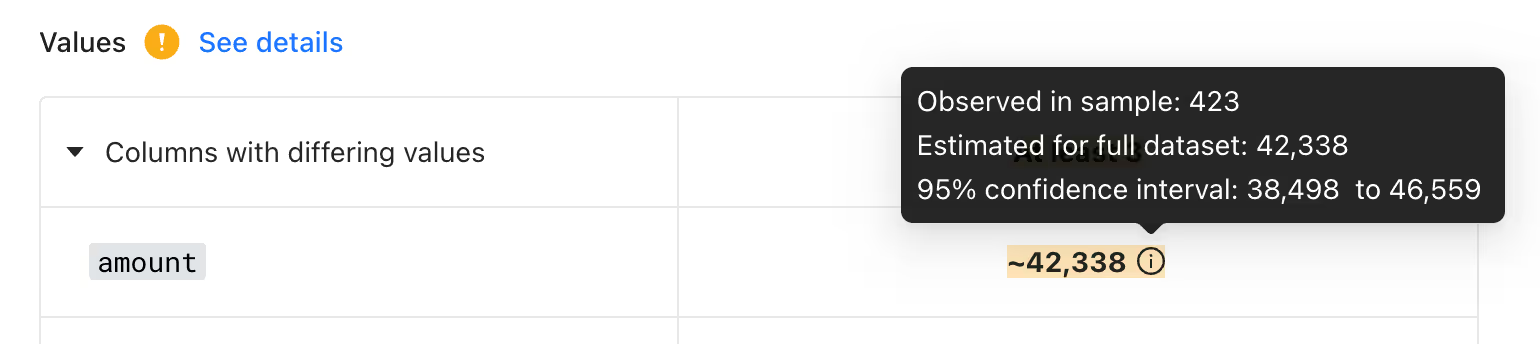

🌱 Diff sampling update

When diffing very large datasets, it’s often helpful to compare a subset of the data rather than every record. While we’ve supported this for a while, we now report estimates for the full datasets, based on the differences found in the samples (along with confidence intervals), rather than reporting results on the samples themselves.
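The changelog doesn't say which estimator Datafold uses. As one standard approach, the sketch below scales the sampled difference rate to the full row count and wraps it in a normal-approximation (Wald) interval with a finite population correction. The function name and signature are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def estimate_total_differences(
    sample_diffs: int, sample_size: int, population_size: int, confidence: float = 0.95
) -> Tuple[float, Tuple[float, float]]:
    """Scale a sample's difference rate to the full table, with a normal-approximation interval."""
    if not 0 < sample_size <= population_size:
        raise ValueError("sample_size must be in (0, population_size]")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    p_hat = sample_diffs / sample_size  # share of sampled rows that differ
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    # Finite population correction: the interval shrinks to zero when the whole table is sampled.
    fpc = math.sqrt((population_size - sample_size) / (population_size - 1)) if population_size > 1 else 0.0
    margin = z * math.sqrt(p_hat * (1 - p_hat) / sample_size) * fpc
    low = max(0.0, p_hat - margin) * population_size
    high = min(1.0, p_hat + margin) * population_size
    return p_hat * population_size, (low, high)
```

For example, 50 differing rows in a 10,000-row sample of a 1,000,000-row table gives a point estimate of about 5,000 differing rows. The Wald interval is known to behave poorly when differences are very rare; a Wilson score interval is a more robust alternative in that regime.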

November 19, 2024

Monitors enhancements

It’s been a few weeks since we announced the launch of our newest product—Datafold Monitors. We’ve been working hard to improve the product based on customer feedback. Below are some of the enhancements we’ve shipped.

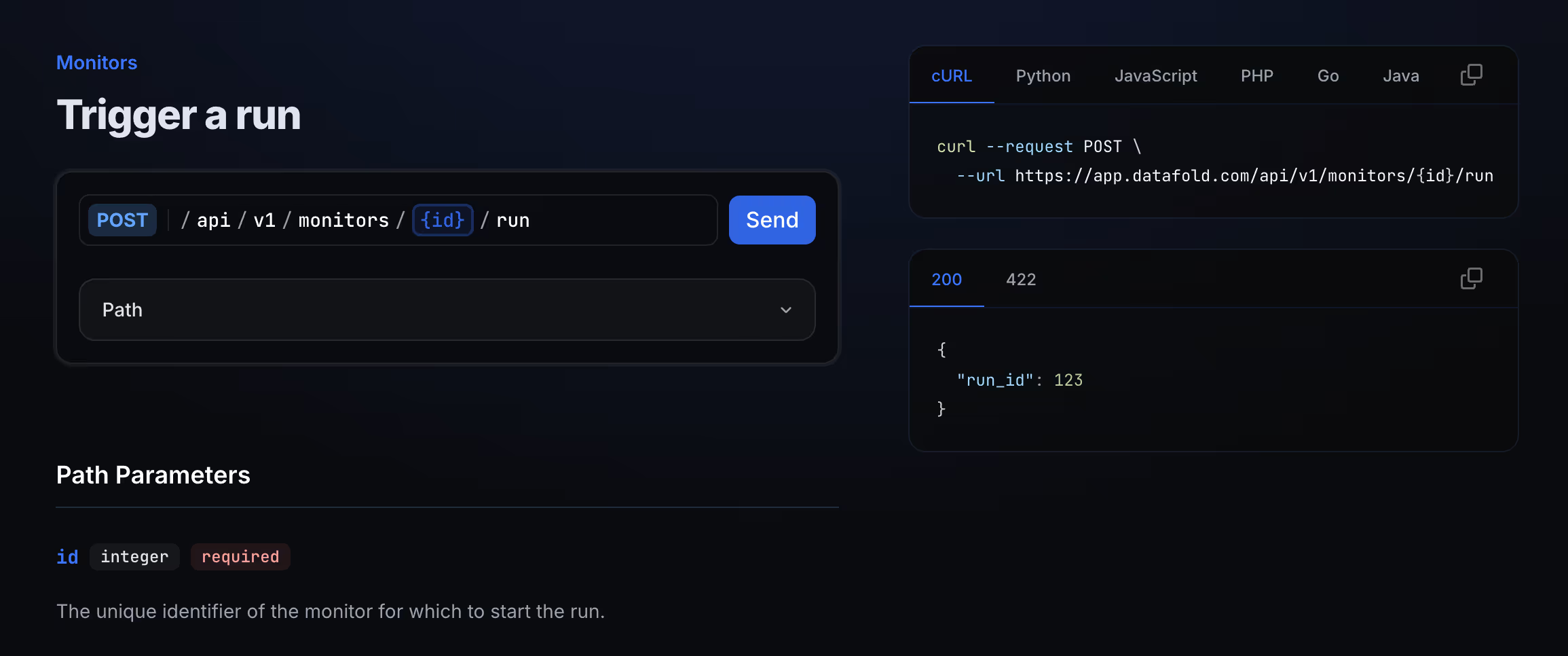

REST API

It’s now possible to create, manage, and run monitors entirely via our new REST API. Anything that’s possible in the UI can be done programmatically as well. Check out the documentation and let us know if you have any feedback.

Organizational tools

For customers with 100s or 1000s of monitors, it’s important to have good tools for staying organized. To that end, we now support custom names, descriptions, and tags for all monitor types.

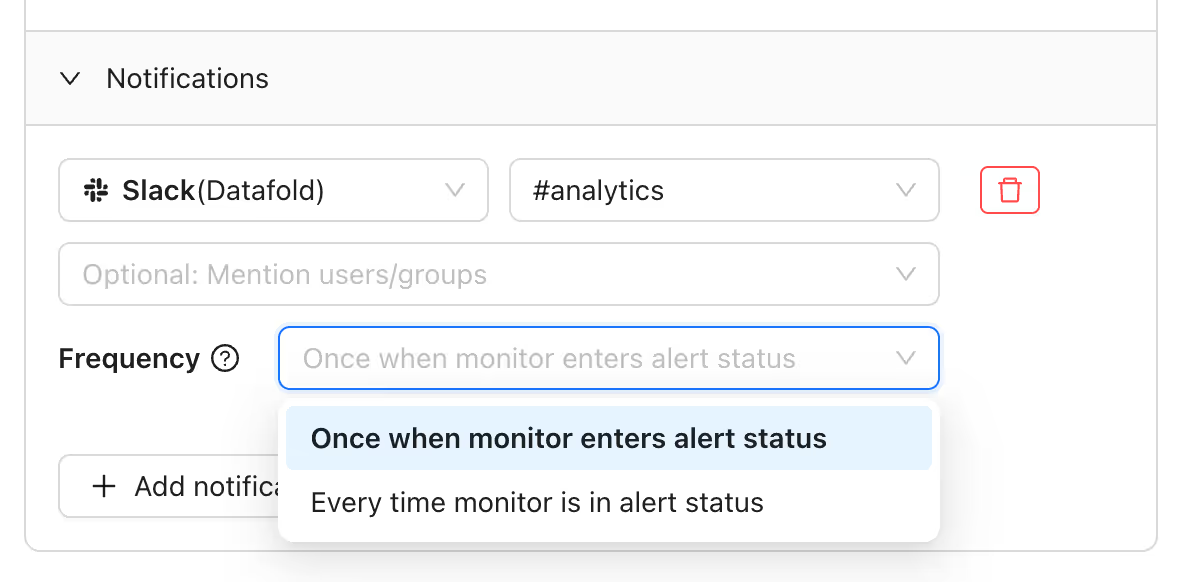

Notifications

We now automatically send recovery notifications when a monitor goes from an alert state back to OK. Additionally, you have more control over the frequency of notifications—i.e. whether to receive a notification every time a monitor alerts, or only the first time it enters alert state.
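The two notification modes and recovery messages amount to a small state machine over monitor runs. This sketch is an assumption about the logic, with hypothetical names; the `send_recovery` flag mirrors the later option to turn recovery notifications off.

```python
from enum import Enum
from typing import Iterable, List


class State(Enum):
    OK = "ok"
    ALERT = "alert"


def notifications(states: Iterable[State], notify_every_alert: bool = True, send_recovery: bool = True) -> List[str]:
    """Return the notifications a sequence of monitor run results would produce."""
    sent: List[str] = []
    previous = State.OK
    for state in states:
        if state is State.ALERT:
            # Either notify on every alerting run, or only when entering the alert state.
            if notify_every_alert or previous is State.OK:
                sent.append("alert")
        elif previous is State.ALERT and send_recovery:
            sent.append("recovered")  # transition from ALERT back to OK
        previous = state
    return sent
```

For the runs OK, ALERT, ALERT, OK, ALERT, the "every time" mode sends two alerts before the recovery, while the "first time only" mode sends one.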

Attach CSV of failed records to Data Test notifications

Data Tests allow you to make assertions about your data, then get alerted when specific records fail those assertions. But when a test fails, you want to understand why as quickly as possible. That’s why we now allow attaching a CSV of failed records directly to notifications, so you don’t have to go digging around in your warehouse for problematic data.
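Conceptually, the attachment is just the failing rows serialized to CSV. The sketch below uses Python's standard `csv` module; the function name and the `max_rows` cap are hypothetical, since the changelog doesn't say whether Datafold limits attachment size.

```python
import csv
import io
from typing import Any, Dict, List


def failed_records_csv(rows: List[Dict[str, Any]], max_rows: int = 1000) -> str:
    """Serialize failed records to CSV text suitable for attaching to a notification."""
    if not rows:
        return ""
    # Union of keys across rows, preserving first-seen order.
    columns = list(dict.fromkeys(key for row in rows for key in row))
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns)  # missing keys are written as ""
    writer.writeheader()
    writer.writerows(rows[:max_rows])  # cap attachment size (hypothetical limit)
    return buffer.getvalue()
```

Note that the `csv` module writes `None` as an empty field, so null values in failing records show up as blank cells.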

Date/times in your local timezone

We get it—just because you’re a data person doesn’t mean you want to do math on timezones 😜 As of this week, you’re now able to set your timezone in the app—or keep everything in UTC if you prefer. Visit Settings > Account to configure this.

November 12, 2024

Our new Power BI integration lets you trace column-level lineage from your source data all the way through to Tables, Reports, and Dashboards. This is extremely powerful for:

- Exploring column-level lineage in Datafold’s Data Explorer

- Understanding the impact of code changes on your downstream BI assets

To give it a try, check out our docs or reach out to our solutions team.

September 26, 2024

Introducing Monitors in Datafold

We're excited to announce that Datafold Monitors are now in GA. Monitors complement our existing features—such as data testing in CI, migration validation, and column-level lineage—to provide our customers with a more comprehensive data quality platform.

We support the following monitor types, each of which addresses a different set of problems:

- Data Diff: Identify discrepancies between source and target databases on an ongoing basis.

- Metric: Use machine learning to flag anomalies in standard metrics like row count, freshness, and cardinality, or in any custom metric.

- Schema Change: Get notified immediately when a table’s schema changes.

- Data Test: Validate your data with custom business rules, either in production or as part of your CI/CD workflow.

All of these monitor types can be created and managed in our application. However, for customers who prefer a more programmatic approach, our Monitors as Code feature allows you to configure monitors via version controlled YAML.

This is just the beginning. Keep an eye out for many exciting updates to come.

July 24, 2024

July 2024 Changelog — Enhanced lineage & Netezza integration

Here’s an overview of what’s new:

1️⃣ Enhanced Looker integration

2️⃣ dbt Exposures now available in the Data Explorer

3️⃣ Netezza integration

4️⃣ Materialize monitor diff results

Enhanced Looker integration

Datafold users can now import field labels and descriptions from Looker, enabling them to view and interact with this metadata directly in the Data Explorer.

dbt Exposures are now available in Datafold lineage

dbt Exposures are now accessible in Datafold's column-level lineage, so you can explore lineage for the downstream applications and use cases defined as exposures and understand how code changes will affect them.

Netezza integration is now available

Netezza is now available as a data source in Datafold. Datafold users can now perform in-database data diffs for tables in Netezza, or across Netezza and another data source (say during a data migration) to access the power of cross-database data diffing.

Materialize full data diff results for Monitors

Teams using cross-database monitors (scheduled cross-database data diffs) can now materialize the full data diff result into their selected database. Quickly analyze discrepancies, log diff results, or perform ad hoc analysis, faster! Simply enable the "Materialize diff results" toggle in a monitor's settings to turn on this functionality.

June 18, 2024

Introducing No-Code CI in Datafold

Setting up automated and efficient CI pipelines can be a challenge.

With our new No-Code CI integration, data teams can easily incorporate data diffing into their code review process—regardless of their data transformation or orchestration tooling—so they can deploy faster and more confidently.

As long as you’re version controlling your data pipeline code, this is for you.

Check out our recent blog post for more information.

May 29, 2024

May 2024 Changelog

Here’s an overview of what’s new:

1️⃣ No-Code CI Testing

2️⃣ Improved Downstream Impact Preview for Pull Requests

3️⃣ Case Insensitivity for String Diffing

No-code CI testing

We know not every data team uses dbt to transform and model their data. Now, Datafold Cloud users can incorporate data diffing into any CI workflow, regardless of orchestrator. You can use the new No-Code CI integration to tell Datafold which tables to diff for each pull request via the Datafold UI. For teams that want to programmatically send Datafold a list of tables to diff for each PR, use the new API functionality.

Now available: Improved downstream impact for pull requests

Datafold extends the concept of version control, similar to tools like GitHub for code, to data itself with Data Diffs. Datafold's CI testing also allows you to see how potential changes in your data will impact other dependent assets, such as downstream dbt models, tables, views, and BI dashboards—which we think is awesome! However, we know for PRs that change many data models, this information can potentially be challenging to digest.

Very soon, we’ll introduce a combined Impact Preview that shows the full set of downstream data assets that may be impacted based on the changes in your pull request—all in a single view.

Case insensitivity for string diffing

Datafold users can now choose to ignore string case sensitivity when diffing; this can be useful if you’ve purposefully changed the casing of a string value in a dbt change, or are okay with "Coffee" and "coffee" being identified as the same!
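A case-insensitive comparison only needs to normalize both strings before checking equality. This sketch uses Python's `str.casefold()`; the function name is hypothetical, and Datafold's own normalization rules aren't documented here.

```python
from typing import Any


def values_differ(a: Any, b: Any, case_sensitive: bool = True) -> bool:
    """Compare two diffed values, optionally ignoring string case."""
    if not case_sensitive and isinstance(a, str) and isinstance(b, str):
        # casefold() is a more aggressive lower() intended for caseless matching.
        return a.casefold() != b.casefold()
    return a != b
```

With `case_sensitive=False`, "Coffee" and "coffee" count as the same value, while non-string values are still compared exactly.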

Happy diffing!

Kira, PMM

April 23, 2024

April 2024 Changelog

What’s new in Datafold:

1️⃣ Dremio support in Datafold Cloud

2️⃣ Sample for cross-database diffs

3️⃣ Improved CI impact view

Dremio integration in Datafold

We are excited to announce the launch of our new integration with Dremio in Datafold Cloud. This integration will enable current (and future!) Dremio users to:

- Accelerate migrations to Dremio with automated data reconciliation

- Enhance dbt data quality with automated testing of dbt models in CI/CD

- Validate data replication into Dremio with Datafold’s cross-database diffing and Monitors

Dremio is the Unified Lakehouse Platform for self-service analytics, designed for flexibility and performance at an affordable cost. With Datafold, the data testing automation platform, Dremio lakehouse users can benefit from higher data development velocity while having full confidence in their data products.

Now supported: Sampling in cross-database diffs

Datafold now supports sampling for cross-database diffs. For large cross-database diffs, use sampling to compare a subset of your data instead of the full dataset. Sampling is configured with a sampling tolerance, which dictates the acceptable level of primary key errors before sampling is disabled; a sampling confidence, which ensures that the sample accurately reflects the entire dataset; and a sampling threshold.

Consolidated impact previews for pull requests

Datafold extends the concept of version control, similar to tools like GitHub for code, to data itself with Data Diffs. Datafold's CI testing also allows you to see how potential changes in your data will impact other dependent assets, such as downstream dbt models, tables, views, and BI dashboards—which we think is awesome! However, we know for PRs that change many dbt models, this information can potentially be challenging to digest.

Very soon, we'll introduce a combined Impact Preview that shows the full set of downstream data assets that may be impacted based on the changes in your pull request—all in a single view.

Happy diffing!

April 1, 2024

New: Introducing Data Replication Testing in Datafold

Monitors: Scheduled cross-database data diffs

Monitors in Datafold are the best way to identify parity of tables across systems on a continuous basis. With Monitors, your team can:

- Run data diffs for tables across databases on a scheduled basis.

- Set error thresholds for the number or percent of rows with differences between source and target.

- Receive alerts via webhooks, Slack, PagerDuty, or email when data diff results deviate from your expectations.

- Investigate data diffs—all the way to the value-level—to quickly troubleshoot data replication issues.
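An error threshold reduces to comparing a diff's result against either an absolute row count or a percentage of rows. The sketch assumes a monitor alerts only when a threshold is strictly exceeded; the names are hypothetical.

```python
from typing import Optional


def breaches_threshold(
    differing_rows: int,
    total_rows: int,
    max_rows: Optional[int] = None,
    max_percent: Optional[float] = None,
) -> bool:
    """True if a diff result exceeds an absolute or percentage error threshold."""
    if max_rows is not None and differing_rows > max_rows:
        return True
    if max_percent is not None and total_rows > 0:
        return 100.0 * differing_rows / total_rows > max_percent
    return False
```

For instance, 5 differing rows out of 1,000 (0.5%) stays within a 10-row limit but breaches a 0.1% limit.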

Datafold will also keep a historical record of your Monitor's data diff results, so you can look back on replication pipeline performance and keep an auditable trail of success.

Demo

Watch Solutions Engineer Leo Folsom walk through how data replication testing works in Datafold.

Happy diffing!

March 25, 2024

March 2024 Changelog

Here’s an overview of what’s new:

1️⃣ Cross-database diffing 2.0

2️⃣ Improved column selection

3️⃣ Datafold API enhancements

4️⃣ Just around the corner: Data replication testing

Cross-database diffing improvements

As we continue to improve this product experience and evolve the testing of data reconciliation efforts, we are invested in making cross-database validation as efficient and impactful as possible for data teams.

I’m excited to share three major improvements in Datafold to make source-to-target validation faster and more impactful:

- Faster cross-database data diffing: Up to 10x faster data diffing for cross-database diffs, reducing time-to-insight and compute costs against your warehouses.

- Real-time diff results: See data differences live as Datafold identifies them, rather than waiting for the entire diff to complete.

- Representative samples: Establish difference "thresholds" to stop diffs once the set number of differences has been found per column, saving your compute costs and time.

Improved column selection

Want to complete a data diff, but only care about a handful of the columns? Save time and compute costs by selecting only specific columns to be compared during a data diff. This is very useful for larger tables where there are known (and acceptable) differences for certain columns.

Datafold API enhancements

Datafold's API now allows you to easily fetch the db.schema.table of materialized diffs. For teams that are materializing diff results and want to programmatically query those tables, having this table path easily accessible via the Datafold API makes it straightforward to automate your custom pipeline.

Happy diffing!

March 19, 2024

March Changelog: How we’re evolving cross-database diffing in Datafold

Performance improvements

Our engineering team has spent months fine-tuning and adjusting our data diffing algorithm and we’re excited to share that teams can now experience up to 10x faster cross-database diffing.

Whether you’re undergoing a migration or performing ongoing data replication, leverage Datafold’s proprietary and performant cross-database diffing algorithm to validate parity across databases faster than manual testing ever could. Not only does this save data teams valuable time, but it reduces compute load and costs on their warehouses.

Real-time diff results

One of the innovations I’m personally most excited to share is real-time diff results. For large data diffs, we understand that you can act on partial information before the entire table diff is complete.

Now, with real-time diff results, the Overview and Value Tabs will populate as Datafold finds differences. How does this impact you?

- If you start seeing real-time value-level differences that you know are wrong, you can stop a diff in its tracks, and identify and fix the problem sooner.

- Use the Overview tab in Datafold to quickly understand the magnitude of differences. For many teams, we recognize that there is often an error threshold or acceptable lack of parity. With real-time diff results, find out sooner whether the diff you’re running is meeting those error expectations, and stop the diff if it’s exceeding them.

No more waiting for a longer-running diff to complete. Simply start seeing differences as we identify them.
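Real-time results and stopping a diff early fit naturally with lazily consumed work. The sketch treats a diff as a stream of segments (for example, key ranges) and stops once a user-chosen error budget is exceeded; the segmenting scheme and function names are assumptions, not Datafold's design.

```python
from typing import Iterable, Iterator, List, Tuple


def running_totals(segments: Iterable[List[str]]) -> Iterator[int]:
    """Yield the cumulative number of differences after each segment is compared."""
    total = 0
    for differences in segments:
        total += len(differences)
        yield total  # a UI could render this partial result immediately


def diff_with_early_stop(segments: Iterable[List[str]], max_differences: int) -> Tuple[int, bool]:
    """Consume segments lazily; stop as soon as the error budget is exceeded."""
    total = 0
    for total in running_totals(segments):
        if total > max_differences:
            return total, True  # remaining segments are never compared
    return total, False
```

Because the segments are pulled from a generator, any segment after the stopping point is never read, which is where the compute savings would come from.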

Find differences faster with the representative samples

With Datafold’s new Per-Column Diff Limit, you can now automatically stop a running data diff once a configurable number of differences has been found per column. Like all of these new cross-database diffing improvements, this feature is meant to help your team find data quality issues that arise during data reconciliation faster, by providing a representative sample of your data differences while reducing load on your databases.

In the screenshot below, we see that exactly 4 differences were found in user_id, but “at least 4,704 differences” were found in total_runtime_seconds. user_id has a number of differences below the Per-Column Diff Limit, and so we state the exact number. On the other hand, total_runtime_seconds has a number of differences greater than the Per-Column Diff Limit, so we state “at least.” Note that due to our algorithm’s approach, we often find significantly more differences than the limit before diffing is halted, and in that scenario, we report the value that was found, while stating that more differences may exist.
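The reporting rule described above can be written in a few lines. The limit of 1,000 in the test is hypothetical (the actual limit used in the example isn't stated), and treating a count equal to the limit as a lower bound is an assumption.

```python
from typing import Dict


def describe_column_differences(found: Dict[str, int], limit: int) -> Dict[str, str]:
    """Report exact counts below the per-column limit and lower bounds at or above it."""
    report = {}
    for column, count in found.items():
        noun = "difference" if count == 1 else "differences"
        if count >= limit:
            # Diffing for this column may have stopped early, so more differences may exist.
            report[column] = f"at least {count:,} {noun}"
        else:
            report[column] = f"{count:,} {noun}"
    return report
```

The reported number is whatever was actually found, which can be well above the limit, matching the "at least 4,704" example.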

Happy diffing!

February 21, 2024

February Changelog

Here’s an overview of what’s new:

1️⃣ Downstream Impact Tab of Data Diff results

2️⃣ Datafold is now available on Azure marketplace

3️⃣ MySQL 🐬 support for cross-database diffing and data reconciliation

4️⃣ Coming soon: Replication testing 👀

And make sure to join us at our next Datafold Demo Day on February 28th! Our team of data engineering experts will walk through some of these newest product updates, and demonstrate how Datafold is elevating the data quality game.

Downstream Impact Tab

We’ve been there: You’ve opened a PR for your dbt project with some code changes you just know are going to impact your head of finance’s core reports. You don’t necessarily know how (or even why), but your domain experience (and gut) tell you to merge with extreme caution.

Now, with the Datafold Downstream Impact tab, you no longer have to fear merging (and breaking) things. In one view, see every downstream asset potentially affected by a code change, from your downstream dbt models to that one dashboard your CFO refreshes every 10 minutes.

The Downstream Impact tab leverages column-level lineage, so table-level downstreams (potentially very many) are purposely excluded when the specific columns connected to them are unchanged in the PR. Talk about less noise, more signal, am I right? 💡
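The noise reduction comes from walking lineage at column granularity instead of table granularity. This sketch models lineage as a hypothetical mapping from (asset, column) pairs to the downstream columns they feed, and reports each reachable asset with its minimum dependency depth. It is an illustration of the idea, not Datafold's lineage engine.

```python
from collections import deque
from typing import Dict, Iterable, List, Tuple

Column = Tuple[str, str]  # (asset name, column name)


def impacted_assets(lineage: Dict[Column, List[Column]], changed: Iterable[Column]) -> Dict[str, int]:
    """Breadth-first walk of column-level lineage from the changed columns.

    Returns each downstream asset with its minimum dependency depth. Assets that
    only consume unchanged columns are never reached, so they are not reported.
    """
    changed = list(changed)
    seen = set(changed)
    queue = deque((column, 0) for column in changed)
    depth: Dict[str, int] = {}
    while queue:
        node, d = queue.popleft()
        for child in lineage.get(node, []):
            if child in seen:
                continue
            seen.add(child)
            depth.setdefault(child[0], d + 1)  # BFS reaches shallower columns first
            queue.append((child, d + 1))
    return depth
```

In the test data, `support_report` reads from `orders.note`, which is not changed, so it never appears even though it sits downstream of the `orders` table.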

Quickly search and sort by dependency depth, type, and name, so you never have to experience a bad dbt deploy again.

The Downstream Impact Tab will populate for any data diff in Datafold Cloud triggered by a CI job, manual data diff run, or an API call.

Azure marketplace listing

We’re excited to announce that Datafold Cloud is now available on the Azure marketplace. This enables data teams to automate testing for their dbt projects, migrations, and ongoing data reconciliation efforts using their pre-committed Azure spend, making data quality testing more accessible than ever.

New MySQL integration

Datafold Cloud is proud to support a new integration for MySQL, so you can leverage Datafold’s fast cross-database diffing to validate parity for migrations or ongoing replication between MySQL and our 13+ other database integrations.

Coming soon: Data replication testing

Perhaps the thing I’m most excited to share with you all over the coming months: Datafold’s approach to monitoring and testing an often overlooked (but critical) part of the stack, data replication pipelines.

We know how important it is for the data you’re replicating across databases to be right. We know this data is often mission-critical—powering core analytics work and machine learning models, and guaranteeing data reliability and accessibility. We recognize that broken replication pipelines and consequential data quality issues have been a persistent, unsolved pain for data engineers.

Datafold’s solution to validating ongoing source-to-target replication is going to continue what we do best: data diffing…but pairing it with net new scheduling and alerting functionality from our end.

If your team is interested in gaining transparency into your replication efforts, feel free to email kira@datafold.com to be added to our beta waitlist.

Happy diffing!

January 25, 2024

January 2024 Changelog

The Datafold team has kicked off the new year with some exciting new product updates. Here’s an overview of what’s new:

- 1️⃣ Azure DevOps + Bitbucket integrations

- 2️⃣ Tabular lineage view

- 3️⃣ Diff metadata now visible in diff UI

- 4️⃣ Support for Tableau Server

- 5️⃣ Coming soon: Data diff Columns tab

Azure DevOps + Bitbucket integrations

Datafold Cloud now supports code repository integrations with Azure DevOps (ADO) and Bitbucket. Similar to our GitHub and GitLab integrations, when a PR is opened in ADO or Bitbucket, Datafold will automatically add a comment that provides an overview of the data diff between your branch and production tables and identifies potential impact on downstream tables and data apps.

Lineage at scale: Introducing Tabular Lineage view

We get it: When your DAG contains thousands of models and downstream BI assets, it can be hard to wade through it in a graphical format. (Spaghetti lines, who?)

We’re excited to share that Datafold Cloud now supports a Tabular Lineage view, so you can filter, sort, and explore lineage in a columnar format (the way data people usually like to, well, interact with data 😂).

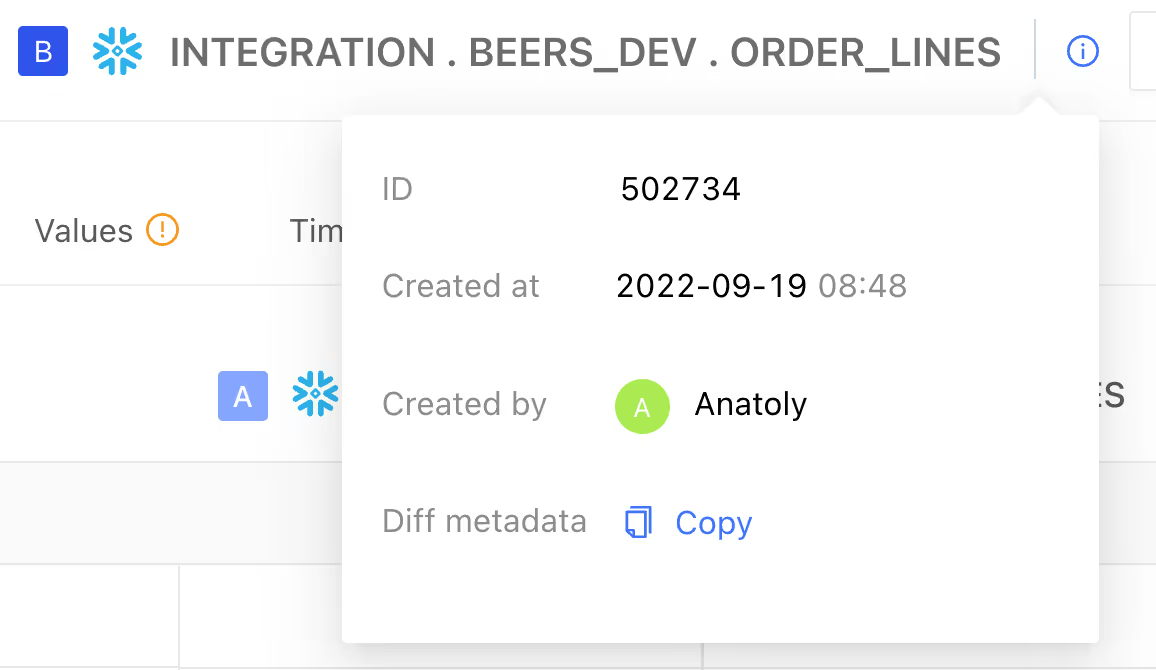

Diff metadata now visible in diff UI

Metadata about diffs (diff start/end time, creator, and runtime) is now visible within a diff result in Datafold Cloud. With these data points easily accessible, you can immediately see whom to ask about a specific diff or dig into diff performance.

Now supported: Tableau Server

Datafold now supports integrating Tableau Server-hosted assets in column-level lineage and within the Datafold CI impact analysis comment, so you can:

- Understand how your data works its way from source → workbook

- Prevent breaking data changes to your core Tableau assets

Datafold's integration with Tableau also works with Tableau Cloud.

Coming soon: Data diff Columns tab

We've heard loud and clear that users want to see the information they need at a glance: one summary, no clicking around. In particular, we want you to see which columns are different (and by how much), and which are the same. All that is available now in one place: the Columns tab of a data diff's results.

You can clearly see the differences and similarities between the two versions of the table being diffed. The results aren't overly general, and they don't bury you in detail (though you can get into the weeds in the Values tab). Like the bowl of porridge Goldilocks finds on her walk through the forest, the Columns tab is just right. This feature will be rolled out to customers over the next couple of weeks.

Please reach out if you want to be an early user, or with any feedback!

Happy diffing!

December 20, 2023

Azure, better diff UX, migrations toolkit, and more!

New product updates include:

- 1️⃣ Support for Azure deployments in Datafold Cloud

- 2️⃣ ICYMI: The 3-in-1 migrations toolkit from Datafold

- 3️⃣ Column remapping for cross-database diffs

- 4️⃣ NEW: Delete diffs and set a data retention policy

- 5️⃣ Tableau workbooks are now visible in Datafold lineage and the CI impact analysis report

Azure support

Datafold Cloud now supports deployment options in Azure, so you can run your data diffs wherever you see fit. As a reminder, Datafold Cloud also supports single-tenant deployment options in Google Cloud and AWS.

The 3-in-1 product toolkit for accelerated migrations

At Datafold, we think data migrations shouldn't suck. That’s why we support a 3-part product experience to plan, translate, and validate your migration quickly. With Datafold, you can use column-level lineage to identify assets to migrate and deprecate, our SQL translator to move scripts from one SQL dialect to another, and cross-database diffing to validate migration efforts at any scale.

Better diff UX!

Smarter diff with partially null primary keys

Previously, Datafold treated a composite primary key as a null primary key whenever one of its columns was null. Now, in Datafold Cloud, you can set a composite primary key that includes a column that is sometimes null. Talk about a small but mighty quality of life improvement for those more complex tables!
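Why this matters is easy to see in SQL terms: `NULL = NULL` is not true, so a plain join on the key columns silently drops rows whose key is partially null. The minimal Python sketch below (an illustration, not Datafold's implementation) pairs rows from two tables by composite key and treats `None` as an ordinary key component. The `match_rows` helper and the sample columns are hypothetical, and the sketch assumes each key is unique within a table.

```python
from typing import Any, Dict, List, Optional, Sequence, Tuple

Row = Dict[str, Any]
Key = Tuple[Any, ...]


def composite_key(row: Row, key_columns: Sequence[str]) -> Key:
    # Tuples compare None == None as equal, so a partially null key such as
    # (1, None) still identifies a row (unlike SQL, where NULL = NULL is not true).
    return tuple(row.get(col) for col in key_columns)


def match_rows(
    source: List[Row], target: List[Row], key_columns: Sequence[str]
) -> Dict[Key, Tuple[Optional[Row], Optional[Row]]]:
    """Pair source and target rows by composite key (hypothetical helper).

    Assumes keys are unique within each table; a missing side is None.
    """
    pairs: Dict[Key, Tuple[Optional[Row], Optional[Row]]] = {}
    for row in source:
        pairs[composite_key(row, key_columns)] = (row, None)
    for row in target:
        key = composite_key(row, key_columns)
        source_row = pairs.get(key, (None, None))[0]
        pairs[key] = (source_row, row)
    return pairs


source = [{"org_id": 1, "region": None, "seats": 10}, {"org_id": 2, "region": "EU", "seats": 5}]
target = [{"org_id": 1, "region": None, "seats": 12}]
pairs = match_rows(source, target, ["org_id", "region"])
# (1, None) pairs both rows, so the seats difference (10 vs 12) can be reported;
# (2, "EU") exists only in the source.
```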

Column remapping in cross-database diffing

If you’re diffing across databases, Datafold Cloud can now diff tables whose column names have changed, using a mapping you provide. For example, you can indicate that ORG_ID in Oracle is ORGID in Snowflake so that Datafold does not treat them as different columns.
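Conceptually, a column mapping just tells the comparison which target column to read for each source column. Here is a minimal Python sketch of that idea; `differing_columns` and the `{source_column: target_column}` mapping format are assumptions for illustration, not Datafold's API.

```python
from typing import Any, List, Mapping


def differing_columns(
    source_row: Mapping[str, Any],
    target_row: Mapping[str, Any],
    column_mapping: Mapping[str, str],
) -> List[str]:
    """Return source-side column names whose values differ in the target row.

    column_mapping is {source_column: target_column}; columns without an
    entry are assumed to keep the same name in both databases.
    Target-only columns are ignored in this sketch.
    """
    differing = []
    for source_col, value in source_row.items():
        target_col = column_mapping.get(source_col, source_col)
        if target_col not in target_row or target_row[target_col] != value:
            differing.append(source_col)
    return differing


oracle_row = {"ORG_ID": 7, "NAME": "Acme"}
snowflake_row = {"ORGID": 7, "NAME": "Acme"}

# Without a mapping, ORG_ID looks like a column missing from Snowflake.
print(differing_columns(oracle_row, snowflake_row, {}))                   # ['ORG_ID']
# With the mapping, the two rows match.
print(differing_columns(oracle_row, snowflake_row, {"ORG_ID": "ORGID"}))  # []
```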

More flexible deletion and retention policies

Now in Datafold Cloud, you can easily delete diffs and create custom retention policies for your diffs. In addition to deleting individual diffs, you can configure Datafold to automatically delete all diffs older than X days. What does this mean for you? Greater control of your data and (more importantly) happy legal and security teams 😉.
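A retention policy of X days boils down to a cutoff timestamp. The Python sketch below shows only that selection logic; `DiffRecord` and `expired_diffs` are hypothetical names, and how Datafold actually schedules and performs the deletions is not described here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class DiffRecord:
    diff_id: int
    created_at: datetime  # timezone-aware creation timestamp


def expired_diffs(
    diffs: List[DiffRecord], retention_days: int, now: Optional[datetime] = None
) -> List[DiffRecord]:
    """Return diffs created more than retention_days ago (deletion candidates)."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [d for d in diffs if d.created_at < cutoff]


now = datetime(2023, 12, 20, tzinfo=timezone.utc)
diffs = [DiffRecord(1, now - timedelta(days=45)), DiffRecord(2, now - timedelta(days=3))]
print([d.diff_id for d in expired_diffs(diffs, retention_days=30, now=now)])  # [1]
```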

Workbooks now supported in Datafold Cloud Tableau integration

Tableau workbooks are now visible in Datafold Cloud column-level lineage and the CI impact analysis report! If your team is struggling with the noise of Sheets in lineage or the Datafold CI comment, make sure to check this out.

December 5, 2023

Datafold's 3-in-1 Data Migration Toolkit

Datafold is slinging updates to support data migrations. With cross-database diffing for data reconciliation, SQL translation, and column-level lineage, the daunting endeavor of data migration can succeed instead of running over budget, behind schedule, and never quite finishing.

Cross-Database Diffing between Legacy and New Databases

Diffing between databases is critical to ensuring consistency between old and new data. Datafold has been shipping new database connectors at a rapid pace. Critically, Datafold Cloud users can now diff between different databases at scale (we’re talking billions of rows).

SQL Translator

With Datafold’s SQL Translator, you can efficiently and accurately convert SQL from the old dialect to the new.

It’s like Google Translate, but for your SQL.

Datafold’s SQL Translator can be used to translate thousands of lines of legacy code (such as stored procedures, DDL, and DML) into the dialect of your new data system. Oh, you can also use it for quick syntax checks as you write ad hoc queries.

Putting it all together

These new capabilities add to Datafold’s existing suite of tools, including our Column-Level Lineage graph, which can be used to identify what to migrate.

November 13, 2023

Product Launch: Downstream Tableau Assets Now Accessible in Pull Request and Lineage

We’re excited to announce the new Tableau integration for Datafold Cloud that shows users the Tableau Data Sources, Sheets, and Dashboards that could be impacted by your dbt models.

These Tableau assets will be visible in the Column-Level Lineage explorer in Datafold Cloud…

…as well as right within your pull request:

So your team has complete visibility into the Tableau assets that could be changed by your code updates.

With the Tableau Integration for Datafold Cloud, users can see in detail how their data travels through their stack and prevent data quality issues from reaching one of the most important tools in their business.

FAQ

What about dbt Exposures?

dbt Exposures require manual configuration, which is not scalable or automated. With Datafold Cloud’s Tableau Integration, your column-level lineage and impact analysis just works out-of-the-box.

Is this only for dbt models?

Nope! Tableau assets that are downstream of any data warehouse object will appear in Datafold Cloud Column-Level Lineage.

October 30, 2023

Datafold Changelog — October 2023

The Datafold team has been hard at work improving your experiences with data diffing, Datafold Cloud, and new product innovations. Here’s an overview of what’s new:

1️⃣ Microsoft SQL Server and Oracle support in Datafold Cloud

2️⃣ Cyclic dependency identifier

3️⃣ Auto-type matching

4️⃣ New and improved ✨ Datafold docs ✨

5️⃣ E X C I T I N G new product betas 👀

🏘️ New database connectors: SQL Server & Oracle

Don’t let your data stack stand between you and high-quality data. Use Datafold Cloud’s new Microsoft SQL Server and Oracle connectors to data diff wherever you need it.

🔀 Improved UX when cyclic dependencies appear in Datafold Lineage

🤚 Raise your hand if you’ve ever created a cyclic dependency 🙈? Now, when you’ve created this data modeling no-no, Datafold Cloud will alert you to the cycle and identify the dependencies caught in that loop. This can help your team quickly spot bad practices or incorrectly modeled data.
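Finding such a loop is a classic depth-first search problem. The following Python sketch illustrates the general technique (it is not Datafold's code): it walks a hypothetical model graph, given as a dict of downstream edges, and returns the first cycle it finds as an ordered list of models.

```python
from typing import Dict, List, Optional


def find_cycle(graph: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one dependency cycle (first node repeated at the end), or None.

    graph maps each model to the models that depend on it (downstream edges).
    """
    UNVISITED, IN_PROGRESS, DONE = 0, 1, 2
    state: Dict[str, int] = {}
    path: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        state[node] = IN_PROGRESS
        path.append(node)
        for child in graph.get(node, []):
            child_state = state.get(child, UNVISITED)
            if child_state == IN_PROGRESS:
                # child is already on the current path, so we have closed a loop.
                return path[path.index(child):] + [child]
            if child_state == UNVISITED:
                cycle = visit(child)
                if cycle:
                    return cycle
        path.pop()
        state[node] = DONE
        return None

    for node in graph:
        if state.get(node, UNVISITED) == UNVISITED:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


lineage = {
    "stg_orders": ["int_orders"],
    "int_orders": ["fct_orders"],
    "fct_orders": ["stg_orders"],  # the modeling no-no: feeds back upstream
    "dim_users": ["fct_orders"],
}
print(find_cycle(lineage))  # ['stg_orders', 'int_orders', 'fct_orders', 'stg_orders']
```

Nodes are marked DONE once fully explored, so shared downstream models (a diamond shape) are not mistaken for cycles.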

✨ New and improved Datafold Docs

The Datafold docs have been given a facelift! Our new docs are easily searchable and organized by use case so you can get the most out of Datafold Cloud.

⚡ Automatic type matching

Now, if the tables being diffed contain columns with the same name but with one of the following type pairs...

- int <-> string

- decimal <-> string

- int <-> decimal

...Datafold will automatically cast and compare them: no more unhelpful type mismatches. This means you get actually useful diff results instead of a generic "type mismatch" error. Datafold is all about diffing, and we don’t want type mismatches to get in your way!
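To make the idea concrete, here is a Python sketch of cast-then-compare for the three pairs listed above. Casting everything to `Decimal` is our choice for the illustration, since it avoids float rounding; Datafold's actual casting rules (precision, whitespace, formatting) may differ.

```python
from decimal import Decimal, InvalidOperation
from typing import Any


def _is_castable(value: Any) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, Decimal, str)) and not isinstance(value, bool)


def values_match(a: Any, b: Any) -> bool:
    """Compare two values, casting int/decimal/string pairs to Decimal first."""
    if type(a) is not type(b) and _is_castable(a) and _is_castable(b):
        try:
            return Decimal(str(a)) == Decimal(str(b))
        except InvalidOperation:
            return False  # e.g. "n/a" vs 1: not numeric, so count it as a difference
    return a == b


print(values_match(42, "42"))               # True  (int <-> string)
print(values_match(Decimal("3.10"), "3.1"))  # True  (decimal <-> string)
print(values_match(7, Decimal("7.00")))      # True  (int <-> decimal)
print(values_match(1, "n/a"))               # False
```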

👀 Just around the corner: Exciting new product launches!

The Datafold team is excited for fall and winter. And not because of the plethora of holidays, but because of the insanely exciting new product launches that are coming to your Datafold instance very soon:

- 📈 Tableau BI integration in column-level lineage and impact analysis

- ⚔️ Cross-database diffing in Datafold Cloud for accelerated migrations and replication validation. Take a sneak peek of this new feature here.

- 🪣 Bitbucket git support

- …and more?!?!

Happy diffing!

September 20, 2023

New: Datafold Looker integration, PK inference, and improved CI printout

🐦❤️👁️ Datafold Cloud Looker Integration

ICYMI, we launched the Datafold Cloud Looker Integration, bringing enhanced lineage and impact analysis to your dbt project and beyond. With the Looker integration, you can:

- Visualize Looker assets (Explores, Views, Dashboards, and Looks) in Datafold’s column-level lineage

- See potentially impacted Looker assets from your dbt code change in the Datafold CI comment

Yes, we think this is some very cool tech (what can we say, we’re a bit biased 😂). But more importantly, it means you stop getting those “you broke my dashboard” DMs 😉.

⚡Automatic primary key inference for incremental and snapshot models

Previously, Datafold Cloud identified primary keys from an additional YAML config or the dbt uniqueness test. Now, when you define a unique_key in your dbt model config, Datafold Cloud will automatically infer that it is the primary key to use for diffing. Unique keys defined in dbt can be either single-column or composite keys. This is particularly useful for more complex incremental and snapshot models, where you may want to define a unique key but not test uniqueness in dbt.
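To make the inference concrete, here is a small Python sketch that reads `unique_key` from the `config` of each model or snapshot in a dbt `manifest.json` (already loaded as a dict) and normalizes it to a list of key columns. It reflects our understanding of the manifest layout and is not Datafold's implementation; the sample manifest is made up.

```python
from typing import Any, Dict, List


def infer_primary_keys(manifest: Dict[str, Any]) -> Dict[str, List[str]]:
    """Map each model/snapshot unique_id to its primary key columns."""
    keys: Dict[str, List[str]] = {}
    for unique_id, node in manifest.get("nodes", {}).items():
        if node.get("resource_type") not in ("model", "snapshot"):
            continue
        unique_key = node.get("config", {}).get("unique_key")
        if not unique_key:
            continue  # no unique_key configured: this sketch infers nothing
        # dbt accepts a single column name or a list of columns (composite key).
        keys[unique_id] = [unique_key] if isinstance(unique_key, str) else list(unique_key)
    return keys


manifest = {
    "nodes": {
        "model.shop.fct_orders": {"resource_type": "model", "config": {"unique_key": "order_id"}},
        "snapshot.shop.customers_snapshot": {
            "resource_type": "snapshot",
            "config": {"unique_key": ["customer_id", "store_id"]},
        },
        "test.shop.not_null_orders": {"resource_type": "test", "config": {}},
    }
}
print(infer_primary_keys(manifest))
```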

🔊 Enhanced Datafold Cloud CI printout: Goodbye noise, hello signal

The Datafold CI comment will soon highlight which values differ between dev and prod by pulling them to the top of the comment. This will reduce alert fatigue and make it much easier to see whether your code changes will change the data (and how), or keep it the same.

Rows, columns and PKs that are not different will be grouped together under the NO DIFFERENCES dropdown.

Please note that this feature is currently being rolled out to existing customers over the next few weeks.
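The grouping described above amounts to partitioning columns by their difference counts. A minimal Python sketch, assuming a hypothetical `{column: number_of_differing_values}` summary as input (not the actual data behind the Datafold CI comment):

```python
from typing import Dict, List, Tuple


def group_for_comment(diff_counts: Dict[str, int]) -> Tuple[List[str], List[str]]:
    """Split columns into (different, no_differences).

    Differing columns come first, most-changed first, so they lead the comment;
    unchanged columns are collected for a collapsed NO DIFFERENCES section.
    """
    different = sorted(
        (col for col, count in diff_counts.items() if count > 0),
        key=lambda col: (-diff_counts[col], col),
    )
    unchanged = sorted(col for col, count in diff_counts.items() if count == 0)
    return different, unchanged


different, unchanged = group_for_comment({"plan": 12, "id": 0, "amount": 3, "email": 0})
print("Different:", different)       # Different: ['plan', 'amount']
print("NO DIFFERENCES:", unchanged)  # NO DIFFERENCES: ['email', 'id']
```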

August 29, 2023

Downstream Looker assets in Pull Requests and Lineage

We’ve launched a Looker integration that shows Datafold Cloud users the Looker Views, Explores, Looks, and Dashboards that could be impacted by your dbt models.

These Looker assets will be visible in Column-Level Lineage in the Datafold Cloud UI …

… as well as right within your pull request:

August 22, 2023

VS Code extension, improved Datafold Cloud CI, and upcoming launches

The Datafold team has been hard at work improving your experiences with data diffing, Datafold Cloud, and new product innovations. Here’s an overview of what’s new:

1️⃣ Datafold VS Code Extension

2️⃣ Quality of life product improvements in Datafold Cloud (intuitive column remapping in CI and saying goodbye 👋 to stuck CI)

3️⃣ Some very exciting product launches on the horizon (hint: BI tool integrations in Datafold Cloud)

🚀 Datafold VS Code extension

ICYMI, we launched the Datafold VS Code extension: a powerful new developer tool that brings data diffing directly to your dev environment. Use the Datafold VS Code extension to quickly run and diff dbt models in a clean GUI, and develop dbt models with confidence and speed.

In addition, by installing the Datafold VS Code extension, you’ll receive free 30-day trial access to value-level differences—a Datafold Cloud exclusive (❗) feature. Join us in the #tools-datafold channel in the dbt Community Slack for feedback and any questions about this 🙂.

☁️ Datafold Cloud improvements

Column remapping in CI comments

If your PR includes updates to column names, you can specify these updates in your git commit message using the following syntax: datafold remap old_column_name new_column_name. That way, Datafold will understand that the renamed column should be compared to the column in the production data with the original name 🙏.

With a remapping in your commit message, Datafold recognizes that a column has been renamed instead of treating it as one column removed and another added.

For example, if the column sub_plan is renamed to plan, adding datafold remap sub_plan plan to the commit message tells Datafold that these are the same column. This feature is particularly useful if there are changes to upstream data sources that impact many downstream models.
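Extracting these directives from a commit message is a simple pattern match. The Python sketch below is a hypothetical parser for illustration only; Datafold parses the directive itself, and its exact rules (allowed characters, case sensitivity, multiple remaps) may differ from the regular expression assumed here.

```python
import re
from typing import Dict

# Matches "datafold remap <old_column_name> <new_column_name>".
# Assumes plain identifiers (letters, digits, underscores); the real parser may accept more.
REMAP_DIRECTIVE = re.compile(r"datafold remap (\w+) (\w+)")


def parse_remaps(commit_message: str) -> Dict[str, str]:
    """Return {old_column_name: new_column_name} for every remap directive."""
    return dict(REMAP_DIRECTIVE.findall(commit_message))


message = """Rename sub_plan to plan in dim_subscriptions

datafold remap sub_plan plan
"""
print(parse_remaps(message))  # {'sub_plan': 'plan'}
```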

Faster, leaner, and smarter Datafold in CI

Datafold is all about giving you the information you need, where and when you need it, as soon as possible. That includes getting out of the way quickly when it's not yet time to data diff. Now, when your dbt PR job does not complete for any reason, Datafold will detect that right away and cancel itself, allowing your CI checks to complete. Everyone loves faster (unstuck) CI!

👀 Coming soon - betas and upcoming launches

Keep an eye out for exciting developments on:

- 📈 Evolved lineage with Looker and Tableau integrations in Datafold Cloud. If your team is interested in seeing the Looker integration live, come join us at an upcoming Datafold Cloud Demo!

- 🔀 Cross-data warehouse diffing for accelerated database migrations and validating data replication.

- ...and more!

Happy diffing!

August 1, 2023

🆕 Announcing the Datafold VS Code Extension

We’ve launched the Datafold VS Code Extension, a new developer experience tool that integrates data quality testing, data diffing, and Datafold into your development workflow.

The VS Code extension is an enhancement of the open source data-diff product from Datafold. Using the extension, you can easily install open source data-diff, run your dbt models, and see immediate diffing results between your dev and prod environments in a clean GUI—all within your VS Code IDE.

⬇️ Install the Datafold VS Code Extension

You can install the Datafold VS Code Extension by using the VS Code Extension tab.

💻 Data diff a dbt model using the GUI

Once you’ve followed the simple steps in our documentation to get started, you’ll be able to diff any dbt model or set of models using the simple GUI.

First, open the Datafold Extension by clicking the Datafold bird icon on the left-hand side of your VS Code window. Then, click any model's "play" button to run a data diff between the development and production versions of that model.

💡 Be sure to dbt build or dbt run any models that you plan to edit or diff, to ensure relevant development data models and dbt artifacts exist.

⚒️ Data diff your most recent dbt run or build

You can also use the “Datafold: Diff latest dbt run results” command in the VS Code command palette. This automatically diffs the group of models built in the last dbt build or dbt run.

🔎 Explore value-level data diff results

By installing the Datafold VS Code extension, you’ll receive free 30-day trial access to value-level differences, a Datafold Cloud exclusive feature (❕). To see and interact with value-level differences, click the blue "Explore values diff" button next to the "Values" section.

👁️ Data diff in real time as you develop with Watch Mode

In the settings of the Datafold VS Code extension, you can enable "Diff watch mode." With watch mode on, the Datafold VS Code Extension will automatically run diffs after each dbt invocation that changes the run_results.json of your dbt project. Turn on this setting if you want diffs to be automatically run between changed dbt models.
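Conceptually, watch mode reacts to changes in dbt's `run_results.json`. The Python sketch below shows one way to detect such a change, by hashing the file's contents between checks. The `RunResultsWatcher` class is hypothetical, and the extension may well detect changes differently (for example, through VS Code's own file-watching APIs).

```python
import hashlib
from pathlib import Path
from typing import Optional


class RunResultsWatcher:
    """Detect when a dbt run_results.json file changes between checks."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self._last_digest: Optional[str] = self._digest()

    def _digest(self) -> Optional[str]:
        if not self.path.exists():
            return None
        return hashlib.sha256(self.path.read_bytes()).hexdigest()

    def changed(self) -> bool:
        """Return True once per content change; a deleted file does not count."""
        current = self._digest()
        if current != self._last_digest:
            self._last_digest = current
            return current is not None
        return False
```

In a polling loop, you would call `changed()` every few seconds and trigger a diff of the affected models whenever it returns True.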

🎥 Demo video

Watch Datafold Solutions Engineer Sung Won Chung install and use the Datafold VS Code extension!

📖 Resources

For additional resources, please check out the following:

- Detailed docs on the Datafold VS Code functionality

- Blog post on why we built this extension, and where we see it going in the future

Happy diffing!

July 1, 2023

Skip diffs, advanced filters, and a beta Looker integration

We’re excited to share some new product updates that give you greater control over what gets diffed, make it easier to work with diffs and their results through advanced filtering, and help you identify how code changes impact your BI tools.

Here’s an overview of what’s new:

- Skip diffs with commit messages

- More powerful values filtering

- Datafold’s new Looker integration pre-release

⏩ Skip diff functionality with commit message

We get it—not every commit needs a diff! Now, you can choose to skip a diff generated by a commit by adding this string (datafold-skip-ci) in your commit message. By adding this string anywhere in your commit message, your commit will not trigger a Datafold CI run.

This feature is particularly useful if you’re adding in hotfix commits, committing many commits back-to-back in a short timeframe, or looking to reduce compute costs from unnecessary diff runs.

➖ Negative filtering in search

Never has filtering been more intuitive! We recently added negative search to the Lineage data explorer. By adding a dash (-) before a term you want to exclude, you can more easily filter on specific schema patterns (instead of deselecting those that don’t meet your criteria). We’ve also added support for * and ? wildcards, where * matches any number of characters and ? matches any single character.

Examples:

- ORG_ACTIVITY -DEV will match any asset name that contains ORG_ACTIVITY and does not include the string DEV

- RUDDERSTACK*MARKETING will match any asset name that contains RUDDERSTACK followed by MARKETING at any point in the string

- PR_???_ will match any asset name that contains PR_ followed by any 3 characters and then _. For example, PR_???_ will return PR_123_ and exclude PR_12_ from search results
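The search semantics above can be approximated with Python's standard `fnmatch` module: wrap each term in `*` to get "contains" matching, require every plain term, and treat a leading `-` as exclusion. This is a sketch of the described behavior, not Datafold's search code. It matches case-sensitively, and, unlike the documented syntax, `fnmatch` also treats `[...]` as a character class.

```python
from fnmatch import fnmatchcase
from typing import List


def matches_query(asset_name: str, query: str) -> bool:
    """True if asset_name satisfies every whitespace-separated term in query."""
    for term in query.split():
        exclude = term.startswith("-")
        pattern = term[1:] if exclude else term
        # Wrap in * so a term matches anywhere in the name ("contains").
        # fnmatch already gives * = any run of characters, ? = one character.
        found = fnmatchcase(asset_name, f"*{pattern}*")
        if found == exclude:  # excluded term present, or required term missing
            return False
    return True


def search(asset_names: List[str], query: str) -> List[str]:
    return [name for name in asset_names if matches_query(name, query)]


assets = ["ORG_ACTIVITY", "ORG_ACTIVITY_DEV", "RUDDERSTACK_WEB_MARKETING",
          "MARKETING_RUDDERSTACK", "PR_123_ORDERS", "PR_12_ORDERS"]
print(search(assets, "ORG_ACTIVITY -DEV"))      # ['ORG_ACTIVITY']
print(search(assets, "RUDDERSTACK*MARKETING"))  # ['RUDDERSTACK_WEB_MARKETING']
print(search(assets, "PR_???_"))                # ['PR_123_ORDERS']
```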

💥 More powerful diffs and values filtering

We’ve added new filtering capabilities in your diffs log and values-level diffs to make searching for diffs (and potential errors) faster and easier.

Quickly filter out diffs with differences

Using the Result filter in your log of Data Diffs, simply filter on Different to find only diffs where there were differences.

Filter columns in the UX

For Data Diff results with many differing columns, you’re now able to search and filter columns at scale—no more never-ending scrolling to the right to find the column you need!

To use, open the “Show Filters” menu to select and sort across your diff results at scale.

Filter columns by value

For easier value-level diff navigation, you can filter on specific column values. Simply click the gray filter symbol to the right of a column name and enter the value you want to filter for. For example, look up a primary key that’s giving you trouble!

👭 Join our Looker integration beta!

We’re very excited to share a pre-release of Datafold's new Looker integration! If your business uses Looker for reporting, you can enable Looker Views in Datafold’s lineage explorer and see potentially impacted Looker Views in Datafold’s CI comment—bringing impact analysis beyond your dbt project.

If you have any interest in trying out the new Datafold Cloud Looker integration early, please sign-up here.

👀 Coming soon

Keep an eye out for exciting developments on:

- 💻 Enhanced developer experience with our ✨new✨ VS Code Extension: click here if you would like to be a beta tester 🧑🔬

- 🔀 Cross-data warehouse diffing (pssst if you're interested in trying the alpha for this, please respond to the product newsletter email or email gleb@datafold.com)

- 📈 More BI tool integrations

- ...and more!

June 1, 2023

Data Diff Management + Version Control Integration

We’ve increased the amount of context flowing from your GitHub & GitLab integrations into the Datafold user interface so you can more clearly understand the relationship between your diffs and specific commits and pull requests.

Filter Data Diffs by the pull request creator

Easily filter diffs by GitHub or GitLab username, and trace each diff back to the specific pull request or commit that triggered it.

Data Diffs grouped by pull request

This makes it easier to navigate between your pull request, commit history and the associated diffs, tracking changes and validation over time.

Grouped diff deletion and cancellation

You can now select a group of diffs and click the Delete Data Diff or Cancel Data Diff options in the top right section of the page.

Streamlined Data Diff Results

We’ve shortened the feedback loop in our results pages to rapidly show more relevant information. For example, you’ll now see column-level metrics earlier in the CI/CD and command-line data-diff results. We’ll also show downstream app dependencies for each Data Diff within the UI, allowing you to quickly get the appropriate lineage for a given downstream dependency.

May 1, 2023

data-diff + dbt

We have released the dbt integration for our open-source data-diff tool. Data-diff helps to quantify the difference between any two tables in your database. You can now see the data impact of dbt code changes directly from your command line interface. No more ad-hoc SQL queries or aimlessly clicking through thousands of rows in spreadsheet dumps.

If you use dbt with Snowflake, BigQuery, Redshift, Postgres, Databricks, or DuckDB, try it out and share your feedback. It only takes a couple of settings and a one-line command to see your data diffs in development: `dbt run --select <model(s)> && data-diff --dbt`

This shows the state of your data before and after your proposed code change:

Use this dbt + data-diff integration to quickly ensure your code changes have the intended effect before opening up a pull request.

We’re excited to hear your feedback via the project’s GitHub page or the #tools-datafold channel in the dbt Slack community.

March 31, 2023

Diffing Hightouch models in CI/CD

We’ve all been there - accidentally breaking downstream dependencies that don’t live in your warehouse. How could you possibly have known what another team’s pesky filter was going to be? Well, your business intelligence, marketing & operations teams can sleep more soundly knowing that your data team has full visibility into these types of breaking changes, and can prevent them before they happen.

Ship faster and more confidently after Datafold compiles and materializes Hightouch models based on each branch of a change, and then diffs them to flag any potential changes in the query output. We see teams moving towards faster and actionable data pipelines every day, so confidence in every change is vital to keeping your team humming along.

Diffs of Hightouch models will show up alongside your standard diff results in GitHub comments and within the Datafold app.

To get started, first configure your Hightouch account within Datafold. Then, since this feature is still in beta, opt-in here to enable it!

March 24, 2023

CI Jobs Management

It’s now even easier to manage CI runs within Datafold, and we’ve added several navigation improvements to help you manage your CI jobs at scale.

- Jobs Tab - Find and filter for your CI job quickly via the Datafold Jobs tab, which is now visible to all users.

- Status Page - We’ve added a more detailed CI job status page with a breakdown of individual steps and results.

- Cancel + Rerun CI Jobs - You can now easily cancel running jobs or rerun jobs within the Datafold user interface.

All users now have access to the Jobs user interface, where they can see a CI Job results page and view the associated individual Data Diffs. Each CI Job results page shows the status of all data diffs and intermediate steps, and lets you cancel an active CI Job run. We’re excited to hear your feedback.

Clearer Diff Sampling Logic

Sampling diff results is helpful for speedy and efficient checks of extremely large data sets. However, sometimes you need to ensure 100% test coverage of every single row, even for large data sets. To help, we’ve made it clearer when and where data will be sampled during the in-app diff creation workflow.

You can now explicitly disable sampling for a diff. For users running data migrations, where user acceptance testing requires diffing a dataset in its entirety, this is now clearer and easier.

January 16, 2023

Introducing Slim Diff in CI/CD

- Slim Diff helps teams prioritize business-critical models in CI/CD workflows by giving them control over exactly which models to diff on each pull request. When enabled, Slim Diff runs data diffs only for models specified in dbt metadata, and skips models that aren’t explicitly tagged or are excluded from data diffing.
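Selection driven by dbt metadata can be sketched as a filter over the modified models' `meta` config. In the Python sketch below, the `datafold_slim_diff` and `datafold_exclude` meta keys are hypothetical placeholders, not Datafold's actual configuration keys (see the Datafold docs for those); the point is only the shape of the logic: diff explicitly tagged models and skip excluded ones.

```python
from typing import Any, Dict, Iterable, List


def models_to_diff(nodes: Dict[str, Dict[str, Any]], modified: Iterable[str]) -> List[str]:
    """Pick which modified dbt models to diff under a Slim Diff-style policy."""
    selected = []
    for unique_id in modified:
        meta = nodes.get(unique_id, {}).get("config", {}).get("meta", {})
        # Hypothetical meta keys, for illustration only.
        if meta.get("datafold_exclude"):
            continue  # explicitly excluded from data diffing
        if meta.get("datafold_slim_diff"):
            selected.append(unique_id)  # explicitly tagged for diffing
    return selected


nodes = {
    "model.shop.fct_revenue": {"config": {"meta": {"datafold_slim_diff": True}}},
    "model.shop.stg_events": {"config": {"meta": {}}},
    "model.shop.pii_users": {"config": {"meta": {"datafold_slim_diff": True, "datafold_exclude": True}}},
}
print(models_to_diff(nodes, ["model.shop.fct_revenue", "model.shop.stg_events", "model.shop.pii_users"]))
# ['model.shop.fct_revenue']
```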

Column Remapping in Data Diff creation flow

- Quickly remap columns within the Data Diff UI or API creation flow for known column name changes to ensure all columns are compared correctly.

Schema Comparison Sorting

- Faster schema comparisons to see what changed inline, especially when column order has changed.

Cancel In-Progress Data Diffs

- Now you can quickly cancel currently running diffs from both the Data Diff results and the administrator interface. As before, you can also cancel all diffs within a CI run from the administrator interface.

Globally exclude tables from CI/CD diffs

- Use your dbt metadata to exclude particular folders or models from being tested against in CI/CD workflows. Use cases vary from excluding sensitive tables to unsupported downstream usages. Your data team can configure Datafold to be aligned with their priorities.

December 8, 2022

Lightning-fast in-database comparisons for the data-diff library + DuckDB support

- Have you ever wanted to quickly and easily get a diff comparison of two tables in your dbt development workflow? Now you can! Our wonderful Solutions Engineers spun up a tutorial on how to use our open-source data-diff library to find potential bugs that unit testing or monitoring would have missed.

- Additionally, our data-diff community contributors have continued to improve the product - including adding DuckDB support. We appreciate the support @jardayn!

- The latest release of Datafold’s free, open-source data-diff library is optimized for even faster Data Diffs within the same database. Compare any two tables within a warehouse and receive a detailed breakdown of schema, row and column differences.

Improved Diff Results Sorting and Filtering

- We’ve added improved sorting and filtering interfaces to the Data Diffs analysis workflow, making it easy to find specific rows within your diff results. For example, if you’re trying to confirm that the values for a particular primary key in your sea of modified data changed exactly as expected, filter for the specific primary key or changed column value you’re looking for.

CSV Export

- You can now export CSVs of Data Diff results and primary keys that are exclusive to one of the datasets in your comparison! This is perfect for debugging and reconciling missing data between two data sets, and sharing that information across your organization.

- Don’t forget you can always materialize your Data Diff results to a table in your database to join them natively with your source data or analyze the differences more deeply. Enabling this setting in the Data Diff creation flow, via our API or the Datafold app, creates a table in your temporary schema with matched rows, values, and flags indicating which columns differ.

Materialize diff results to table is an option within the Data Diff creation workflow in both the Datafold App and our REST API.

September 19, 2022

Lineage Usage Metrics

- Column and Table-level query metrics in Lineage - right-click on any table or column reference within the Datafold Lineage UI to view how many times a particular user account has read or written to a particular table, allowing you to identify commonly or infrequently used data points.

- Popularity metrics now include all cumulative downstream usage of column or table, showing the total downstream reads for a particular client.

- Popularity Filters - Filter lineage nodes by their relative popularity compared to all indexed tables in Lineage

Data Diff Improvements

- Cancel CI Job button via the Datafold Jobs UI - Admin users are now able to cancel CI/CD diff tasks via the Jobs UI in Admin Settings.

- Copy Data Diff Configuration JSON to Clipboard - the info button within the diff results page now contains a button to copy the JSON payload required to create a diff via the REST API.

- Set diff time travel logic at the dbt-model level. For example, if your dev and production tables have known differences due to timing of incremental source data, you can add a time-travel configuration to ignore the most recent data, preventing false positives in CI/CD. Learn more about time travel here and more about dbt metadata configuration here.

Other Improvements

- Catalog search improvements to weight exact-text matches more aggressively, and hide less relevant results.

- Datafold CI/CD integration now populates a list of deleted dbt models within the pull request comments.

- Improved lineage support for dbt-based Hightouch models

July 7, 2022

Popularity counters in Lineage

To help you understand how frequently the assets in your warehouse are used, Lineage now displays an absolute access count per table and column for the last 7 days. To help you interpret that count, Lineage also assigns a popularity rating from 0 to 4 that indicates how popular a database object is relative to others.
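The changelog doesn't spell out how the 0 to 4 rating is computed. As one plausible scheme (an assumption, not Datafold's formula), the Python sketch below ranks each object's 7-day access count among all objects and maps that rank into five equal buckets.

```python
from bisect import bisect_left
from typing import Dict


def popularity_ratings(access_counts: Dict[str, int]) -> Dict[str, int]:
    """Assign each object a 0-4 rating from its rank among all access counts.

    Hypothetical scheme: rating = floor(5 * share of objects with a strictly
    lower count). Ties get the same rating; integer math avoids float rounding.
    """
    counts = sorted(access_counts.values())
    n = len(counts)
    return {
        name: bisect_left(counts, count) * 5 // n
        for name, count in access_counts.items()
    }


weekly_access = {"raw_events": 0, "stg_orders": 3, "dim_users": 10, "fct_orders": 50, "fct_revenue": 500}
print(popularity_ratings(weekly_access))
```

Because the rating is relative, the same absolute count can earn a different rating in a busier or quieter warehouse, which matches the "relative to others" framing above.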

Other changes

- For on-premise deployments, we now support data diff in CI for GitHub on-premise servers. To use your own private GitHub server instead of the cloud version (https://github.com), set the `GITHUB_SERVER` environment variable to your GitHub on-prem URL.

- In the app, the BI Settings section has been renamed to “Data Apps” and now includes both Mode and Hightouch integrations.

- Performance improvements to lineage.

- In the Lineage UI, Hightouch models and syncs now link to Hightouch App. This can be configured using the “workspace URL" field in the Hightouch integration settings.

- Visual improvements to data source names and logos in Catalog and Lineage.

- Updated display of long names of tables in Lineage.

- Popularity is now a general filter in Catalog. It can be applied to both tables and columns.

- Data Source and Data App source filters in Catalog are now merged for better search experience.

- Users can now add, remove, and query tags for Mode dashboards, Hightouch models, and Hightouch syncs using GraphQL API.

- Added usage info for tables and columns to GraphQL API.

- CI configurations can now be paused, preventing them from running checks on pull requests.

- Added support for BigQuery’s bignumeric and bigdecimal data types.

- The data source mapper field in the Data Apps create/edit form is now validated after all data sources are mapped.

- In the Data App settings, we’ve added direct links to our documentation.

Bug fixes

- In some cases, data diffs were not canceled after their CI run was canceled, leaving them stuck in WAITING status indefinitely.

June 23, 2022

Multidimensional Alerts (Beta)

Users can use `GROUP BY` in alert queries to dynamically produce several time series at once. Each dimension is named after the values of the dimensional/categorical field(s) of `GROUP BY`; its thresholds and anomaly detection can be configured separately. New time series will appear (and disappear over time) according to the data’s changes without the need to modify a plethora of alerts with `WHERE` filters.

This feature is currently in Beta and is available upon request — please reach out to support@datafold.com to enable it for your organization.
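To picture what a multidimensional alert does, consider an alert query such as `SELECT day, country, COUNT(*) FROM orders GROUP BY day, country`. The Python sketch below (an illustration, not Datafold's alerting engine) splits that query's output into one time series per `country` value and checks each series against its own thresholds. The column names and the `(min, max)` threshold format are hypothetical, and anomaly detection is left out.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Point = Tuple[str, float]  # (day, metric value)


def split_by_dimension(rows: Iterable[Tuple[str, str, float]]) -> Dict[str, List[Point]]:
    """Turn (day, dimension, value) rows from a GROUP BY query into one series per dimension."""
    series: Dict[str, List[Point]] = defaultdict(list)
    for day, dimension, value in rows:
        series[dimension].append((day, value))
    # A dimension value that shows up in new data simply becomes a new series.
    return {dim: sorted(points) for dim, points in series.items()}


def threshold_breaches(
    series: Dict[str, List[Point]], thresholds: Dict[str, Tuple[float, float]]
) -> Dict[str, List[str]]:
    """Return, per series, the days whose value falls outside that series' (min, max)."""
    breaches = {}
    for dim, points in series.items():
        low, high = thresholds.get(dim, (float("-inf"), float("inf")))
        breaches[dim] = [day for day, value in points if not low <= value <= high]
    return breaches


rows = [
    ("2022-06-01", "US", 120.0), ("2022-06-01", "DE", 40.0),
    ("2022-06-02", "US", 8.0), ("2022-06-02", "DE", 42.0),
]
series = split_by_dimension(rows)
print(threshold_breaches(series, {"US": (50.0, 500.0), "DE": (10.0, 100.0)}))
# {'US': ['2022-06-02'], 'DE': []}
```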

Datafold <> Hightouch Integration

Hightouch models and syncs are now discoverable through the Datafold Catalog and visible in Datafold’s Column-Level Lineage - making it possible to trace data from source to activation.

This feature is currently available upon request — please reach out to support@datafold.com to enable it for your organization.

See downstream data applications in PR Comments

Datafold now shows downstream data applications, e.g. Mode reports and Hightouch syncs, that might be affected by a code change.

Data Diff results materialization

Users can now save Data Diff results in their databases for further analysis. Current support is limited to PK duplicates, exclusive PKs, and all value-level differences.

Other changes

- Significantly improved CI-based Data Diff performance for large warehouses with many tables, schemas, etc.

- Metric graphs are now expandable, making comparison more convenient.

- For on-premises deployments: if the environment variable `DATAFOLD_AUTO_VERIFY_SAML_USERS` is set to "true", users created during SAML sign-up do not have to verify their email addresses.

- Improved display of the value match indicator in the Data Diff → Values tab.

- Reformatted long alert names in the filter popup for readability.

Bug fixes

- Resolved an issue where the Datafold SDK failed to perform the primary key check on manifest.json if the manifest contained tables that had not yet been created in the database.

- Jobs request fails when filters are cleared.

June 9, 2022

Databricks support

You can now add Databricks as a data source, with full support for Data Diff, table profiling, and column-level lineage.

Other changes

- Data Diff sampling thresholds are no longer limited to hardcoded defaults and can now be configured from the UI.

- We updated the Jobs page to make connection types, table names, and runtimes easier to read.

Bug fixes

- Slack and email alert notifications were not delivered for some customers between 2022-05-31 18:00 UTC and 2022-06-07 11:00 UTC (SaaS)

- Profile histograms and completeness info did not render immediately on load.

- Job Source filter did not contain all the possible values that our API can return.

- “Created time” and “last updated time” were not displayed in the list of Jobs.

- Incorrect status in GitLab CI pipelines. The Datafold App no longer blocks a merge if something is wrong with the Datafold App itself.

May 26, 2022

Lineage UI filters

Navigating large lineage graphs is now easier with filters that cut out the noise. Data source, database, and schema filters let you control how much information is displayed.

User group mapping between Datafold and SAML Identity Providers

Organizations using a SAML Identity Provider (Okta, Duo, and others) to authenticate users to Datafold via Single Sign-On can now set up a mapping between SAML and Datafold user groups. When users log in via SAML, they are automatically assigned to the desired Datafold groups according to the pre-configured mapping.

This feature is available on request — please get in touch with Datafold to enable it for your organization.
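Conceptually, such a mapping is a lookup from the group names asserted by the identity provider to Datafold group names. The Python sketch below assumes a hypothetical one-to-many dictionary format and made-up group names; it is not Datafold's actual configuration format.

```python
def map_saml_groups(saml_groups, mapping):
    """Return the sorted Datafold groups a user should join.

    Hypothetical sketch: `saml_groups` are the group names asserted by the
    identity provider at login, and `mapping` maps one SAML group name to a
    list of Datafold group names. SAML groups without an entry are ignored.
    """
    datafold_groups = set()
    for saml_group in saml_groups:
        datafold_groups.update(mapping.get(saml_group, []))
    return sorted(datafold_groups)


if __name__ == "__main__":
    # Made-up group names for illustration only.
    mapping = {
        "okta-data-eng": ["admin"],
        "okta-analysts": ["analysts", "viewers"],
    }
    print(map_saml_groups(["okta-analysts", "okta-marketing"], mapping))
```

Using a set means a user who belongs to several SAML groups that map to the same Datafold group is assigned to it only once.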

Other changes

- Added a method to our SDK that checks the correctness of dbt artifacts submitted to Datafold when using the dbt Core integration. Data Diff can now finish even if something goes wrong while uploading dbt artifacts. See the documentation for details.

- Datafold now shows Slack users and groups in the conventional @-mention form, as in the Slack app.

- SAML validation & configuration errors are now exposed to users so that they can debug their setup.

Bug fixes

- Sometimes the job status is displayed as `notAvailable`.

- BI reports with special characters in names (slashes, hashes, etc.) are not displayed or routed correctly.

- When a BI report's preview fails to download, the loading indicator is displayed forever.

- Multi-word search requests were squashed, omitting spaces.

- Inviting a user who was already in Datafold caused an error with an unclear message. The message now states explicitly that the user has already been invited.

May 12, 2022

Data Diff sampling for small tables disabled by default

To avoid unnecessary overhead, Data Diff sampling is now disabled for smaller tables. For now, the table-size thresholds are hardcoded defaults; a configuration UI is coming. See the documentation for more details.

Other changes

- Alert query columns are automatically classified as time dimension or metric columns, so the time column no longer needs to come first.

- Datafold no longer uses GitLab labels to track the status of the Data Diff process; the status can now be tracked through GitLab CI pipelines.
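A type-based classification is one plausible way column order can stop mattering. The Python sketch below is a hypothetical heuristic, not Datafold's actual rule: it inspects one result row, treats date/datetime values as the time dimension, numeric values as metrics, and anything else (such as strings) as other dimensions.

```python
from datetime import date


def classify_columns(row):
    """Split one result row (column name -> value) into time, metric, and other columns.

    Hypothetical heuristic: date/datetime values mark the time dimension
    (datetime is a subclass of date), numbers mark metrics, and everything
    else (e.g. strings) is kept as a dimension.
    """
    time_cols, metric_cols, other_cols = [], [], []
    for name, value in row.items():
        if isinstance(value, date):
            time_cols.append(name)
        elif isinstance(value, (int, float)) and not isinstance(value, bool):
            metric_cols.append(name)
        else:
            other_cols.append(name)
    if len(time_cols) != 1:
        raise ValueError(f"expected exactly one time column, found {time_cols}")
    return time_cols[0], metric_cols, other_cols


if __name__ == "__main__":
    # The time column is not first, yet it is still detected.
    print(classify_columns({"revenue": 10.5, "day": date(2022, 5, 1), "orders": 3}))
```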

Bug fixes

- Issue with include and exclude columns in diffs

- Off-chart dependencies of the in-focus table in Lineage are now displayed (and act) correctly as "Show more" → Change direction of Lineage

- The Settings menu item in the Admin section is sometimes not rendered correctly

- Catalog search by one- and two-letter words does not work

- Rows with NULL primary keys always got filtered out during data diff if sampling had been enabled

April 28, 2022

Data Diff filters can be configured in the dbt model YAML

Now you can configure Data Diff filter defaults in dbt model YAML. Filtering can be used to force Data Diff to compare only a subset of data; for example, you may want to compare just the latest week to save DWH resources and reduce diff execution time. See the documentation for details.

Other changes

- When you select a column and its connected nodes in Lineage, an indicator now appears that also lets you exit the selected-path mode. Exiting by clicking on empty space is deprecated.

- Sections of Datafold's GitHub/GitLab output are now folded to save screen space. They can easily be unfolded to check verbose diff information.

- Test notifications now show actual Slack error codes, so users can debug their Slack-Datafold integration.

- Datafold now sends a confirmation email when SAML users are auto-created.

- Lineage now shows all columns of a table that exist in the database, not only those with connections detected by Lineage.

- Improved the autocomplete feature in Data Diff.

Bug fixes

- API key is not copied to the clipboard with the input's built-in copy tool

- Cell data in Data Diff Sampling tab is not copied from the popover

- Sometimes NaN appears instead of alert weekly estimates.

- Disabled users logging in through OAuth no longer raise an error.

April 20, 2022

You can now receive Alert notifications via arbitrary webhooks with custom payloads (including, but not limited to, JSON) in addition to Slack and email notifications. See the documentation for details.

This feature is available only on request — please contact Datafold to enable it for your organization.

For API-first users, errors from all API endpoints are now unified according to RFC 7807 and share the same structured JSON payload, and 4xx HTTP status codes have been normalized in most cases. This should simplify parsing error messages, for example those caused by invalid input or incompatible configuration. Some UI error messages that were previously vague are now more descriptive.
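RFC 7807 ("Problem Details for HTTP APIs") defines the standard members `type`, `title`, `status`, `detail`, and `instance`, with `type` defaulting to `"about:blank"` when absent, and allows APIs to add extension members. A minimal Python sketch of a client-side parser follows; which extension members Datafold adds is not stated here, so the sketch collects any extras generically, and the sample payload is made up.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Dict, Optional

# Member names defined by RFC 7807, section 3.1.
STANDARD_MEMBERS = {"type", "title", "status", "detail", "instance"}


@dataclass
class Problem:
    type: str = "about:blank"  # RFC 7807 default when "type" is absent
    title: Optional[str] = None
    status: Optional[int] = None
    detail: Optional[str] = None
    instance: Optional[str] = None
    extensions: Dict[str, Any] = field(default_factory=dict)


def parse_problem(body: str) -> Problem:
    """Parse an application/problem+json body into a Problem.

    Non-standard members are kept in `extensions`, since RFC 7807 lets
    APIs add their own fields.
    """
    data = json.loads(body)
    return Problem(
        type=data.get("type", "about:blank"),
        title=data.get("title"),
        status=data.get("status"),
        detail=data.get("detail"),
        instance=data.get("instance"),
        extensions={k: v for k, v in data.items() if k not in STANDARD_MEMBERS},
    )


if __name__ == "__main__":
    # Made-up payload for illustration; not an actual Datafold error message.
    body = '{"title": "Bad Request", "status": 400, "detail": "Invalid column name"}'
    problem = parse_problem(body)
    if problem.status is not None and 400 <= problem.status < 500:
        print(f"Client error {problem.status}: {problem.title}: {problem.detail}")
```

Because every endpoint shares this shape, a client needs only one error-handling path instead of per-endpoint parsing.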

Other changes

- A new API endpoint, `/api/v1/dbt/check_artifacts/{ci_id}`, checks for dbt artifacts after they are uploaded. It can be called during a CI process, for example in GitHub Actions or GitLab CI, to help Datafold understand the status of downstream tasks.

- Improved performance of dataset suggestions in Data Diff, which are now search-based.
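A CI step could call the new endpoint with Python's standard library, as sketched below. Only the path comes from this changelog; the host `https://app.datafold.com`, the GET method, and the `Authorization: Key <api key>` header format are assumptions to verify against the Datafold API documentation, and the response schema is not described here.

```python
import json
import urllib.parse
import urllib.request

# Assumptions, not confirmed by this changelog: the SaaS host, the GET method,
# and the "Key <api key>" Authorization header format.
DATAFOLD_HOST = "https://app.datafold.com"


def build_check_artifacts_request(ci_id, api_key, host=DATAFOLD_HOST):
    """Build (but do not send) a request to /api/v1/dbt/check_artifacts/{ci_id}."""
    path = "/api/v1/dbt/check_artifacts/" + urllib.parse.quote(str(ci_id), safe="")
    return urllib.request.Request(
        urllib.parse.urljoin(host, path),
        method="GET",
        headers={"Authorization": f"Key {api_key}"},
    )


def check_artifacts(ci_id, api_key, host=DATAFOLD_HOST):
    """Send the request and decode the JSON response (performs network I/O)."""
    request = build_check_artifacts_request(ci_id, api_key, host)
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Building the request separately from sending it keeps the URL and header logic testable without network access; in a pipeline, `check_artifacts` would run after the artifact upload step.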

Bug fixes

- Lineage off-chart dependencies for upstream nodes not displayed

- Snowflake table/column casing issues are resolved

- Special characters are now properly handled in data source names

- Table profiling will not be done for disabled data sources

- Lineage column selection dropped after table expansion

- Jobs UI now shows main jobs instead of result sub-jobs for profiling and data diffs

- Off-chart edge switches lineage direction for primary table

- Redirect to lineage from profile was sometimes broken

April 4, 2022

Refactored navigation design

Other changes

- Improved formatting of integers for column profiles in Data Diff

- Profile now displays the column list, column descriptions, and tags even when profiling is disabled

- Added excludes/includes support to GraphQL search endpoint

Bug fixes

- Fix: lineage not expanding a second time

- Fix: last run filter in search showing numbers instead of days/weeks

- Fix: expanding lineage showing incomplete list of tables

- Fix: incorrect sorting in a primary key block in the Data Diff UI

- Fix: ability to navigate to data source creation dialog with non-confirmed e-mail

March 29, 2022

SAML

Organizations can now use any SAML Identity Provider to authenticate users to Datafold via Single Sign-On. This includes Google, Okta, Duo, and many others, including private/corporate identity providers.

Other changes

- During CI runs, data diff jobs will automatically select a created_at or updated_at column with an appropriate timestamp type as the time dimension

- Catalog search has been improved in both performance and result ranking

- Superseded tags that were automatically created during dbt runs are now periodically removed

- A custom database can be specified for Lineage metadata in Snowflake sources
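The automatic time-dimension selection described above can be sketched as a name-and-type check over a table's columns. In this hypothetical Python sketch, the set of accepted type names and the preference for `created_at` over `updated_at` are assumptions; the changelog does not say which column wins when both qualify.

```python
from typing import Dict, Optional

# Hypothetical: type names treated as "appropriate timestamp types"; exact names vary by warehouse.
TIMESTAMP_TYPES = {
    "timestamp",
    "timestamp_ntz",
    "timestamp_ltz",
    "timestamp_tz",
    "timestamp with time zone",
    "timestamp without time zone",
    "datetime",
}
# Hypothetical preference order between the two candidate columns.
CANDIDATES = ("created_at", "updated_at")


def pick_time_dimension(columns: Dict[str, str]) -> Optional[str]:
    """Return the first candidate column whose type is timestamp-like, else None.

    `columns` maps column name -> database type name, e.g. {"CREATED_AT": "TIMESTAMP_NTZ(9)"}.
    Matching is case-insensitive and ignores precision suffixes such as "(9)".
    """
    by_lower_name = {name.lower(): name for name in columns}
    for candidate in CANDIDATES:
        name = by_lower_name.get(candidate)
        if name is None:
            continue
        base_type = columns[name].split("(")[0].strip().lower()
        if base_type in TIMESTAMP_TYPES:
            return name
    return None
```

For example, a table whose `CREATED_AT` is stored as `VARCHAR` but whose `UPDATED_AT` is `TIMESTAMP_NTZ(9)` would get `UPDATED_AT` as its time dimension.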

Bug fixes

- Masked fields in Snowflake data sources could cause errors when materializing temporary tables

- Disabled users could not be re-enabled

- Posting labels to GitLab triggered notifications when there were no changes

- Table profiling failing for views in PostgreSQL data sources

March 8, 2022

New Lineage UI

The lineage UI was updated to improve the performance for large graphs and to make exploring dependencies more intuitive. Among other changes, the view now distinguishes between upstream and downstream graph directions, and filter settings have moved to the top to provide a larger area for the lineage canvas.

Improved Slack alert messages

To make anomaly notifications more actionable, they now include the alert name and the actual value, and provide more context about the anomaly that occurred.

Reduced verbosity for new tables in the Data Diff CI output

When a PR creates new tables, their block now shows only the number of rows and columns, along with a link to the table profile.

Other changes

- Automatically created tags from ETL are now cleaned up automatically after their initial use to reduce tag clutter

Bug fixes

- BI dashboards stopped displaying in the catalog

- Added missing icons of BI data sources

- Lineage paging stopped loading off-chart dependencies

- GitHub refresh button didn’t work correctly

- dbt metadata synchronization for dbt older than 1.0.0 in combination with Snowflake didn’t work correctly

February 21, 2022

Fine-grained control of what data assets show up in Datafold