Unlocking data quality with automated regression testing

Learn why automated regression testing matters in analytics engineering, and how top-tier data teams borrow this software engineering practice to protect data quality and work more efficiently.

Software engineers have done a great job automating their daily work. With CI/CD pipelines and sophisticated workflows, a single code change can trigger entire testing and deployment suites. Developers are more productive than ever, and the automation behind that productivity is spreading to other disciplines.

Today’s forward-thinking data practitioners test rigorously and look downstream before deploying changes to production. They check whether a minor change will affect dashboards, compare datasets between pre-production and production, and validate that data is replicated accurately across multiple platforms.

This is where analytics engineering is headed. Data changes far too fast for its integrity and quality to be managed manually. And because decisions depend on high-quality data, data professionals should be leaning on automated solutions to maintain quality and speed without disruption.

In this article, we’ll take a closer look at how data professionals can leverage the software engineering practice of automated regression testing in their workflows.

Why automated regression testing matters

One of the most frustrating aspects of data engineering is when a simple change breaks something downstream. Datafold’s CEO experienced this firsthand as a founding engineer at Lyft several years ago. During a production incident, he deployed a two-line SQL hotfix that led to misreported numbers, halted ML applications, and decisions made on bad data. Automated regression testing (with data diffing and column-level lineage) would have caught this problem before it shipped.

Regression testing is when you retest existing features to make sure they still work after a code change. In the software world, these tests are typically done by software developers and quality assurance teams. Depending on the change, regression tests might be done manually, with automation, or a combination of both. Ultimately, regression testing answers the question: “Did I break something or make something less usable by touching the code?”

Regression testing differs from the classic assertion-based approach we typically see in data analytics. For example, a dbt (Data Build Tool) practitioner might say, “All of my primary keys must be unique and not null,” or, “No values in this table should be above $100.” Those tests are helpful, but they only check conditions you already anticipated; they can’t catch changes you didn’t know to look for. Surfacing those unknowns is what regression testing is really about.
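To make the contrast concrete, here is a minimal Python sketch of the two assertions quoted above, run against hypothetical in-memory versions of a table (rows as dicts with assumed `id` and `amount` columns). It is an illustration, not how dbt executes tests. Both the prod version and a dev version whose amounts were silently halved pass every assertion, which is exactly the blind spot regression testing targets.

```python
from typing import Any

Row = dict[str, Any]


def assertion_failures(rows: list[Row], key: str = "id", max_amount: float = 100.0) -> list[str]:
    """dbt-style assertions: primary key unique and not null, amount <= max_amount."""
    failures: list[str] = []
    keys = [row.get(key) for row in rows]
    if any(k is None for k in keys):
        failures.append(f"{key} contains nulls")
    non_null = [k for k in keys if k is not None]
    if len(non_null) != len(set(non_null)):
        failures.append(f"{key} is not unique")
    over = [row[key] for row in rows if row.get("amount") is not None and row["amount"] > max_amount]
    if over:
        failures.append(f"amount > {max_amount} for {key} in {over}")
    return failures


# Hypothetical prod and dev versions of the same table.
prod = [{"id": 1, "amount": 40.0}, {"id": 2, "amount": 75.0}]
# A dev change that halves every amount still satisfies every assertion.
dev = [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 37.5}]

print(assertion_failures(prod))  # []
print(assertion_failures(dev))   # []
```

The assertions encode what you already expect; only a comparison between dev and prod would reveal that every amount changed.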

The nuts and bolts of Datafold’s testing process

Let’s say you’re using dbt to update a fct_transactions table. You want to check your dev schema against the production schema to see what’s different. This is a great opportunity for regression testing.

Regression tests can show you that: A) everything is working as expected with no downstream problems, B) there are no unexpected differences between dev and prod, and C) you did your job correctly. Without regression tests, you’re left relying on educated guesses, manual spot checks, and institutional knowledge. That works fine for some people, until it doesn’t.

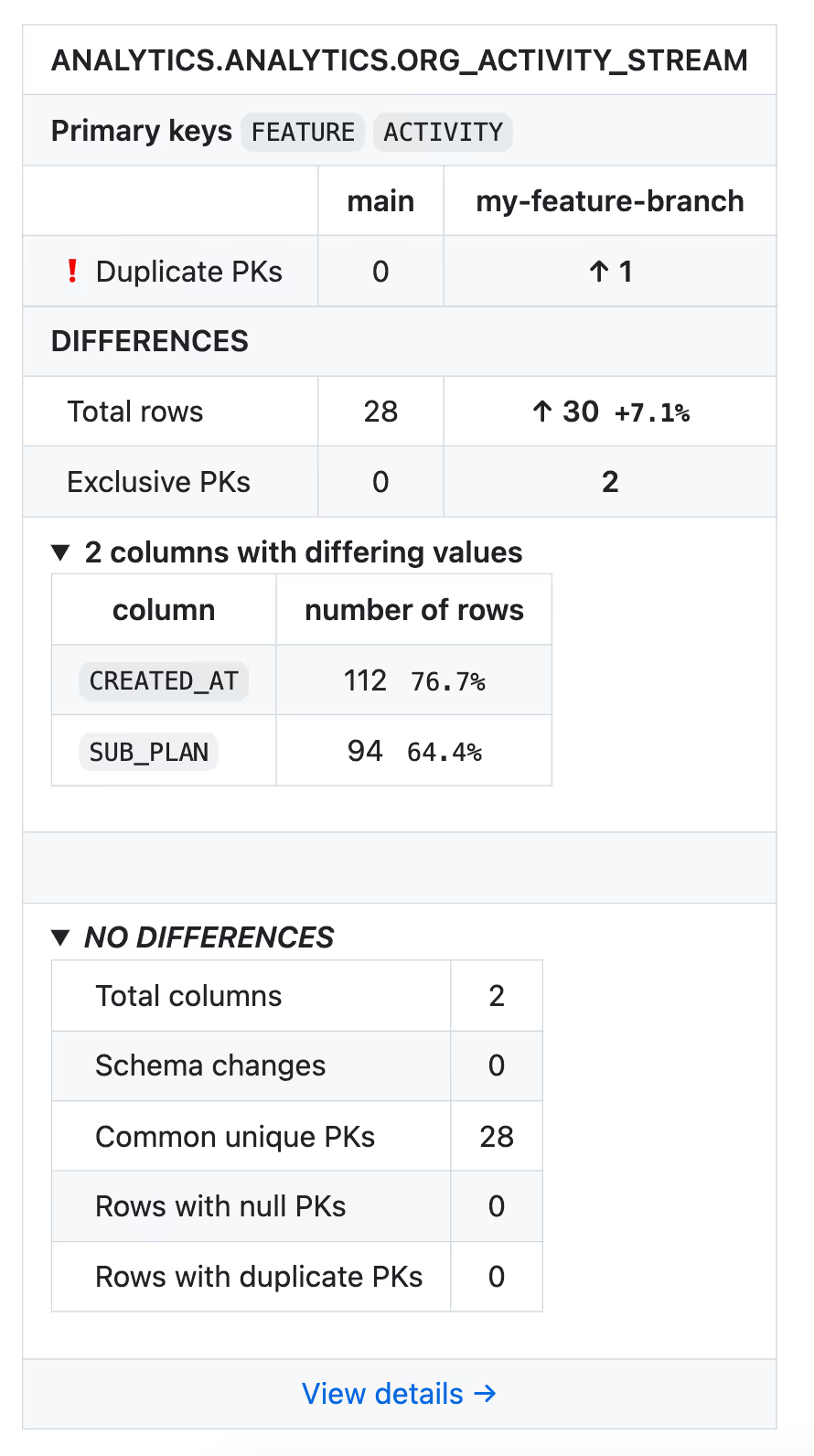

Running automated regression testing with Datafold in your CI (continuous integration) pipeline shows you not only that everything looks as it should, but also exactly which rows and columns differ, directly in your pull request. That includes rows that should exist but don’t, and rows that exist but shouldn’t. Datafold lets you “double-click” into the specifics, giving you exact percentages and other analysis so you can be confident in the changes you’re about to make to your data. Only after those changes are reviewed and accepted can they be merged into production.

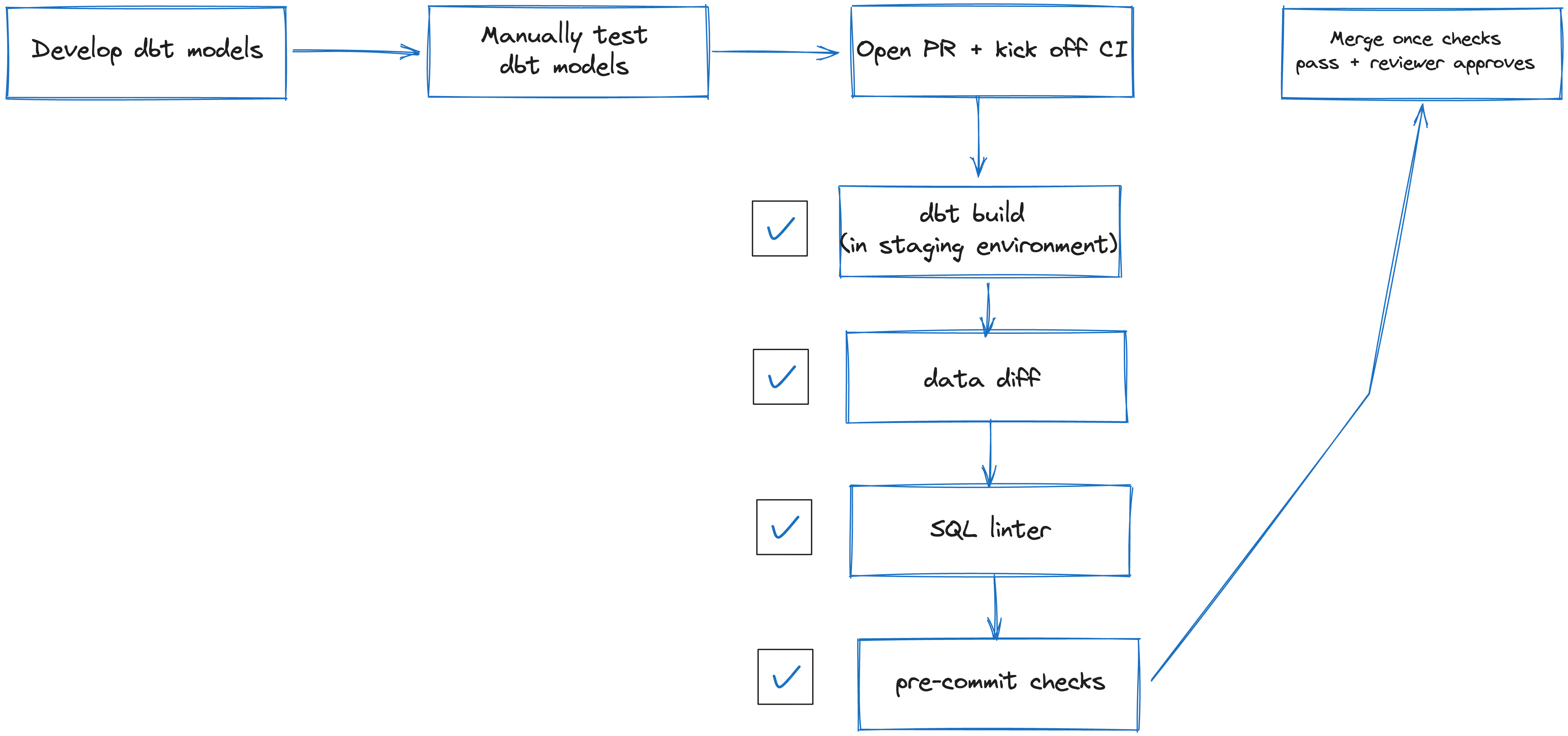
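At its core, a data diff matches rows by primary key and compares values. The sketch below is a simplified, in-memory illustration of that idea, not Datafold’s implementation. It assumes both versions of a table such as fct_transactions fit in memory as lists of dicts, keyed by a hypothetical `id` column with hypothetical `status` and `amount` columns.

```python
from typing import Any

Row = dict[str, Any]


def diff_tables(prod: list[Row], dev: list[Row], key: str) -> dict[str, Any]:
    """Compare two versions of a table row by row, matching rows on a primary key."""
    prod_by_key = {row[key]: row for row in prod}
    dev_by_key = {row[key]: row for row in dev}

    # Rows that exist in prod but are missing from dev, and vice versa.
    missing_in_dev = sorted(prod_by_key.keys() - dev_by_key.keys())
    added_in_dev = sorted(dev_by_key.keys() - prod_by_key.keys())

    # For rows present in both versions, record which keys changed in each column.
    shared = prod_by_key.keys() & dev_by_key.keys()
    columns = sorted({c for row in prod + dev for c in row} - {key})
    mismatches: dict[str, list[Any]] = {c: [] for c in columns}
    for k in sorted(shared):
        for c in columns:
            if prod_by_key[k].get(c) != dev_by_key[k].get(c):
                mismatches[c].append(k)

    return {
        "missing_in_dev": missing_in_dev,
        "added_in_dev": added_in_dev,
        # Percentage of matched rows whose value changed, per column.
        "pct_changed": {
            c: round(100 * len(keys) / len(shared), 1) if shared else 0.0
            for c, keys in mismatches.items()
        },
        "changed_keys": {c: keys for c, keys in mismatches.items() if keys},
    }


prod = [
    {"id": 1, "status": "paid", "amount": 40.0},
    {"id": 2, "status": "paid", "amount": 75.0},
    {"id": 3, "status": "refunded", "amount": 10.0},
]
dev = [
    {"id": 1, "status": "paid", "amount": 40.0},
    {"id": 2, "status": "paid", "amount": 70.0},
    {"id": 4, "status": "paid", "amount": 12.0},
]

result = diff_tables(prod, dev, key="id")
print(result["missing_in_dev"])  # [3]
print(result["added_in_dev"])    # [4]
print(result["pct_changed"])     # {'amount': 50.0, 'status': 0.0}
print(result["changed_keys"])    # {'amount': [2]}
```

A production tool would push this comparison into the warehouse as SQL rather than loading rows into memory, but the questions it answers are the same: which rows appeared or disappeared, and which column values changed.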

How CI and automated regression testing work

Many data professionals manage their code with GitHub or GitLab, opening pull requests and reviewing code by hand. CI automates the checks around that process. You can enable CI so every commit and PR follows the exact same testing protocol for your team, including data diffing and regression testing. During CI, data teams will usually build their changed dbt models (à la Slim CI), run dbt tests on them, run Datafold data diffs, and lint their SQL. This ensures that the impact of every code change is documented, approved, and understood in a standardized way.
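The CI sequence described above can be sketched as a fail-fast runner. This is a hypothetical script, not Datafold’s CI integration. The dbt flags (`--select state:modified+`, `--defer`, `--state`) and `sqlfluff lint` are real CLI options, but the `prod-artifacts` directory (where the production manifest would live) and the `run_data_diff.py` diff step are placeholders, and each tool is assumed to be already configured for your project.

```python
import subprocess
from typing import Callable, Sequence

# Each step is (name, command). "prod-artifacts" and "run_data_diff.py"
# are hypothetical placeholders for your own paths and diff tooling.
CI_STEPS: list[tuple[str, list[str]]] = [
    ("build changed models", ["dbt", "run", "--select", "state:modified+", "--defer", "--state", "prod-artifacts"]),
    ("test changed models", ["dbt", "test", "--select", "state:modified+", "--defer", "--state", "prod-artifacts"]),
    ("diff dev vs prod", ["python", "run_data_diff.py"]),
    ("lint SQL", ["sqlfluff", "lint", "models"]),
]

Runner = Callable[[Sequence[str]], int]


def default_runner(cmd: Sequence[str]) -> int:
    """Run a command and return its exit code (127 if the executable is missing)."""
    try:
        return subprocess.run(list(cmd), check=False).returncode
    except FileNotFoundError:
        return 127


def run_ci(steps: list[tuple[str, list[str]]], runner: Runner = default_runner) -> bool:
    """Run every step in order and stop at the first failure, so the PR check fails."""
    for name, cmd in steps:
        code = runner(cmd)
        if code != 0:
            print(f"FAILED: {name} (exit code {code})")
            return False
        print(f"passed: {name}")
    return True
```

Injecting the `runner` keeps the ordering logic testable without dbt installed; in a real pipeline, a CI job would call `run_ci(CI_STEPS)` and exit non-zero when it returns `False`.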

Just because a process is automated doesn’t mean teams are uninvolved. CI checks give teams the autonomy and context to approve code changes. This is how software engineers work, only they’re doing application-specific tests and not data diffs or transformation validations.

There’s no one-size-fits-all way to work with automation. Some data teams let CI make the decision on changes, while others have a review board that examines Datafold reports and approves changes after review.

We highly recommend using CI and integrating automated testing workflows for your dbt project. Whenever you commit a change to your dbt repo, you can kick off a suite of tests using dbt and Datafold. The results of these tests can either raise a flag or stop the code change altogether.
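Whether a result merely raises a flag or stops the change is a policy decision. Here is a minimal sketch of such a gate, assuming the diff step produces a mapping from column name to the percentage of rows whose values changed. The `warn_at` and `block_at` thresholds are hypothetical team policy, not defaults of dbt or Datafold.

```python
from enum import Enum


class Verdict(Enum):
    PASS = "pass"
    FLAG = "flag"    # surface the change for human review, but don't block the merge
    BLOCK = "block"  # fail the CI check so the PR can't be merged


def gate(pct_changed: dict[str, float], warn_at: float = 0.0, block_at: float = 5.0) -> Verdict:
    """Turn per-column change percentages from a data diff into a CI verdict.

    Thresholds are hypothetical examples of a team's tolerance for impacted data.
    """
    worst = max(pct_changed.values(), default=0.0)
    if worst >= block_at:
        return Verdict.BLOCK
    if worst > warn_at:
        return Verdict.FLAG
    return Verdict.PASS
```

Keying the decision on the worst-affected column is one simple choice; a team could just as well set per-column thresholds for critical fields such as revenue.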

The maturity angle: Carrying the flag for process and data maturity

Mature data teams are forward-looking and self-aware. They know what they’re good at and where mistakes are likely to happen. Automation is one of the most important tools they have.

As we’ve seen, automated regression testing can be used to test data pipelines and models after every change. Mature data teams typically use automation and regression testing for:

- Improved data quality: Prevent unforeseen regressions and ensure that data pipelines are always producing accurate and consistent data

- Reduced risk: Minimize human error and other sources of bad data in data pipelines

- Increased efficiency: Free up time to focus on more strategic tasks by automating and standardizing testing practices

- Faster time to market: Release new changes to their pipelines quickly and with confidence

- Better data governance: Build data compliance processes and policies directly into their deployment pipelines

Just as improved data quality increases confidence in using data, automation increases confidence in changing pipelines and models. When data analysts are armed with thorough, clear test results, they can make decisions and adjust the parameters that are key to their workflows with more confidence. Datafold lets you understand changes in your data at a very granular level, down to individual rows, and see their downstream impact.
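Downstream impact analysis is essentially a graph traversal over column-level lineage. The sketch below runs a breadth-first search over a hard-coded, hypothetical lineage map; in practice the graph would come from parsing SQL or from a lineage tool, and every table and column name here is made up for illustration.

```python
from collections import deque

# Hypothetical column-level lineage: each column maps to the columns
# (or dashboard fields) that read from it directly.
LINEAGE: dict[str, list[str]] = {
    "fct_transactions.amount": ["agg_daily_revenue.revenue", "dim_customers.lifetime_value"],
    "agg_daily_revenue.revenue": ["dashboard.revenue_chart"],
    "dim_customers.lifetime_value": ["ml_features.ltv"],
    "fct_transactions.status": ["agg_refunds.refund_count"],
}


def downstream_impact(changed: list[str], lineage: dict[str, list[str]]) -> list[str]:
    """Breadth-first search for every column reachable from the changed columns."""
    seen: set[str] = set()
    queue = deque(changed)
    while queue:
        col = queue.popleft()
        for child in lineage.get(col, []):
            if child not in seen:  # the seen set also protects against cycles
                seen.add(child)
                queue.append(child)
    return sorted(seen)


print(downstream_impact(["fct_transactions.amount"], LINEAGE))
# ['agg_daily_revenue.revenue', 'dashboard.revenue_chart', 'dim_customers.lifetime_value', 'ml_features.ltv']
```

Paired with a data diff, this answers both halves of the question: which values changed, and which dashboards and models will see the change.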

There’s also a human factor to CI in data analytics. CI makes code changes simpler and clearer to review, reducing the chances of deploying bad code to production. It can also enforce standards for code quality, documentation, and the acceptable percentage of data a change may affect.

Case study: Real-world implementation

When teams are left to test data changes manually, they can’t comprehensively cover the impact of a change or ensure all dbt code changes are tested the same way. The online marketplace Thumbtack faced this problem while scaling its business. Its team was tracking issues in spreadsheets and spending hours reviewing individual pull requests. Eventually they couldn’t scale their own processes, and their data teams were overwhelmed.

Thumbtack integrated Datafold into their CI pipeline via GitHub and began to validate every SQL code change automatically. The results were significant:

- Quickly increased productivity by 20%

- Saved hundreds of hours of testing and review time every month

- Improved their data quality with Datafold’s column-level lineage

- Increased confidence in data usage and quality

Data teams that build CI workflows increase their maturity in multiple ways. The results are consistent across every implementation. When automation is in place, data teams have less to worry about and can focus on more important work.

Datafold’s impact on automated regression testing

Data teams need a way to guarantee that new code doesn’t break anything. Continuous integration and automated regression testing are the most immediate ways of getting there.

We’re starting to see many traditional software engineering and QA testing practices in the data engineering world, and that’s a good thing. Thorough testing of data has long been left out of the loop, which is why Datafold exists. We fill a fundamental gap in data validation and quality testing, a practice that badly needs to evolve. Classic assertion-based testing isn’t enough: data practitioners need to adopt regression testing and automation, running tests any time a new line of code or a change in logic is introduced.

Automation and data diffing free you from guessing whether a code change does only what it should. They can surface unexpected changes and give you precise details about what’s different between your pre-production and production environments. They can compare two versions of the same table that live in two data warehouses, or validate parity between tables during a data migration. Most of what’s difficult in today’s data work stems from not having enough automation and maturity in place.
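Comparing tables that live in two different warehouses is harder because you can’t join across them. A common technique is to checksum ranges of primary keys on each side and drill into only the ranges whose checksums disagree, bisecting until they are small enough to compare row by row. The sketch below shows that control flow in memory with integer keys and rows as tuples; it is an assumption-laden illustration, not Datafold’s algorithm. In a real migration, each checksum would be an aggregate query run inside each database so that only hashes cross the network.

```python
import hashlib
from typing import Any

Row = tuple[Any, ...]  # (primary_key, *values); keys assumed to be unique integers


def checksum(rows: list[Row]) -> str:
    """Order-independent checksum: hash each row, then hash the sorted digests."""
    digests = sorted(hashlib.sha256(repr(row).encode()).hexdigest() for row in rows)
    return hashlib.sha256("".join(digests).encode()).hexdigest()


def segment(rows: list[Row], lo: int, hi: int) -> list[Row]:
    """Rows whose primary key falls in [lo, hi)."""
    return [r for r in rows if lo <= r[0] < hi]


def diff_keys(a: list[Row], b: list[Row], lo: int, hi: int, min_size: int = 4) -> set[int]:
    """Return primary keys whose rows differ between a and b within [lo, hi).

    Matching segments are skipped; mismatched segments are bisected until
    they span at most min_size keys, then compared row by row.
    """
    seg_a, seg_b = segment(a, lo, hi), segment(b, lo, hi)
    if checksum(seg_a) == checksum(seg_b):
        return set()
    if hi - lo <= min_size:
        # Symmetric difference catches changed, missing, and extra rows.
        return {row[0] for row in set(seg_a) ^ set(seg_b)}
    mid = (lo + hi) // 2
    return diff_keys(a, b, lo, mid, min_size) | diff_keys(a, b, mid, hi, min_size)


# Hypothetical source and migrated target tables.
source = [(k, f"user_{k}", k * 10) for k in range(100)]
target = list(source)
target[42] = (42, "user_42", 999)        # value changed during migration
del target[7]                            # row lost during migration
target.append((150, "user_150", 1500))   # unexpected extra row

print(sorted(diff_keys(source, target, 0, 200)))  # [7, 42, 150]
```

Because identical ranges are pruned after a single checksum comparison, the work concentrates on the few ranges that actually differ, which is what makes this approach practical for large cross-database comparisons.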

The benefits of automated regression testing, continuous integration, and detailed data diffs are available today with Datafold. We give you an unopinionated, clear report of what’s changed in your data and how it affects downstream consumption.
