At the frontier of velocity and data quality

How data teams don't have to sacrifice data quality or deployment velocity

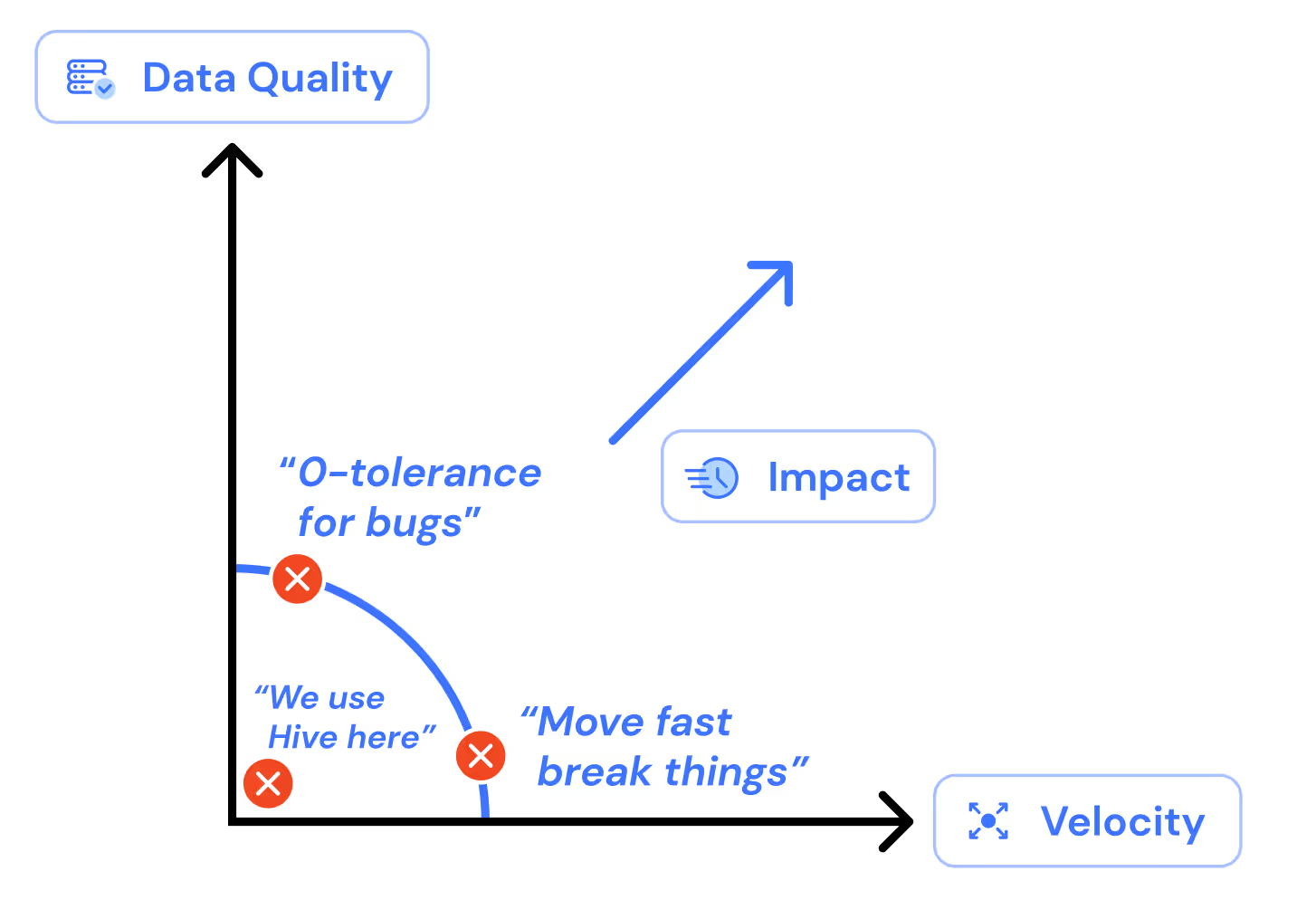

As many data teams enter a new year with constrained budgets, demanding timelines, and a never-ending backlog of data quests, I can't help but think of the problem that has plagued the software development community for years: balancing velocity and quality.

Thousands of smart people have written books and papers on the topic, and Facebook’s “Move fast and break things” motto is now a cliché illustrating a culture that prioritizes velocity at all costs. How does this apply to data engineering, and why should data teams care about it at all? And why does it matter so much for us data practitioners to talk about it now?

Why is velocity important to data teams?

Velocity is the driver of impact. Because data teams exist to power human or machine decisions with data, the faster we ship data products, the more impact we make on the business and as a team. And because we often operate under high uncertainty (e.g., no idea if we can fit a model to this data) and ambiguous requirements (stakeholders think they know what data they want), the faster we ship, the quicker we learn what works and what doesn’t.

Why is data quality important to data teams?

Data quality is a lot of different things and is often hard to measure. Except for ML, where we can objectively measure the performance of a model against ground truth, in most other cases, what we care about is trust. If data users don’t trust the data, they stop relying on it for decision-making, which negates the impact of the data team. When incorrect data is used to make an important decision, that impact can go below zero.


At the frontier of velocity and data quality

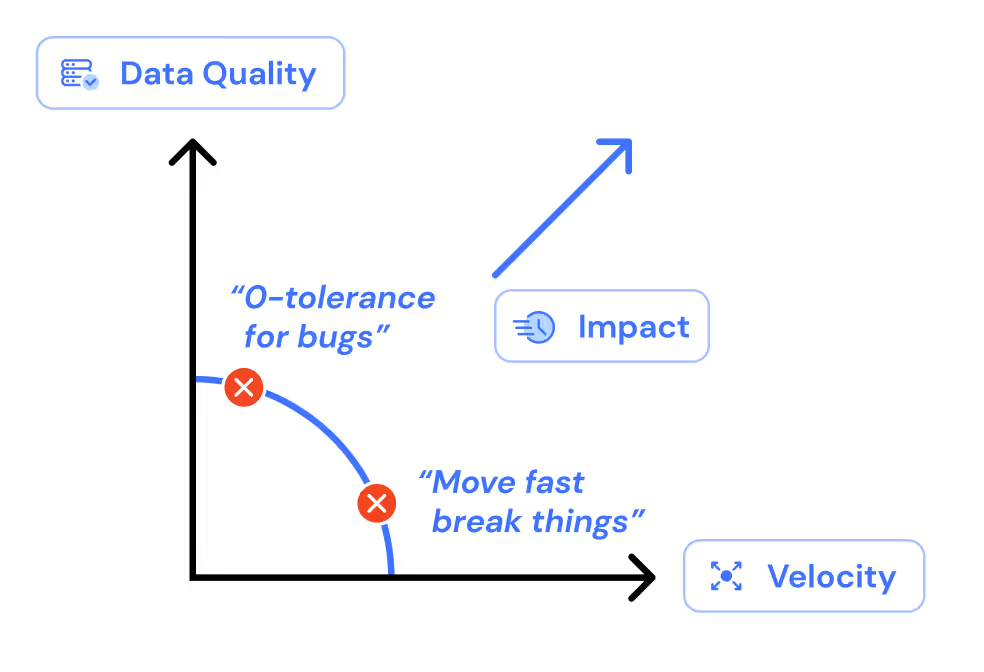

The trade-off between velocity and data quality is obvious. We can spend a lot of time validating our work, asking our peers to review our code, submitting it for review to a change management council, etc., all to minimize the chance of making a mistake. Or we can merge our changes directly into production.


But intuitively, we understand this trade-off is not binary, and we’re most often not choosing between the extremes. Borrowing from microeconomics, we can express this trade-off as a frontier.

I should credit the excellent data team at FINN with this concept, as they are the first team I met who uses that mental model to guide their data platform strategy!

The archetypical example is a data team of one in a small startup shipping as fast as possible with no testing: the gains of having some insight, even if only directionally correct, outweigh the gains of comprehensive testing under resource and space constraints. At the other extreme is the data team at a large bank, where a “Change Council” only approves pull requests after a lengthy review.

A data team maximizes its impact by shipping as fast as possible with the highest possible data quality, but in reality, the frontier defines what is possible. Our choices about how we develop, test, and deploy our code determine where we sit on the frontier. When we operate as a team, those choices are codified in team guidelines, such as:

- Each new dbt model’s primary key should have uniqueness and not-null tests

- At least one other data engineer should review each new PR

Together, these guidelines determine where the team operates on the frontier.
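Guidelines like the first one can be enforced by a script rather than by memory. As a minimal sketch, the check below flags models whose primary key lacks dbt's built-in `unique` and `not_null` tests. `ModelSpec` and `guideline_violations` are hypothetical names, and `ModelSpec` is a simplified stand-in for a model's configuration, not dbt's actual manifest format.

```python
from dataclasses import dataclass, field


@dataclass
class ModelSpec:
    """Hypothetical, simplified view of one dbt model's schema config."""
    name: str
    primary_key: str
    # Maps column name -> set of test names declared on that column.
    column_tests: dict[str, set[str]] = field(default_factory=dict)


# Names of dbt's built-in generic tests required on every primary key.
REQUIRED_PK_TESTS = {"unique", "not_null"}


def guideline_violations(models: list[ModelSpec]) -> list[str]:
    """Return one message per model whose primary key lacks a required test."""
    violations = []
    for model in models:
        declared = model.column_tests.get(model.primary_key, set())
        missing = REQUIRED_PK_TESTS - declared
        if missing:
            violations.append(
                f"{model.name}.{model.primary_key} is missing: {', '.join(sorted(missing))}"
            )
    return violations


models = [
    ModelSpec("orders", "order_id", {"order_id": {"unique", "not_null"}}),
    ModelSpec("customers", "customer_id", {"customer_id": {"unique"}}),
]
print(guideline_violations(models))  # ['customers.customer_id is missing: not_null']
```

Running a check like this in CI turns the guideline from a convention reviewers must remember into a rule every PR is held to.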

And moving along the frontier allows the team to pick an optimal quality-velocity trade-off.

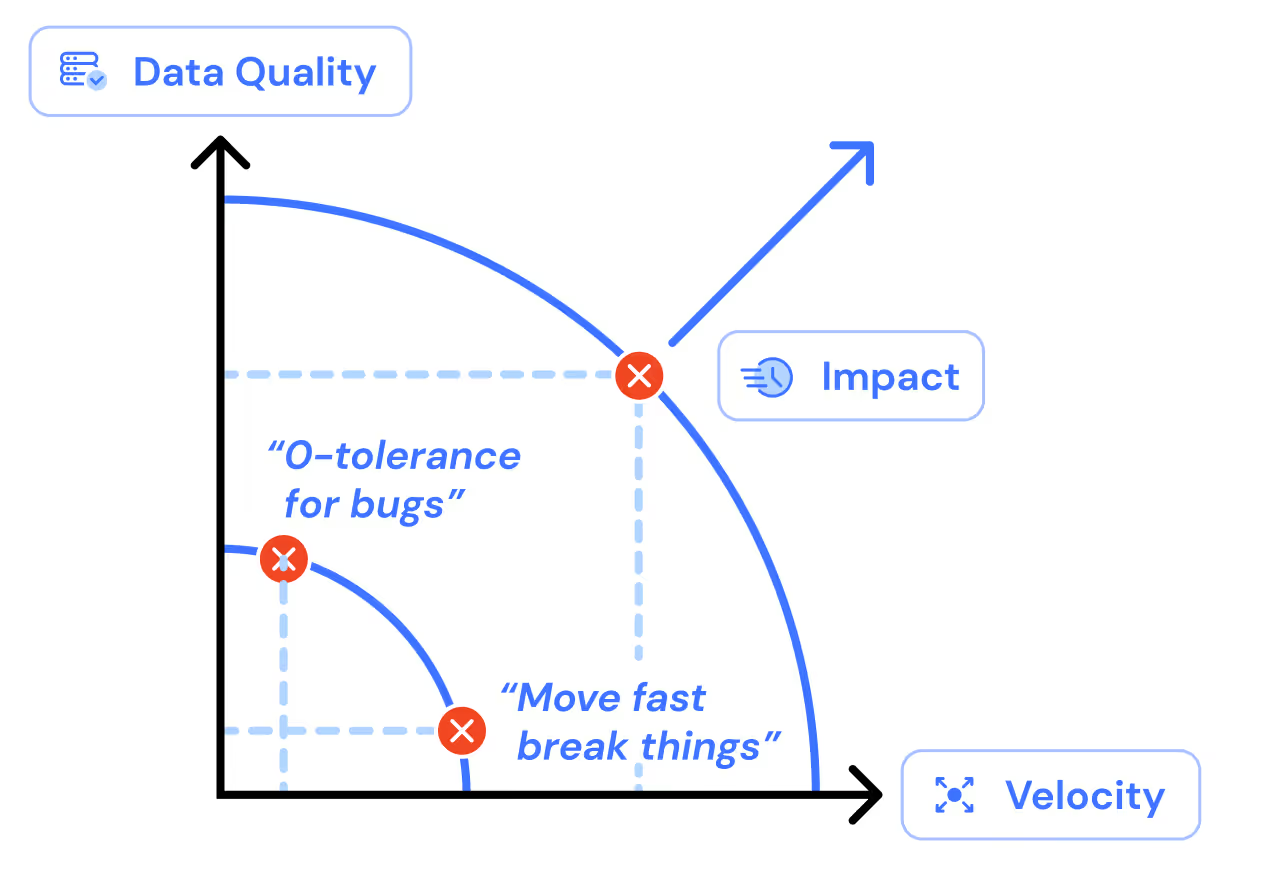

But you know what’s much, much better? Moving the frontier itself!

Moving the frontier itself up and to the right unlocks far more interesting equilibria: at the same level of quality, we can ship with much higher velocity. And vice versa: the “move fast and break things” team can now keep its velocity while reaching previously unthinkable data quality.

What moves the frontier?

Just as with the Production Possibility Frontier in economics, what moves the frontier is technology. The plow, the steam engine, and electricity all massively pushed the production frontier outward, allowing us to produce more of everything at greater speed.

Similarly for us data practitioners, the frontier is determined by our tools.

And just as tools can hinder our ability to ship quality work fast...

They can also massively improve our impact!

Luckily, the Modern Data Stack, resting on a dbt/Dagster and Snowflake/Databricks/BigQuery foundation, provides a much better (further up-and-to-the-right) frontier than legacy technologies. In this world, our queries run (and fail) reasonably fast, and we don’t have to worry about corrupted partitions, “small files”, or silent failures from inserting strings into float columns (recovering Hive user here).

However, such a stack alone does not put a team on the Best frontier, the furthest up-and-to-the-right that modern technology allows.

What differentiates the teams operating on the Best frontier is a mature change management process.

Changes to what? Changes to the code that processes data, e.g., dbt SQL. And while change management may not sound cool, it is, in fact, what we as data practitioners do all day: we write code, and we ship some of it to production. That’s how we introduce changes (and bugs).

The three components of a great data change management process are:

- Separate development and production environments with representative data

- Continuous Integration and Deployment (CI/CD) for data code

- Automated testing during development and deployment

Develop, test, and deploy in separate environments

Developing and deploying your code changes in separate environments lays the foundation for robust CI processes and integrated automated testing. One big caveat: these environments must use representative data. When you develop and test against representative data, you replicate the reality of your production environment and gain a firm understanding of how your code changes will actually change the data. Modern data tooling like dbt and Snowflake makes developing, staging, and deploying in separate environments with representative data much easier than it was in the past.
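When full production copies are too large for development, one possible route to representative data is deterministic sampling by entity key. The helpers below (`in_sample`, `sample_rows`) are a hypothetical sketch, not a dbt or Snowflake feature. Hashing the key means every table sampled on the same key (here `customer_id`) keeps the same entities, so joins between sampled tables still line up.

```python
import hashlib


def in_sample(key: str, rate: float, salt: str = "dev-sample") -> bool:
    """Deterministically decide whether an entity key belongs to the dev sample.

    The same key always yields the same answer, so sampling several tables on
    the same entity key keeps related rows together.
    """
    digest = hashlib.sha256(f"{salt}:{key}".encode()).digest()
    # Keep the top 53 bits so the bucket is an exact float in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < rate


def sample_rows(rows: list[dict], key_column: str, rate: float) -> list[dict]:
    """Keep the rows whose entity key falls inside the sample."""
    return [row for row in rows if in_sample(str(row[key_column]), rate)]


customers = [{"customer_id": i} for i in range(1000)]
orders = [{"order_id": i, "customer_id": i % 1000} for i in range(5000)]
dev_customers = sample_rows(customers, "customer_id", 0.1)
dev_orders = sample_rows(orders, "customer_id", 0.1)

# Every sampled order still points at a sampled customer.
kept = {c["customer_id"] for c in dev_customers}
print(len(dev_customers), all(o["customer_id"] in kept for o in dev_orders))
```

Sampling rows independently per table would break this: most sampled orders would reference customers that never made it into the dev sample.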

CI/CD

Continuous integration and continuous delivery/deployment enable data teams to build, test, and deploy new changes in an automated and governed fashion. A robust CI process is the backbone of a mature change management process.

In a typical CI process, data teams should automatically:

- Check that their data models build properly (no compilation errors)

- Verify that data models pass basic assertion tests and requirements (e.g., no primary keys are null or duplicated)

- Test for unforeseen regressions through a data diff

- Optionally, run a SQL linter to ensure code changes meet organizational standards
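The steps above can be sketched as an ordered list of checks that a CI job runs on every PR, failing the job (non-zero exit code) if any check fails. This is a minimal, hypothetical runner, not a specific CI product's API; the stand-in lambdas mark where real steps would go. A real pipeline might, for instance, wrap `dbt build`, which both builds models and runs their tests.

```python
import subprocess
from typing import Callable

# A CI check is a human-readable name plus a function returning True on success.
Check = tuple[str, Callable[[], bool]]


def command_succeeds(cmd: list[str]) -> bool:
    """Run a command (e.g. ["dbt", "build"]) and report whether it exited with status 0."""
    return subprocess.run(cmd).returncode == 0


def run_ci(checks: list[Check]) -> tuple[int, list[str]]:
    """Run checks in order, stopping at the first failure.

    Later checks are reported as skipped, since tests and diffs are
    meaningless if the models failed to build. Returns (exit_code, report).
    """
    report = []
    for i, (name, check) in enumerate(checks):
        if check():
            report.append(f"PASS: {name}")
        else:
            report.append(f"FAIL: {name}")
            report.extend(f"SKIP: {later}" for later, _ in checks[i + 1:])
            return 1, report
    return 0, report


# Stand-in checks; a real job might use lambda: command_succeeds(["dbt", "build"]).
checks = [
    ("build models", lambda: True),
    ("assertion tests", lambda: True),
    ("data diff has no unexpected changes", lambda: False),
    ("SQL lint", lambda: True),
]
exit_code, report = run_ci(checks)
print("\n".join(report))
```

Stopping at the first failure is a design choice: it keeps the report focused on the earliest broken step rather than on cascading failures.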

An effective CI process delivers on both axes of the frontier:

- Removes time spent on manual testing and reviews: By adding automatic checks to the CI process, we reduce the need for extensive manual testing and ad hoc SQL spot checks. Reviewers can see whether tests pass and decide more quickly whether changes can be approved, saving the team valuable time.

- Establishes a layer of governance: With checks in our CI process, every PR undergoes the same tests and scrutiny. This creates a consistent data quality testing layer that scales as our dbt project grows.

When you combine these two points, you adopt practices that naturally enforce higher data quality: if a PR doesn’t compile properly, don’t merge it! If a data diff reveals an unexpected data change, investigate it before it reaches the hands of a BI tool user!
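These two rules can be written as a small merge gate. In this sketch, which assumes the data diff has been summarized as column name to number of changed rows, the PR author declares which columns are meant to change, and anything else the diff touches is flagged for investigation. All names here are hypothetical.

```python
def unexpected_changes(changed_columns: dict[str, int], declared: set[str]) -> dict[str, int]:
    """Return changed columns the PR author did not declare as intentional.

    changed_columns maps column name -> number of rows whose value changed.
    """
    return {col: n for col, n in changed_columns.items() if n > 0 and col not in declared}


def merge_decision(compiled: bool, changed_columns: dict[str, int], declared: set[str]) -> str:
    """Never merge code that fails to compile; investigate unexpected data changes first."""
    if not compiled:
        return "block: PR does not compile"
    surprises = unexpected_changes(changed_columns, declared)
    if surprises:
        return "investigate: unexpected changes in " + ", ".join(sorted(surprises))
    return "ok to merge"


# The PR intends to change revenue logic, but the diff also touched customer_name.
diff_summary = {"revenue": 120, "discount": 120, "customer_name": 3}
print(merge_decision(True, diff_summary, declared={"revenue", "discount"}))
# investigate: unexpected changes in customer_name
```

An unexpected change is not necessarily a bug, so the gate asks for investigation rather than blocking outright.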

Modern data tools like dbt integrate closely with git providers like GitHub and GitLab, making CI pipelines easy to create and maintain.

Automated testing

There are two primary testing formats we see data teams use in their change management process: assertion-based tests and regression testing with data diffs.

- Assertion tests validate your most critical assumptions about your data. These will likely catch about 1% of your data quality issues.

- Data diffs identify regressions, both expected and unexpected, that are introduced by your code changes. Data diffs can identify the other 99% of issues not captured by assertion tests.

Why differentiate between these tests? A few reasons.

Assertions need to be written and curated manually, which does not scale to hundreds or thousands of data models. We should define them for the most critical columns, metrics, and assumptions. For example, all primary key columns should be unique and not null, and every intermediate model should maintain referential integrity with its upstream model.
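To show what those two example assertions actually check, here is a minimal in-memory sketch. In practice dbt's built-in `unique`, `not_null`, and `relationships` tests express these as SQL against the warehouse; the Python functions below are hypothetical illustrations of the same logic on a few rows, not dbt's implementation.

```python
from collections import Counter


def check_primary_key(rows: list[dict], key: str) -> list[str]:
    """Similar in spirit to dbt's `unique` + `not_null` tests on one column."""
    failures = []
    values = [row.get(key) for row in rows]
    nulls = sum(1 for v in values if v is None)
    if nulls:
        failures.append(f"{key}: {nulls} null value(s)")
    counts = Counter(v for v in values if v is not None)
    dupes = sorted(v for v, n in counts.items() if n > 1)
    if dupes:
        failures.append(f"{key}: duplicated values {dupes}")
    return failures


def check_referential_integrity(child: list[dict], fk: str, parent: list[dict], pk: str) -> list[str]:
    """Similar in spirit to dbt's `relationships` test: every non-null fk must exist in parent."""
    parent_keys = {row[pk] for row in parent}
    orphans = sorted({row[fk] for row in child if row[fk] is not None and row[fk] not in parent_keys})
    return [f"{fk}: orphaned values {orphans}"] if orphans else []


customers = [{"customer_id": 10}, {"customer_id": 11}]
orders = [
    {"order_id": 1, "customer_id": 10},
    {"order_id": 1, "customer_id": 11},     # duplicated primary key
    {"order_id": None, "customer_id": 12},  # null primary key, unknown customer
]
print(check_primary_key(orders, "order_id"))
# ['order_id: 1 null value(s)', 'order_id: duplicated values [1]']
print(check_referential_integrity(orders, "customer_id", customers, "customer_id"))
# ['customer_id: orphaned values [12]']
```

Each assertion encodes one explicit business assumption, which is exactly why they have to be written by hand for each critical column.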

Data diffs, on the other hand, cover the other 99% of issues by showing how a code change impacts the data. Unlike assertions, a data diff is domain-agnostic: it does not tell you whether the data violates your business assumptions, but it gives you full visibility into the changes, which is key to identifying any potential regression.
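To make the idea concrete, here is a minimal in-memory sketch of a keyed data diff: it matches rows from the production and development versions of a table by primary key and reports added, removed, and changed rows, plus a per-column count of changed rows. The function names are hypothetical, and a real data diff tool would need to do this inside the warehouse across millions of rows rather than in Python lists.

```python
from collections import Counter


def data_diff(before: list[dict], after: list[dict], key: str) -> dict:
    """Compare two versions of a table row by row, matched on a primary key.

    Assumes the key is unique in both versions (i.e. the assertion tests pass).
    """
    old = {row[key]: row for row in before}
    new = {row[key]: row for row in after}
    changed = {}
    for k in sorted(old.keys() & new.keys()):
        cols = sorted(c for c in old[k].keys() | new[k].keys() if old[k].get(c) != new[k].get(c))
        if cols:
            changed[k] = cols
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": changed,  # key -> list of columns whose value differs
    }


def column_change_counts(diff: dict) -> dict[str, int]:
    """Summarize a diff as column name -> number of rows whose value changed."""
    return dict(Counter(c for cols in diff["changed"].values() for c in cols))


prod = [
    {"id": 1, "amount": 10, "status": "paid"},
    {"id": 2, "amount": 20, "status": "paid"},
    {"id": 3, "amount": 5, "status": "refunded"},
]
dev = [
    {"id": 1, "amount": 10, "status": "paid"},
    {"id": 2, "amount": 25, "status": "paid"},
    {"id": 4, "amount": 7, "status": "paid"},
]
diff = data_diff(prod, dev, "id")
print(diff)  # {'added': [4], 'removed': [3], 'changed': {2: ['amount']}}
print(column_change_counts(diff))  # {'amount': 1}
```

Note that nothing in `data_diff` knows what `amount` or `status` mean; it simply surfaces every difference, leaving the reviewer to judge which ones are intended.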

If we implement these tests during our CI processes, we can automatically ensure every dbt model change is tested the way we expect, gain transparency into how our code changes actually impact the data, and enable our teams to review and merge PRs with greater speed and confidence: the midpoint of the frontier!

Getting started on pushing the frontier

Separate development and deployment environments allow us to build, test, and deploy with greater organization and speed.

CI/CD pipelines ensure every code change undergoes the same level of scrutiny, and automates away tedious manual testing.

Automated testing with data diffs allows us to find unexpected data quality issues that traditional assertion tests will never capture, increasing transparency into the impact of our code changes and letting us test our data faster.

When we implement these practices and technologies, we move up and to the right on the velocity-quality graph and strike the balance that so many data teams strive for.

See you on this frontier!
