Lakebridge alternatives: Why Datafold is the best choice

Lakebridge helps partners automate migrations to Databricks SQL, but its narrow focus and rule-based approach come with tradeoffs. Datafold’s Migration Agent offers a more flexible, AI-powered solution that supports multiple target platforms and provides value-level data validation.

Lakebridge is Databricks’ new open-source toolkit for migrating enterprise workloads to DBSQL, but it’s not plug-and-play. Designed for partners, not end users, Lakebridge requires deep config knowledge, manual setup, and legacy system fluency to use effectively.

Originally developed as BladeBridge and acquired by Databricks in early 2025, Lakebridge was rebranded and relaunched to support Databricks’ growing partner ecosystem. At the 2025 Databricks Summit, the positioning was clear: this is a tool for system integrators (SIs) and consultants.

We reviewed the docs and launch materials to unpack what Lakebridge actually does and where it falls short. If you’re an SI, you’ll find a detailed walkthrough of its architecture, strengths, and current limitations. If you’re a company planning a migration, this guide will help you understand why Lakebridge likely requires external help, and why those tradeoffs matter when speed, accuracy, and long-term flexibility are on the line.

Lakebridge’s current limitations include:

- No comprehensive value-level data validation: Lakebridge offers schema-, column-, and row-level reconciliation (with exact and aggregate modes), but its documentation does not indicate support for the full field-by-field comparisons needed to guarantee 1:1 data parity.

- Static, rule-based translation only: Lakebridge’s Converter uses configuration-driven, rule-based logic for code translation. This works well for common patterns, but complex or custom logic may require manual fixes, especially for stored procedures or unconventional SQL.

- No AI-driven feedback loop: Translation is one-directional. There is no “translate, diff, repeat” feedback cycle powered by advanced AI or large language models to fine-tune conversions until complete parity is achieved.

- Limited workflow transformation: Lakebridge focuses on migrating legacy SQL and ETL scripts into Databricks SQL or notebooks, but does not natively support modern tools like dbt or Coalesce.io as targets or transformation frameworks.

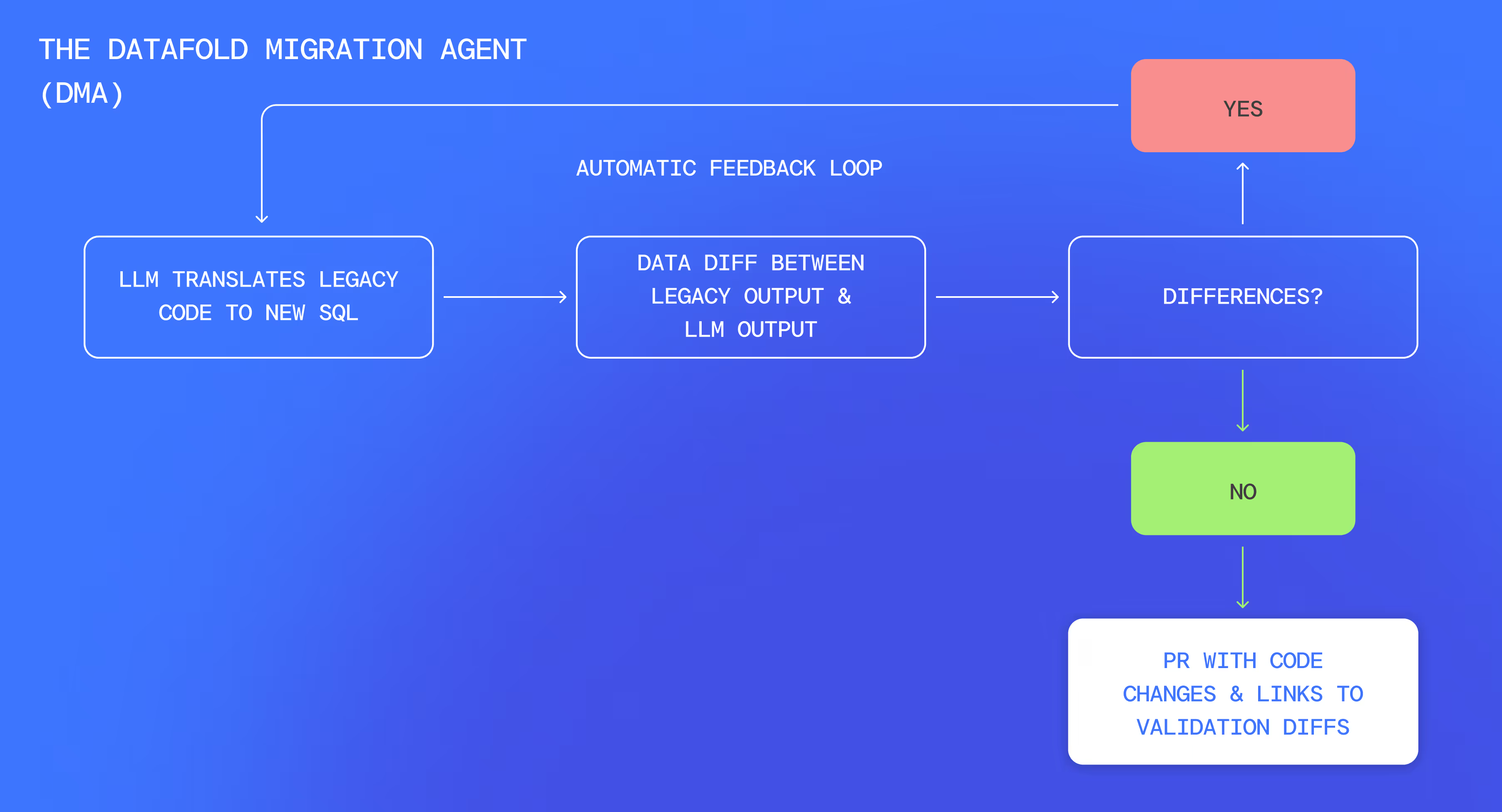

In contrast, Datafold’s Migration Agent (DMA) is data warehouse agnostic and offers true end-to-end automation, from code translation to value-level validation. Its LLM-powered feedback loop fine-tunes translations until value-level parity is achieved, comparing every data point across legacy and new systems to ensure that every record is migrated perfectly.

It’s this level of automation and precision that makes Datafold the superior choice for complex migrations or high-stakes data integrity requirements.

What is Lakebridge and how does it work?

Lakebridge is an open-source, CLI-based tool to help migrate legacy SQL and ETL workloads into the Databricks ecosystem. Rather than a turnkey product, it’s positioned as a migration accelerator that technical teams and partners can use to streamline migrations to DBSQL.

We pieced together its capabilities from product descriptions and documentation to understand what it does well, where it has limitations, and how it stacks up against Datafold’s Migration Agent. (If you’re ready to get into the technical weeds, check out DMA’s docs or this blog post on how DMA works.)

Each tool within Lakebridge addresses a different phase of the migration process. While promising in scope, the tools remain loosely integrated and require a fair amount of hands-on work to get right. Here’s what each component does, and where its limits show.

Lakebridge features and components

Lakebridge includes four key modules designed to support different stages of a migration:

Profiler

As of the publication date, the Profiler component is only lightly documented. Based on early launch materials and legacy BladeBridge functionality, the Profiler is designed to connect to the source database and extract metadata and usage patterns to help assess the scope of a migration.

Analyzer

The Analyzer profiles databases, stored procedures, and ETL workflows, giving you a clear map of what you’re working with. This helps you understand the scope and complexity of your migration, making it easier to plan timelines and resources.

However, it doesn’t connect directly to your source database. Code must be manually exported for analysis, and the insights are primarily structural. There’s no fine-grained column-level lineage or value-level impact analysis to help understand how specific changes could ripple through downstream models.

Converter

This is the workhorse of Lakebridge. It translates legacy SQL, stored procedures, and ETL scripts into Databricks SQL or Databricks notebooks. BladeBridge, the primary converter, uses a configuration-driven approach to generate Databricks-compatible outputs, including workflows and code artifacts.

However, the translation layer remains rule-based, not semantic. The BladeBridge plugin relies on static pattern-matching rather than structured understanding, which makes it prone to failure on complex or unconventional logic. This approach was state-of-the-art when BladeBridge first emerged, but it is showing its age next to modern, LLM-powered systems that take context and intent into account.

Translation success will likely be inconsistent, particularly for stored procedures, nested queries, or domain-specific edge cases where manual rework may still be necessary.
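To make the distinction concrete, here is a toy sketch of static pattern-matching translation in Python. The names `RULES` and `translate` and the rules themselves are hypothetical; they are not taken from BladeBridge or Lakebridge and only illustrate why fixed rewrite rules handle anticipated patterns and pass everything else through unchanged.

```python
import re

# Hypothetical rewrite rules: (pattern in the legacy dialect, replacement).
# Illustrative only; this is not Lakebridge's or BladeBridge's rule set.
RULES = [
    (re.compile(r"\bNVL\s*\(", re.IGNORECASE), "COALESCE("),
    (re.compile(r"\bSYSDATE\b", re.IGNORECASE), "CURRENT_TIMESTAMP()"),
]


def translate(sql: str) -> str:
    """Apply each rewrite rule in order; text no rule matches is left as-is."""
    for pattern, replacement in RULES:
        sql = pattern.sub(replacement, sql)
    return sql


# A query built from anticipated patterns is rewritten cleanly.
common = translate("SELECT NVL(amount, 0), SYSDATE FROM orders")

# A construct no rule covers (here, an Oracle-style hierarchical query)
# comes back unchanged, so it would still need manual review.
hierarchical = "SELECT id FROM emp CONNECT BY PRIOR id = manager_id"
unusual = translate(hierarchical)
```

In this sketch the translator has no way to signal that `CONNECT BY` was not handled; it simply returns the input, which is the kind of silent gap that a separate validation step has to catch.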

A second plugin, Morpheus, offers stricter equivalence guarantees and structured parsing, but supports fewer dialects and is still early-stage.

Validator

The final step in Lakebridge includes Reconcile, an automated tool designed to compare datasets between a legacy system and Databricks after migration.

Reconcile supports comparisons at the schema, column, and row levels, and can apply exact, aggregate, and threshold-based checks. This helps teams identify structural mismatches and surface inconsistencies in row counts or field presence across environments.

However, Lakebridge’s reconciliation appears to stop short of full value-level data diffing. Based on available documentation, Reconcile can detect that a row has changed, but it does not appear to report which specific columns within that row differ, nor how they differ. There is also no indication that it produces detailed, auditable mismatch reports at the field level.
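The gap between "this row changed" and "this field changed, from X to Y" can be shown with a minimal Python sketch. It is not how Reconcile or Datafold is implemented; the helper names (`row_hash`, `changed_rows`, `value_diff`) and the sample data are assumptions made for illustration.

```python
import hashlib


def row_hash(row: dict) -> str:
    """Hash a whole row; a changed hash says something differs, not what."""
    canonical = "|".join(f"{k}={row[k]!r}" for k in sorted(row))
    return hashlib.sha256(canonical.encode()).hexdigest()


def changed_rows(source, target, key):
    """Row-level check: return the keys of rows whose hashes differ."""
    tgt = {r[key]: r for r in target}
    return [
        r[key]
        for r in source
        if r[key] in tgt and row_hash(r) != row_hash(tgt[r[key]])
    ]


def value_diff(source, target, key):
    """Value-level check: one (key, column, source, target) tuple per mismatch."""
    tgt = {r[key]: r for r in target}
    diffs = []
    for r in source:
        other = tgt.get(r[key])
        if other is None:
            continue  # missing rows would be reported by a separate check
        for col in r:
            if r[col] != other.get(col):
                diffs.append((r[key], col, r[col], other.get(col)))
    return diffs


legacy = [
    {"id": 1, "amount": 10.50, "status": "paid"},
    {"id": 2, "amount": 7.00, "status": "open"},
]
migrated = [
    {"id": 1, "amount": 10.50, "status": "paid"},
    {"id": 2, "amount": 7.01, "status": "open"},
]

row_level = changed_rows(legacy, migrated, "id")    # [2]
field_level = value_diff(legacy, migrated, "id")    # [(2, "amount", 7.0, 7.01)]
```

The row-level result tells you where to look; the field-level result is what an auditable mismatch report would need to contain.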

Additionally, validation in Lakebridge is not iterative or AI-powered. There’s no learning loop to automatically refine translations based on discrepancies uncovered during validation.

Lakebridge migration solution pros and cons

Lakebridge’s strength lies in its open-source availability, support for legacy platforms, and configuration-driven framework.

However, Lakebridge remains Databricks-only, and it lacks the level of granular, value-level data diffing and iterative, LLM-powered feedback loops found in more advanced AI-powered solutions like Datafold. It cannot automatically fine-tune translations until perfect parity is achieved.

Comparing Datafold and Lakebridge

When comparing Lakebridge with Datafold’s Migration Agent (DMA), several key differences emerge. While both tools aim to simplify data migrations, Datafold leverages advanced AI to deliver superior accuracy, flexibility, and speed. This AI-driven approach gives DMA a distinct edge, especially for complex migrations or high-stakes data integrity requirements.

Lakebridge uses rule-based automation via two transpilers: BladeBridge (its mature engine) and Morpheus (a newer transpiler with limited dialect support and experimental dbt repointing). Both rely on predefined translation rules, which makes its translations consistent but rigid, often requiring manual intervention for unconventional code patterns.

Datafold, on the other hand, uses a sophisticated, LLM-powered feedback loop that learns and improves over time. This adaptive AI doesn’t just translate code; it continuously fine-tunes and validates until perfect parity is achieved.

Here’s the head-to-head comparison:

Lakebridge is a free, open-source toolkit that lowers the barrier to entry for DBSQL migrations, but its effectiveness depends heavily on partner expertise and the complexity of your source systems.

While feature checklists are useful, the real test is how these tools perform in live migrations, especially when accuracy, speed, and flexibility matter most.

In industries like finance and healthcare, where data integrity is non-negotiable, migration tools must do more than translate code. They need to preserve logic, validate outputs, and support diverse workflows from Informatica and Oracle to dbt and HL7.

A rigid, SQL-only approach risks losing critical transformations, whereas Datafold’s broader workflow support ensures accuracy and compliance across diverse data environments.

Why Datafold’s AI-driven approach matters

The difference in automation approaches is crucial. Lakebridge uses static, rule-based automation. This approach is prone to breaking with complex or unconventional code patterns, leading to manual workarounds and more testing cycles.

Datafold’s AI-driven approach is different. Its LLM-powered feedback loop doesn’t just translate code—it learns from each iteration, continuously refining translations until perfect parity is achieved. This adaptive AI minimizes human error and accelerates timelines by reducing the need for manual fixes.

In other words, each pass improves the translation, which reduces human intervention and shortens delivery timelines. That makes DMA especially well-suited for complex, high-stakes migrations where precision is critical.
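As a rough sketch of what a "translate, diff, repeat" cycle looks like in code, the Python below loops until a validation step reports no mismatches. It is an illustration under stated assumptions, not DMA's implementation: `migrate_with_feedback`, `fake_translate`, and `fake_diff` are hypothetical names, and the two stubs stand in for an LLM call and a value-level diff.

```python
from typing import Callable, List, Tuple

Diff = List[Tuple]  # list of mismatches; an empty list means parity


def migrate_with_feedback(
    legacy_sql: str,
    translate: Callable[[str, Diff], str],
    diff: Callable[[str], Diff],
    max_rounds: int = 5,
) -> Tuple[str, Diff, int]:
    """Translate, validate, and retry with the mismatches as feedback.

    Stops when the diff is empty or after max_rounds attempts.
    """
    feedback: Diff = []
    candidate = ""
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        candidate = translate(legacy_sql, feedback)
        feedback = diff(candidate)
        if not feedback:
            break
    return candidate, feedback, rounds


def fake_translate(sql: str, feedback: Diff) -> str:
    # Stand-in for an LLM call: it corrects the query only after seeing a mismatch.
    if feedback:
        return "SELECT ROUND(amount, 2) FROM orders"
    return "SELECT amount FROM orders"


def fake_diff(candidate: str) -> Diff:
    # Stand-in for a value-level diff between legacy and migrated outputs.
    return [] if "ROUND" in candidate else [(2, "amount", 7.0, 7.01)]


final_sql, remaining, rounds_used = migrate_with_feedback(
    "SELECT amount FROM orders_legacy", fake_translate, fake_diff
)
```

With these stubs the first attempt fails validation, the mismatch is fed back, and the second attempt reaches parity. A one-directional translator corresponds to running the loop body once and never calling `diff`.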

But that’s not all. Datafold’s Migration Agent also has something called a Source Aligner that helps tackle one of the hardest parts of data migrations: making sure you’re comparing apples to apples.

Before DMA starts translating and validating code, Source Aligner locks in a snapshot of the input datasets on both the legacy and new systems. This way, the inputs to each data transformation model are exactly the same on both sides, with no missing rows and no unexpected values, so the outputs can be compared directly. By freezing the data in place, it cuts out the noise that comes from inconsistent environments.

This means that when differences show up during validation, you know it’s because of actual code changes, not because the data didn’t line up. This ensures more accurate comparisons and more reliable validation from start to finish.
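The idea of freezing inputs before comparing outputs can be sketched in a few lines of Python. This is not how Source Aligner is built; the transforms, table contents, and variable names are hypothetical and exist only to show why a shared snapshot removes false mismatches.

```python
import copy
from collections import defaultdict


def legacy_transform(rows):
    # Stand-in for the legacy model: total amount per customer.
    totals = {}
    for r in rows:
        totals[r["customer"]] = totals.get(r["customer"], 0) + r["amount"]
    return totals


def migrated_transform(rows):
    # Stand-in for the translated model; same logic, different code.
    totals = defaultdict(int)
    for r in rows:
        totals[r["customer"]] += r["amount"]
    return dict(totals)


live_table = [
    {"customer": "acme", "amount": 100},
    {"customer": "acme", "amount": 50},
    {"customer": "globex", "amount": 75},
]

# Freeze the inputs once; both systems read this same copy.
snapshot = copy.deepcopy(live_table)

# The live source keeps changing after the snapshot is taken.
live_table.append({"customer": "globex", "amount": 25})

# Same frozen input on both sides: any difference would come from the code.
aligned_match = legacy_transform(snapshot) == migrated_transform(snapshot)

# Unaligned inputs: the outputs differ even though the logic is identical.
drifted_match = legacy_transform(snapshot) == migrated_transform(live_table)
```

Here `aligned_match` is true and `drifted_match` is false, even though the two transforms are logically equivalent. Without a shared snapshot, that second result would look like a translation bug.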

With its continuous learning AI, transparent audit trails, and unmatched flexibility, Datafold isn’t just a migration tool—it’s a long-term data integrity solution. It’s a unique approach that makes a big difference for high-stakes migrations where accuracy really matters.

Which migration tool is right for you?

Lakebridge represents Databricks’ latest move to lower the barrier for teams migrating legacy systems into its ecosystem. By open-sourcing BladeBridge and building Lakebridge as a partner-first toolkit, Databricks is following an industry trend of making it easier for companies to switch platforms, which could tip the balance in the ongoing cloud data wars.

But Lakebridge’s Databricks-only focus means it’s best suited for teams already committed to DBSQL. If you need flexibility, such as migrating to Snowflake, BigQuery, Redshift, or another data warehouse, Datafold’s Migration Agent (DMA) is worth strong consideration. Its data warehouse agnostic design offers long-term flexibility, which can be crucial for teams navigating multi-cloud environments or avoiding vendor lock-in.

Plus, DMA goes beyond schema checks by comparing every data value across legacy and new systems, ensuring perfect parity. Its advanced AI maintains accuracy through automated validation and continuous refinement.

For teams where accuracy is mission-critical—like finance or healthcare—DMA’s value-level data validation and iterative feedback loop provide the peace of mind that nothing got lost in translation. And with features like Source Aligner and value-level diffing, DMA offers a level of transparency and auditability that’s hard to match.

If you’d like to learn more about how DMA can accelerate your migration, please book time with our team.
