How to build a successful cloud migration testing strategy

Explore essential strategies for cloud data migrations. Learn about lift and shift, migrate and refactor methods, and effective testing techniques for a seamless transition.

There are two levels of difficulty when it comes to cloud data migrations: pretty hard, but doable and OMG how do I make it stop? More formally, the industry calls these strategies "lift and shift" and "migrate and refactor" (or sometimes "transform and shift").

Each migration strategy requires testing that proves whether the migration was successful. In a lift and shift scenario, success means everything is the same between the old and new systems. In a migrate and refactor scenario, success means validating that dozens—if not hundreds—of functions still work.

No matter where you are in your data migration journey, we’re here to help. Let’s dig in and talk about the best testing strategies so you can come out on the other side of this thing feeling like you’re at the top of the mountain and not buried somewhere underneath.

Why undergo a data migration?

Data migrations are important work. For the past several years (and many more to come), companies have decided to move their data out of legacy systems and into something more modern.

There are countless reasons for a data migration. Some are simple, some complex, some driven by a business requirement, some driven by regulatory compliance. Here are some examples:

- Business intelligence and analytics: To make data accessible to a BI and visualization suite

- Data center relocation: Migrating out of on-prem data centers and going all-in on the cloud for cost savings and scalability

- Changes to business relationships: A merger or acquisition requiring data consolidation across several business entities

- Regulatory compliance: A legislative change or scaling business into new geographic territories that have specific laws around data management

- Cost savings: Moving off of expensive, proprietary solutions and into something more open or affordable

- Business agility: Implementing a more flexible infrastructure that allows for more real-time decision making

Choosing to undergo a cloud data migration is an important decision that affects the business, the technology, and the people using the data. We definitely recommend checking out our Data Migration Best Practices guide if you want to know more.

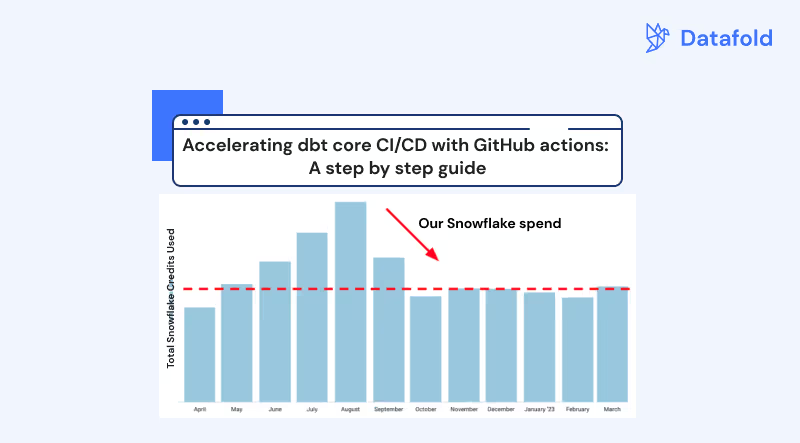

Where to apply tooling and automation to your data migration

Tooling and automation are your best friends when it comes to your migration project. Moving between source and destination systems is always, always, always riddled with technical complexities and opportunities for big and small problems to pop up. You’re going to need help because you’ll be testing your work every step of the way.

Furthermore, the bigger your data migration, the more you’ll rely on tooling and automation. Data complexity grows non-linearly. (No, we don’t have the math to prove it. You’re just gonna have to take our word for it.) The addition of a single table can lead to innumerable data consumers, all of which need you to get this migration right. Without the right tools, you’re unlikely to have visibility into the people, processes, and systems that are depending on your data.

So, where do you apply tooling, automation, and testing in your data migration project? Always and everywhere. Here’s why:

- No team of data engineers can keep track of all the implications of a data migration

- Data is your most valuable asset and you need to get it 100% right, not 95% or 98%

- Everyone makes mistakes and tools are the best way of hedging your bet against imperfection

- Everyone underestimates the complexity of a migration

To quote Dwight Einsehower, “Plans are nothing, but planning is everything.” You can win an academy award for the most complete and perfect data migration plan and you’ll still find stuff later on that you forgot. It’s hard enough to do the day-to-day data engineering work. Forget about flawlessly pulling off a migration without tooling and automation.

Planning a migration is HARD! It requires a lot of detail-oriented work. You really have to know what assets are important, who’s using them and how, and then determine what can be deprecated during your migration. We highly recommend using a tool like Datafold with column-level lineage that integrates with your entire stack. It’s the most effective means of identifying and prioritizing your migration assets as quickly as possible.

Getting your migration, validation, and translation right

There are three phases to a data migration: planning, translation and migration, and validation. The biggest and most important phases are migration and validation.

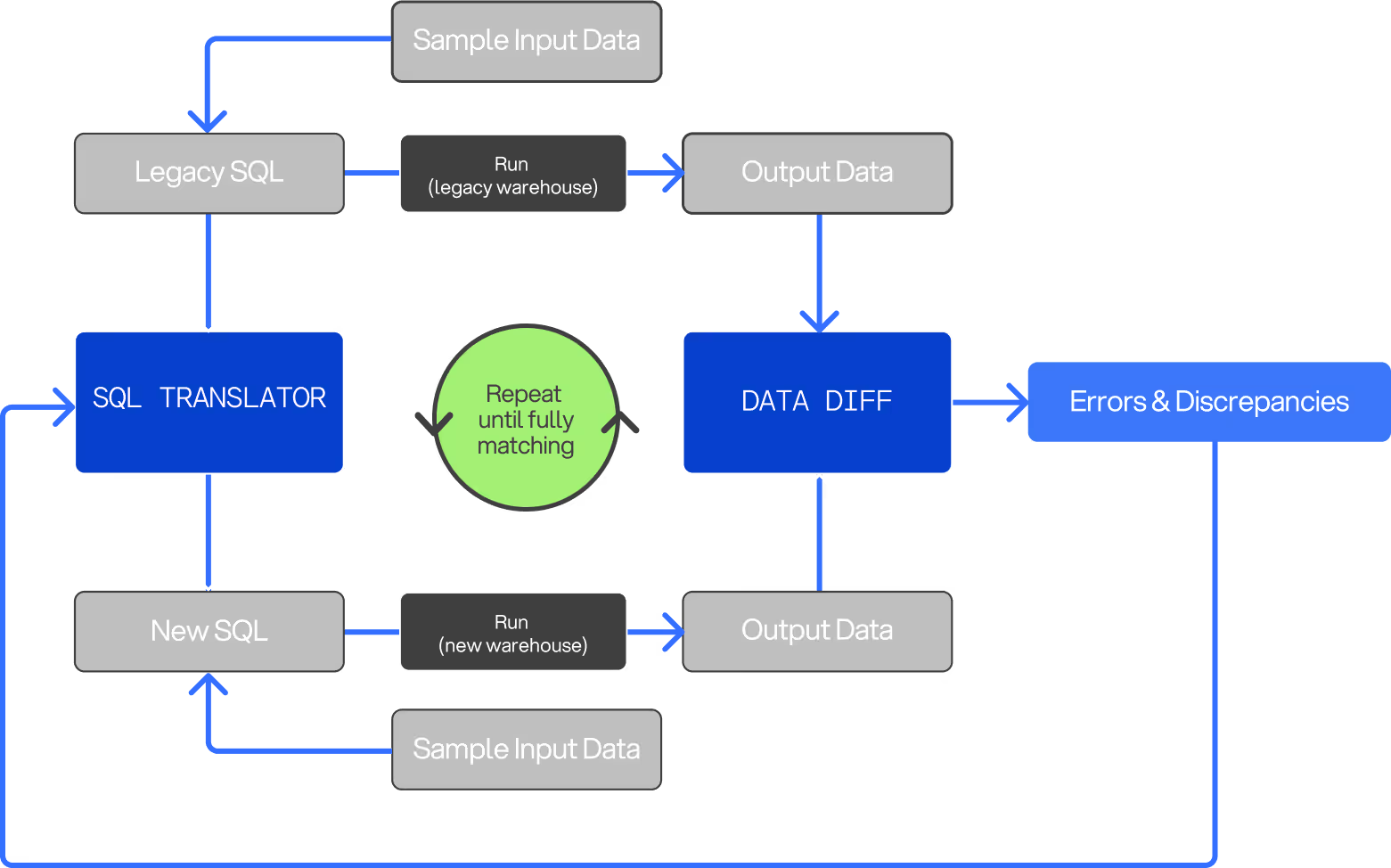

In the migration phase, you need to move everything* from A to B. That’s always a little more complex than people usually expect. Even if both A and B are SQL-based, that doesn’t mean the SQL scripts for A will work in B. For example, let’s say you’re migrating from Teradata to Snowflake. This involves architectural and SQL-support differences that require rewriting or translating SQL scripts to function correctly in the target system. Here’s where Datafold’s Migration Agent (DMA) makes a significant difference:

It automates SQL translation and ensures your legacy SQL scripts are optimized for the new system, saving time and reducing the risk of errors. With DMA, even complex transformations or stored procedures can be automatically translated, validated, and made migration-ready.

The translation phase is about ensuring your legacy SQL scripts are rewritten to function correctly with the new system. It’s an important part of a successful data migration, but isn’t as immediate a concern as the migration and validation. But if you have hundreds of scripts, is that something you’ll want to do manually? It’s much better to use a modern, automated translation tool like the one supported by Datafold and then use automation to test the new scripts’ functionality.

Then there’s the validation phase: the most tedious and difficult part of any migration. You’re going to need to validate that the move was successful—more importantly, you need to prove to stakeholders that their vital data is unchanged between systems. Questions like these need answers:

- Did you actually move everything?

- Is the data intact?

- Did your unicode entities get messed up in the process?

- Are your most important data consumers getting data back in the way they used to?

There’s really only one way to answer these questions: tooling and automation. No matter how large or small your data migration, ensuring you’re at 100% parity between your old and new systems is not a manual task. This is where Datafold’s cross-database data diffing comes into play. With row-level precision, Datafold can compare your source and target databases, highlighting discrepancies and ensuring complete parity between systems. This automation not only reduces the risk of errors but also provides the actionable insights and confidence stakeholders need to move forward.

*There are often tables that are unused in a legacy system and should be deprecated/not migrated to your new system. Column-level lineage paired with usage data can be an effective means of identifying unused/non-queried tables in your data warehouse.

Example migration from SQL Server to Snowflake

Let’s walk through a super high-level sample migration from Microsoft SQL Server to Snowflake, keeping an eye out for where you should apply tooling and automation.

Step one: Understand your legacy architecture. Identify what databases, objects, schemas, and functions you’re going to migrate. Map out all your data producers and consumers—including users/roles, systems, and applications. This will include your data pipelines, scripts, third-party SaaS apps, internal BI tooling, and humans (including non-functional requirements).

- Opportunities for tooling: Column-level lineage is a great tool to quickly identify which tables are powering the most important reports of your business. Also check out automated documentation tools to map out your SQL Server environment. The more you know your dependencies and complexities, the better.

Step two: Develop a migration plan. Determine what you can and cannot easily migrate. Some databases will fit in easily to your new Snowflake architecture, some may not. You should have enough information here to develop a set of “final state” diagrams and documents. Some legacy approaches will remain, some will be replaced.

- Opportunities for tooling: Use a project management tool. Trust us. Data migration plans change all. the. time. You could be 12 months into a “migrate and refactor” effort when you realize that your next-gen data destination isn’t going to be your destination.

Step three: Execute the migration. The migration will occur in phases and likely in a priority order that makes sense for your business. We recommend following an “order of operations” that includes migrating the assets that power your business reporting first (so there’s little disruption to your BI tool). If you’re doing lift and shift, this process might take weeks. If you’re transforming as you go, it could take years.

- Opportunities for tooling: We have a whole article discussing our favorite data migration tools. But if you’re into spoilers: we recommend commercial tools like Fivetran and Airbyte to expedite moving your data between systems.

Step four: Translate the legacy scripts. Update everything that made your legacy SQL infrastructure run your business. Get it all ready and operational for Snowflake. For example, SQL Server SQL syntax for data functions, like DATENAME, is just different enough to Snowflake’s DAYNAME, that would cause issues as you're migrating your data between systems. Use a tool like Datafold with a SQL translator, so you can avoid tedious SQL syntax differences.

Step five: Validate the migration. You’re likely to be continuously replicating, validating, and testing throughout the migration process, but you’ll do a final validation before shutting down the legacy SQL infrastructure. In the meantime, there will be a lot of fixing of what didn’t go according to plan.

- Opportunities for tooling: Datafold Cloud supports fast cross-database diffing, so you can identify the parity of tables across databases in seconds. No more jumping between systems and writing ad hoc SQL tests; simply know if your data is equal across systems with Datafold. This is the most effective and fastest way to get stakeholder sign-off on your team’s migration efforts.

Step six: Party hard! The migration is done, the data is validated, the scripts are translated, and it’s time to get down.

- Opportunities for tooling: We recommend an automated cocktail making machine.

Test the night away

As you can see, there’s opportunity for testing at every little step of a data migration. Because these projects are so complex, you want to get the best possible set of tools to keep you and your work in check. Doing a migration well means testing well, automating well, and having helpful tools.

Our cloud data migration testing strategy is clearly: automate where you can. Validating that a migration was successful can take an organization months without the aid of column-level lineage for planning, a SQL translator to avoid taxing SQL dialect nuances, and cross-database diffing.

Ready to see how Datafold can accelerate your data migration, whether it’s from Teradata to Snowflake or another platform? Reach out to one of our migration experts to share details about your tech stack, scale, and concerns. We’ll help you explore how solutions like automated data diffing can streamline your migration and address your biggest challenges.

.avif)