Redshift to Snowflake Migration: Challenges, Best Practices, and Practical Guide

Navigating the transition from Amazon Redshift to Snowflake comes with an array of technical challenges, demanding meticulous planning and strategic approaches. This article delves into key obstacles, including architectural disparities and distinct SQL dialects, offering insights into the unique attributes of each platform.

We look at the main challenges, four best practices, and share our strategies on how your team can best overcome these hurdles to ensure a seamless transition from Redshift to Snowflake.

Lastly, Datafold’s powerful AI-driven migration approach makes it faster, more accurate, and more cost-effective than traditional methods. With automated SQL translation and data validation, Datafold minimizes the strain on data teams and eliminates lengthy timelines and high costs typical of in-house or outsourced migrations.

This lets you complete full-cycle migration with precision–and often in a matter of weeks or months, not years–so your team can focus on delivering high-quality data to the business. If you’d like to learn more about the Datafold Migration Agent, please read about it here.

Common Amazon Redshift to Snowflake migration challenges

Technical challenges

Migration from Redshift to Snowflake introduces technical challenges that necessitate meticulous planning and a strategic approach. While there are some differences in their architectures, both platforms function as cloud-based data warehouses. Hence, the primary challenges revolve around migrating, SQL dialect differences, and validating the data. We look at these hurdles here.

Migration planning

Even though both Redshift and Snowflake are cloud warehouses with somewhat similar architectures, data teams still need to invest considerable time upfront to ensure that data structures, schemas, and data types are accurately mapped and transformed to fit the target environment.

Misaligned schemas can lead to data import issues, query failures, and data integrity risks. Redshift and Snowflake may use distinct naming conventions, data types, and constraints for defining schemas. Hence, schema elements must be converted to ensure compatibility with.

Data types employed in Redshift might not always have direct equivalents in Snowflake. Thus, data type mapping and conversion are essential to prevent data loss or corruption during the migration. This requires careful attention to detail and validation to ensure that the data types in Snowflake can effectively accommodate the migrated data.

Using column-level lineage to help plan out migration assets can mitigate some of these challenges. Datafold’s column-level lineage enables data teams to trace the flow of data from its source to its destination, providing insights into how columns are used and transformed throughout the data pipeline. By understanding the lineage of each column, data teams can identify dependencies, detect potential issues, and ensure that all necessary transformations are properly accounted for in the migration process.

SQL dialect differences

SQL dialect differences between Redshift and Snowflake represent another significant hurdle during migration. While both databases use SQL as their query language, there are notable distinctions in their syntax, functions, and capabilities that can pose challenges for data teams.

Traditional translation tools often struggle with these complexities, turning what might seem like a straightforward task into a months- or even years-long process.

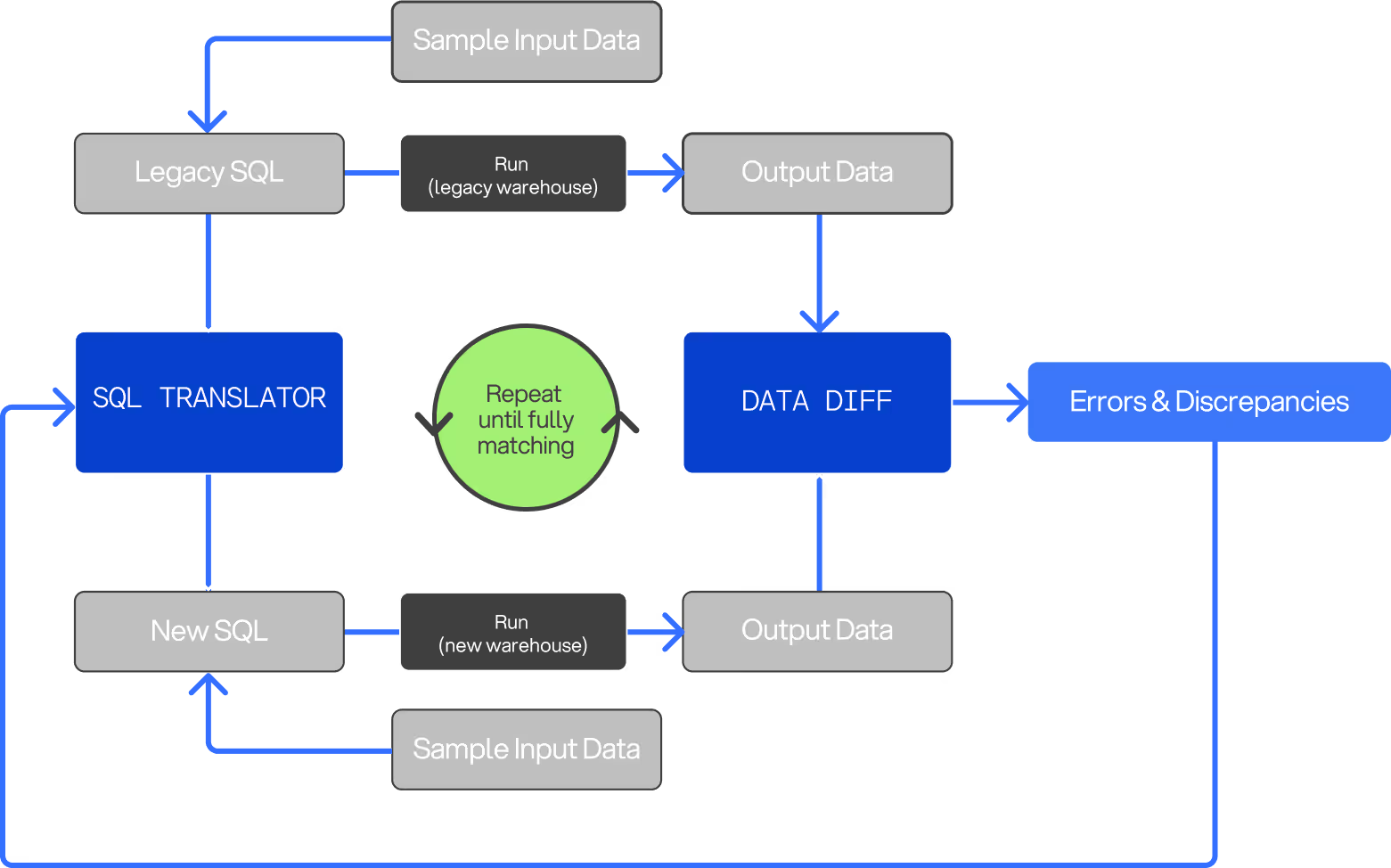

Datafold’s Migration Agent simplifies this challenge by automating the SQL conversion process, seamlessly adapting Teradata SQL code—including stored procedures, functions, and queries—for Snowflake. This automation preserves critical business logic while significantly reducing the need for manual intervention, helping teams avoid the lengthy, resource-intensive rewrites that are typical in traditional migrations.

Redshift SQL

Amazon Redshift uses a PostgreSQL-based SQL dialect, which is ANSI SQL compliant. However, it includes unique data warehousing extensions like columnar storage and automatic compression. Redshift's SQL dialect supports analytical functions, window functions, and complex query optimization.

Snowflake SQL

Snowflake utilizes a dialect of SQL that closely adheres to ANSI SQL, making it familiar to most database professionals. Its SQL offers far more robust and comprehensive support for JSON; in Redshift, working with JSON data can be cumbersome and often requires additional preprocessing and transformation steps, while Snowflake's SQL dialect allows you to work directly with JSON data, making it accessible and queryable without the need for extensive data transformation. Snowflake's SQL dialect also facilitates efficient scaling of compute resources, optimizing performance and cost-efficiency for diverse workloads.

Example of dialect differences between Redshift and Snowflake: the coalesce function

Let's consider a distinct example that highlights the differences in handling the coalesce function in Redshift and Snowflake, which often becomes evident when using a SQL translator.

In a hypothetical scenario where you have a table called sales_data that records sales transactions, you want to query the sales amount for each transaction. However, in some cases, the sales amount might be missing or NULL in the database.

In such a scenario, you might use the coalesce function to handle these NULL values by providing a default value when querying the data.

Query in Redshift’s T-SQL

In Redshift, you can use coalesce with a single argument:

Equivalent Query in Snowflake SQL

In Snowflake, you must provide at least two arguments to coalesce:

Other differences exist: while Redshift uses GETDATE() for current timestamp retrieval, Snowflake employs CURRENT_TIMESTAMP(). Similarly, Redshift's TRUNC() function differs from Snowflake's DATE_TRUNC(), requiring adjustments for date manipulation. Additionally, Snowflake uses a more elaborate syntax when converting timestamps to different time zones compared to Redshift's SYSDATE and DATE() functions.

Some Redshift-specific features like DISTSTYLE, DISTKEY, SORTKEY, and data type distinctions like BPCHAR (blank-padded character) and CHARACTER VARYING (used to store variable-length strings with a fixed limit) have no direct equivalents in Snowflake. It's essential to carefully address these differences to ensure a smooth transition between the two platforms.

Validating data

Migrations introduce the potential for data integrity issues. For instance, data truncation, incorrect data mapping, or data type mismatches can occur, leading to inaccuracies in the migrated data.

Validating data during and after migration is critical for maintaining data quality and ensuring that the transition doesn't introduce discrepancies. This typically entails thorough testing of data pipelines, SQL queries, and ETL processes.

Testing data pipelines involves several things:

- Ensuring that data extraction from Redshift is complete and accurate, with no loss of information during transit

- Validating that any data transformations applied are still aligned with the intended logic and business rules (e.g., confirming that data type conversions executed correctly)

- Checking that data is loaded into Snowflake tables accurately, with correct mapping to target columns

The overall ETL process should also undergo validation testing. This entails a comprehensive, end-to-end test of the entire data workflow, from extraction to data loading in Snowflake. This is a necessary last mile step to triple check that the entire process operates as intended without data loss or integrity issues.

But validation can become a “black box” in migration projects, where surface-level metrics like row counts may align, but hidden discrepancies in data values go undetected.

Traditional testing methods often miss deeper data inconsistencies, which can lead to critical issues surfacing only after the migration reaches production. Each failed validation triggers time-intensive manual debugging cycles, delaying project completion and straining resources.

Benefits of migration to Snowflake

Scalability and cost efficiency

Since Snowflake's architecture decouples data storage from compute resources, you can independently scale compute resources to meet the specific needs of your workloads, eliminating the need for manual optimization and reducing infrastructure costs. This flexibility ensures that your organization can efficiently handle fluctuating data demands, whether they involve running complex analytical queries or handling routine data processing tasks.

Efficient data handling

Snowflake offers a significant advantage over Redshift when it comes to handling diverse data types. Unlike Redshift, which often requires additional preprocessing for semi-structured data such as JSON, XML, and Avro, Snowflake seamlessly integrates these formats into its data environment. Migrating to Snowflake can accelerate their data analytics initiatives by eliminating the time-consuming data transformation steps often necessary in Redshift.

Data sharing and collaboration

One of Snowflake's standout features, setting it apart from Redshift, is its robust data sharing capabilities. Unlike Redshift, which often requires complex and manual data export and sharing processes, Snowflake streamlines this by allowing organizations to share read-only or read-write access to their data with fine-grained control. This not only ensures data governance and compliance but also fosters agile data-driven collaborations and insights.

Business challenges

Migrating from Redshift to Snowflake presents several business challenges that organizations must navigate to ensure a smooth transition. Beyond the technical considerations, a successful migration starts with strategic planning and effective change management. We look at the top five concerns here.

The never-ending “last mile”

Even when a migration is near completion, the “last mile” often proves to be the most challenging phase. During stakeholder review, previously unidentified edge cases may emerge, revealing discrepancies that don’t align with business expectations. This phase often becomes a bottleneck, as each round of review and refinement requires time and resources, potentially delaying full migration and user adoption.

Downtime and performance impact

Migrations often require downtime or reduced system availability, which can disrupt regular business operations. Organizations must carefully plan for these downtimes, schedule migrations during low-traffic periods, and communicate effectively with stakeholders to minimize the impact. Ensuring that performance is not compromised during and after migration is crucial to maintain customer satisfaction and operational efficiency.

User training

Transitioning to a new data platform like Snowflake may require user training and adjustment periods. Business users and analysts may need to familiarize themselves with Snowflake's user interface, reporting tools, and query optimization techniques. Ensuring a smooth transition and minimizing productivity disruptions is vital to maximize the benefits of the migration.

Compliance

Maintaining data compliance and security standards throughout migration can be challenging. Organizations must ensure that data security, privacy, and compliance requirements are consistently met in the new environment. Adhering to regulations such as GDPR, HIPAA, or industry-specific standards remains a priority and may require adjustments to data handling and access policies.

Cost management

Shifting to Snowflake may lead to changes in cost structures. Snowflake's pricing model, based on storage and compute usage, differs from Redshift's, which may impact budgeting and financial planning. Organizations need to carefully monitor and optimize their Snowflake usage to avoid unexpected cost escalations, necessitating ongoing cost management strategies.

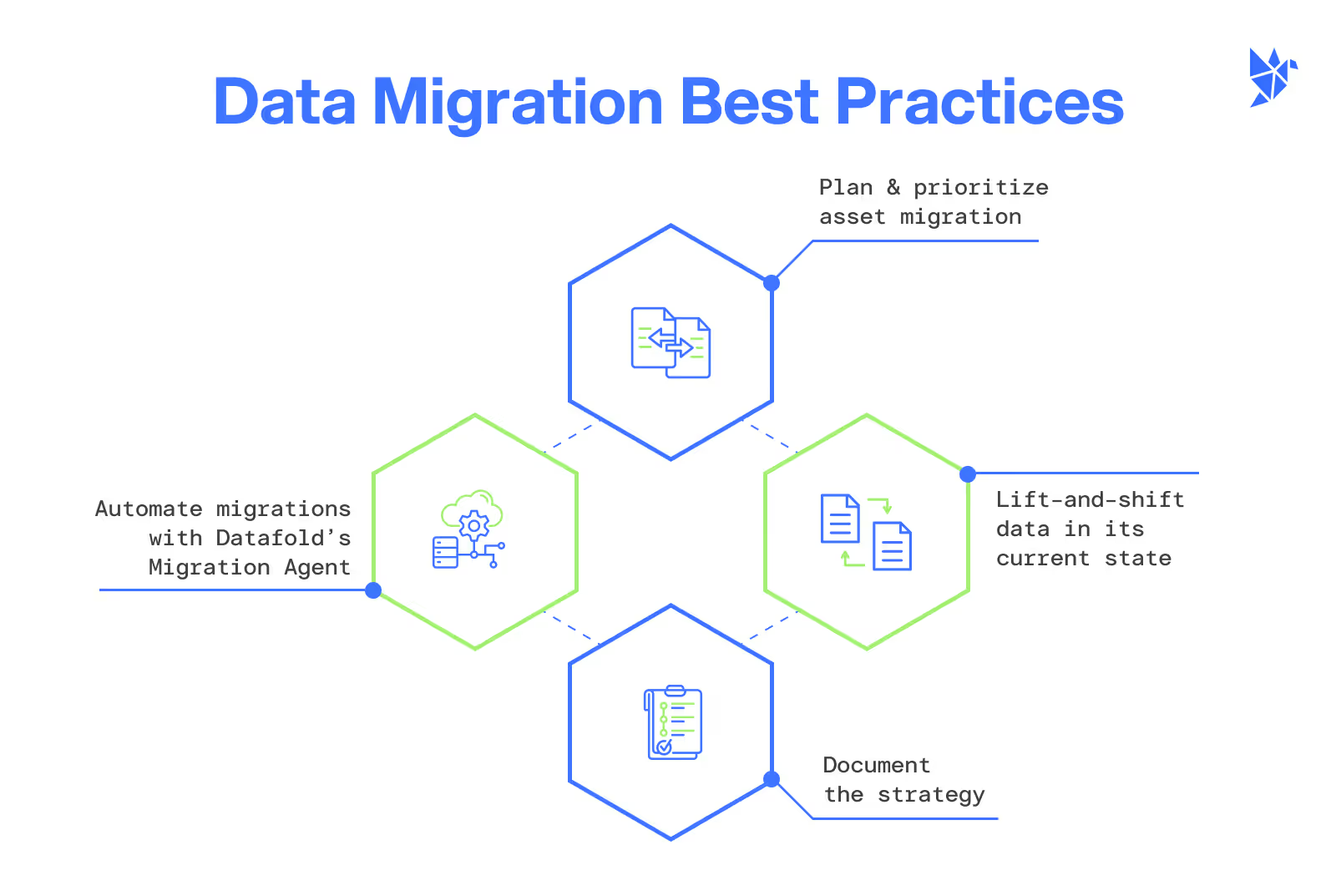

4 best practices for Redshift to Snowflake migration

Migrations test even the best-laid plans, but having a strategy in place can turn what seems challenging into a manageable process. Our extensive guide is a valuable resource, featuring specific best practices curated for your Redshift to Snowflake migration. It serves as a strategic compass, aligning your technical requisites with your overarching business objectives.

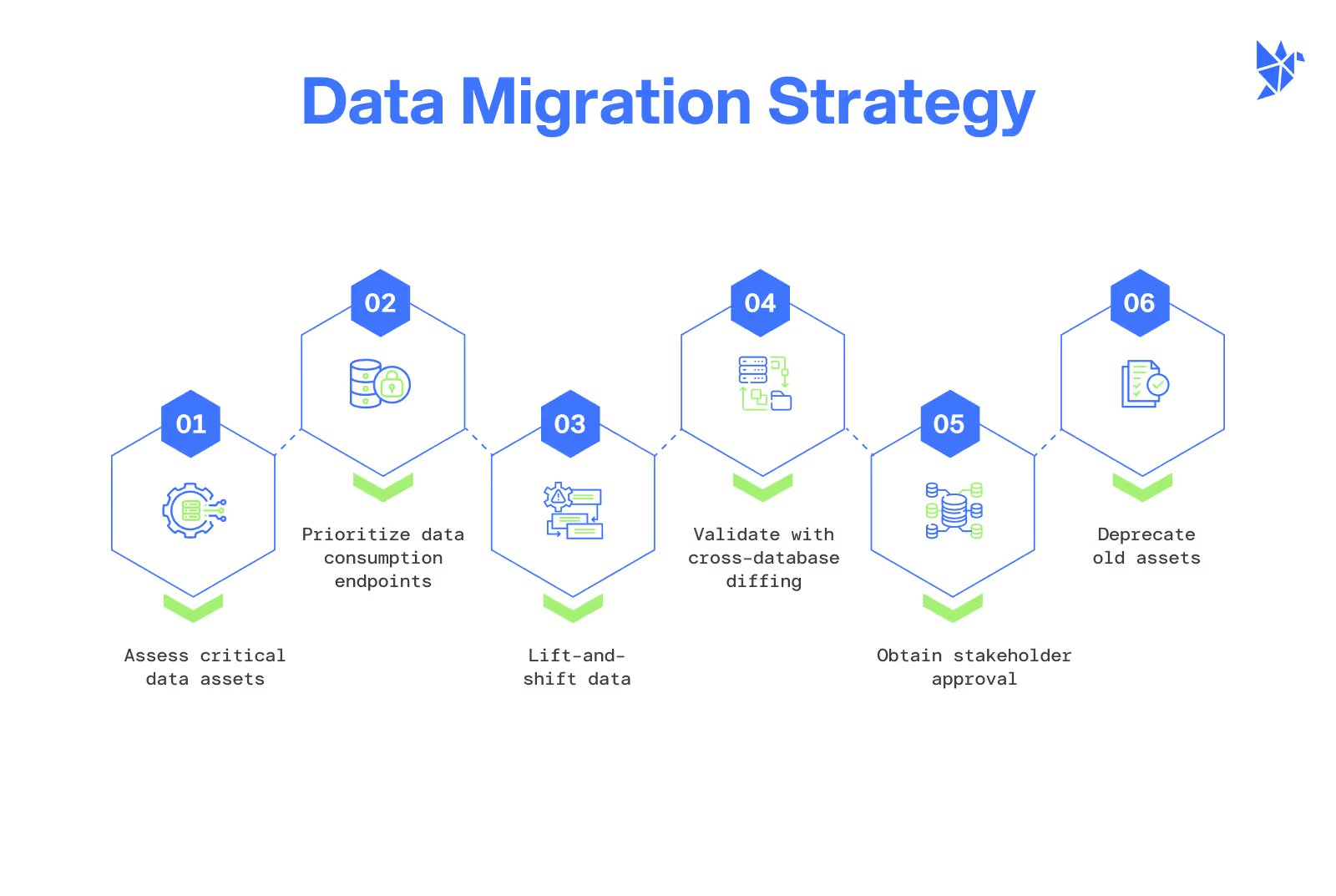

Now, let’s take a look at the key strategies outlined in our guide that were designed to optimize your migration journey:

- Plan and prioritize asset migration

Efficient migration planning entails leveraging column-level lineage to identify critical data assets and potentially deprecated ones for Snowflake integration. The initial step involves migrating data consumption points ahead of data production pipelines, streamlining the transition and alleviating the workload on the Redshift system.

- Lift and shift the data in its current state

Leverage Datafold’s DMA for the initial lift-and-shift, automatically translating Teradata SQL to Snowflake’s syntax, which minimizes manual code remodeling and speeds up migration.

- Document your strategy and action plan

Ensuring meticulous documentation of your migration strategy and execution plan is critical for success. Upfront documentation not only aids in obtaining stakeholder endorsement but also highlights potential blockers to be addressed. Integrate Datafold’s DMA to streamline SQL translation, validation, and documentation for a unified strategy.

- Automating migrations with Datafold’s DMA

Datafold’s Migration Agent (DMA) simplifies the complex process of migrating SQL and validating data parity across Teradata and Snowflake. It handles SQL dialect translation and includes cross-database data diffing, which expedites validation by comparing source and target data for accuracy.

By automating these elements, DMA can save organizations up to 93% of time typically spent on manual validation and rewriting.

Putting it all together: Redshift to Snowflake migration guide

A smooth transition from Redshift to Snowflake demands both technical ability and project management. Our guide presents a comprehensive set of best practices, enabling your team to expertly navigate this transition. By aligning technical and business strategies and leveraging Datafold’s DMA, your team can navigate this transition more effectively. Here’s how to put it all together:

- Plan the migration from Redshift to Snowflake

To prepare for the shift to Snowflake, it's important to first conduct a meticulous analysis of column-level lineage to pinpoint essential data assets earmarked for migration. Start by transferring data endpoints like BI tools and analytics dashboards to Snowflake, guaranteeing seamless data accessibility and minimizing operational disturbances during the transition.Use Datafold’s Migration Agent (DMA) to prioritize critical data assets, starting with data consumption points like BI tools and dashboards to enable a smooth transition with minimal disruption.

- Prioritize data consumption endpoints first

To prepare for the transition to Snowflake, prioritize migrating user-facing data endpoints, such as analytics queries and applications, ahead of data production pipelines. Achieve this by replicating data from Redshift to Snowflake, enabling data users and applications to query directly in Snowflake.

- Implementing lift-and-shift from Redshift Snowflake

Choose a lift-and-shift strategy for a smoother migration, while keeping an eye on architectural differences and variations in SQL dialects between Redshift and Snowflake.Lift-and-shift data in its current state using a SQL translator embedded within Datafold’s DMA which automates the SQL conversion process. Perform data diffs to confirm a 1-1 table equivalence between the Redshift and Snowflake databases.

- Validate with cross-database diffing

Then, use Datafold’s cross-database diffing to verify data parity, enabling quick and accurate 1-to-1 table validation between the Teradata and Snowflake databases.

- Secure stakeholder approval

Once the migration is stable, seek stakeholder approval. Utilize data diff tools to showcase parity between Redshift and Snowflake, strengthening the migration's effectiveness.

- Deprecate old assets

Announce the phasing out of legacy Redshift resources and distribute parity reports to stakeholders, bolstering their transition to the Snowflake environment.

Conclusion

If you're eager to discover how Datafold can help your data team handle migrations more efficiently, reach out to a data migrations expert and tell us about your migration, tech stack, scale, and concerns. We’re here to help you understand if data diffing is a solution to your migration concerns.

Migrations pose significant challenges and often extend over long periods. Datafold simplifies this process through automation, empowering your team to focus on what truly matters: delivering reliable, high-quality data to your organization.