Introducing data replication testing in Datafold

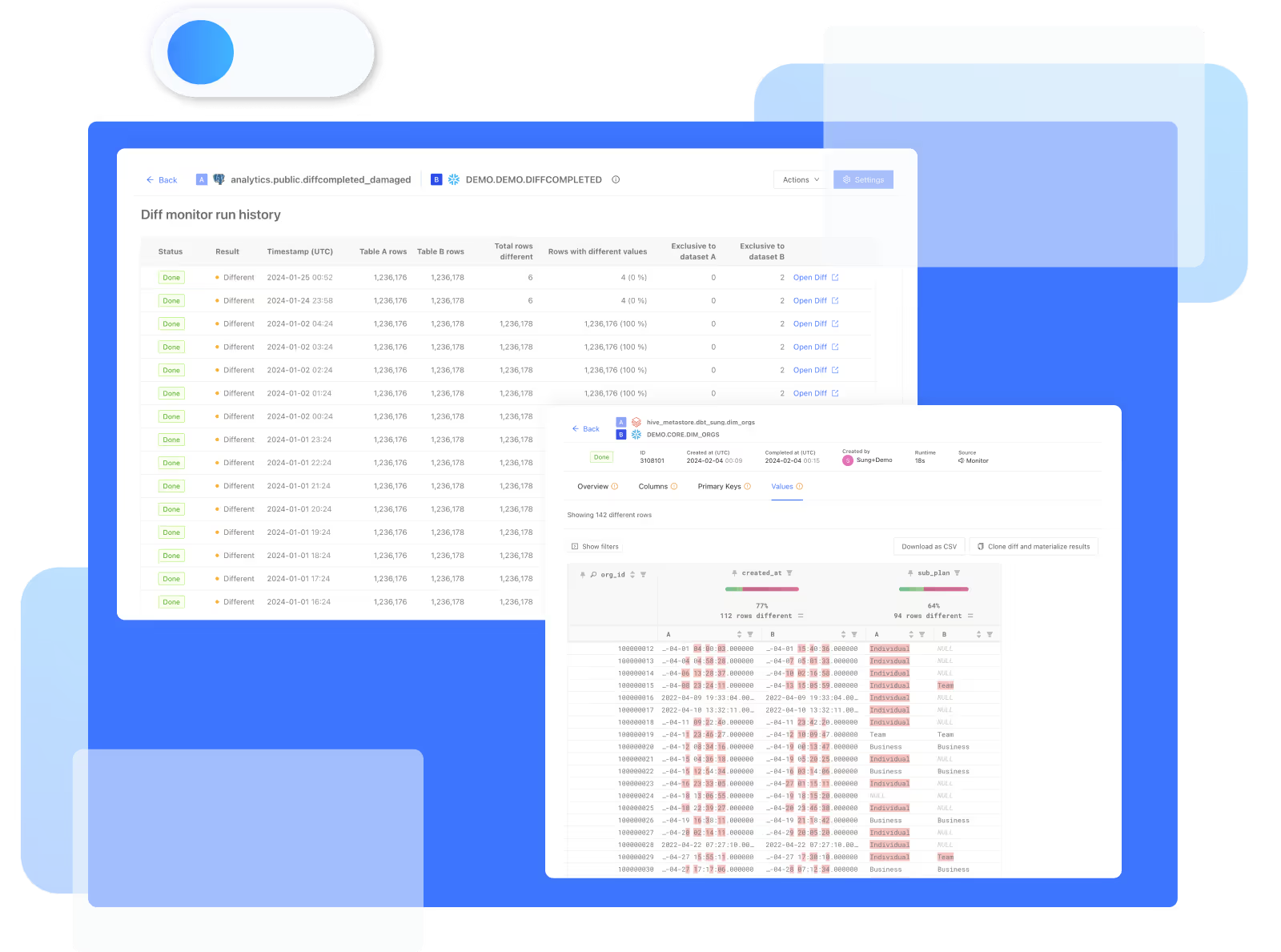

Introducing Monitors in Datafold: scheduled cross-database diffs for continuous source-to-target validation.

Today, we’re continuing our commitment to solving data quality issues in the data reconciliation process by addressing an often overlooked (and untested!) part of your data workflow: data replication.

Using Datafold’s cross-database diffing and new monitoring capabilities, your team can now perform continuous source-to-target validation for data replication pipelines.

With Monitors in Datafold, your team can quickly check whether tables are in parity across systems on an ongoing basis. Monitors let you:

- Run data diffs for tables across databases on a scheduled basis.

- Receive alerts to Slack, PagerDuty, webhooks, or email when data diff results deviate from your expectations.

- Analyze, down to the value level, exactly how two tables match or differ.

We know how important it is for the data you’re replicating across databases to be right. This data is often mission-critical, powering core analytics work and machine learning models, so guaranteeing its reliability and accessibility is vital.

With Datafold, test your replication pipelines automatically and continuously. Validate consistency between source and target at any scale, and receive alerts about any discrepancies.

The challenges of ongoing data reconciliation

Your data replication pipelines are broken. (OK, maybe not right now, but they have been in the past, and they will be in the future.)

Your data replication pipelines can break for a number of reasons, such as:

- Outages or errors in data movement and ETL/ELT tools

- Internal infra or backend engineering updates that break your EL process

- Custom ETL/ELT pipelines that break under data scale

Most importantly, we know that broken replication pipelines and the resulting data quality issues (missing, corrupted, incorrect, or untimely data) have been a persistent, unsolved pain for data engineers.

We’ve spent the past six months talking to data teams about both why they’re replicating data and how they’re validating parity between databases. We heard several common themes:

- Comparing data across databases is technically challenging, and existing approaches often do not offer the level of granularity or depth needed to actually identify and solve issues.

- There’s little to no testing on many replication pipelines. Writing tests for these pipelines is challenging because they must account for data volume, growth, and replication speed. dbt source tests only capture known unknowns, and custom SQL and Python tests are often naive.

- When there are issues with data replication, there are often major (negative) repercussions to the business and data team.
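To see why a naive custom test can miss real problems, here is a minimal sketch in plain Python. It is an illustration only, not Datafold’s implementation: the sample rows and the helper names `row_count_matches` and `value_level_diff` are hypothetical, made up for this example. A row-count check passes even though one value was corrupted during replication, while a key-by-key comparison surfaces the exact column that differs.

```python
# Hypothetical sample data: rows keyed by primary key (id).
source = {
    1: {"email": "a@example.com", "plan": "pro"},
    2: {"email": "b@example.com", "plan": "free"},
    3: {"email": "c@example.com", "plan": "pro"},
}
target = {
    1: {"email": "a@example.com", "plan": "pro"},
    2: {"email": "b@example.com", "plan": "pro"},  # value corrupted in replication
    3: {"email": "c@example.com", "plan": "pro"},
}


def row_count_matches(src, tgt):
    """Naive test: only compares the number of rows on each side."""
    return len(src) == len(tgt)


def value_level_diff(src, tgt):
    """Compare rows by primary key; report missing rows and differing values."""
    diff = {"missing_in_target": [], "missing_in_source": [], "changed": {}}
    for key in sorted(src.keys() | tgt.keys()):
        if key not in tgt:
            diff["missing_in_target"].append(key)
        elif key not in src:
            diff["missing_in_source"].append(key)
        else:
            # Collect (source_value, target_value) for every column that differs.
            changed_cols = {
                col: (src[key].get(col), tgt[key].get(col))
                for col in sorted(src[key].keys() | tgt[key].keys())
                if src[key].get(col) != tgt[key].get(col)
            }
            if changed_cols:
                diff["changed"][key] = changed_cols
    return diff


counts_ok = row_count_matches(source, target)  # the naive test passes
diff = value_level_diff(source, target)        # the value-level diff does not
print("row counts match:", counts_ok)
print("changed values:", diff["changed"])
```

An in-memory comparison like this only works for small tables that live in one place. Doing the same thing across two databases at scale is the hard part described above.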

For many of us, data replication is a vital part of our data workflow, yet there’s little to no visibility into the performance or quality of these replication pipelines. When things go wrong, it leads to major firefighting and wasted time.

Today, we’re excited to solve these issues by offering Monitors in Datafold: scheduled data diffs for tables across databases.

“We currently replicate several copies of our data across many geographical regions and cloud providers. Our team was able to easily use the new cross-database diffing feature in Datafold to build automated jobs that continuously verify that all of our data is consistent across the many locations and give us confidence in our replication pipelines.”

- Joel Choo, Software Engineer at Allium

Monitors: Introducing scheduled cross-database data diffs

Monitors in Datafold are the best way to identify parity of tables across systems on a continuous basis. With Monitors, your team can:

- Run data diffs for tables across databases on a scheduled basis.

- Set an error threshold for the number or percent of rows with differences between source and target.

- Receive alerts with webhooks, Slack, PagerDuty, or email when data diff results deviate from your expectations.

- Investigate data diffs, all the way down to the value level, to quickly troubleshoot data replication issues.
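As a rough illustration of how an error threshold could turn a diff result into an alert decision, here is a minimal Python sketch. It is not Datafold’s API: `DiffResult`, `Threshold`, and `should_alert` are hypothetical names, and it assumes a threshold is expressed either as an absolute number of differing rows or as a percent of rows compared.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DiffResult:
    """Hypothetical summary of one scheduled diff run."""
    total_rows: int      # rows compared between source and target
    differing_rows: int  # rows with at least one difference


@dataclass
class Threshold:
    """Hypothetical threshold: either limit may be left unset (None)."""
    max_rows: Optional[int] = None       # absolute number of differing rows allowed
    max_percent: Optional[float] = None  # percent of differing rows allowed


def should_alert(result: DiffResult, threshold: Threshold) -> bool:
    """Return True when the diff exceeds any configured limit."""
    if threshold.max_rows is not None and result.differing_rows > threshold.max_rows:
        return True
    if threshold.max_percent is not None and result.total_rows > 0:
        percent = 100.0 * result.differing_rows / result.total_rows
        if percent > threshold.max_percent:
            return True
    return False


# 1,500 differing rows out of 1,000,000 is 0.15% of rows.
result = DiffResult(total_rows=1_000_000, differing_rows=1_500)
alert_by_percent = should_alert(result, Threshold(max_percent=0.1))  # 0.15% > 0.1%
alert_by_rows = should_alert(result, Threshold(max_rows=5_000))      # 1,500 <= 5,000
print(alert_by_percent, alert_by_rows)
```

The same diff can be acceptable under one threshold and alert-worthy under another, so the choice between a row count and a percent depends on table size and on how much drift the pipeline can tolerate.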

Datafold will also keep a historical record of your Monitor’s data diff results, so you can look back on replication pipeline performance and keep an auditable trail of success.

Even when tables contain millions or billions of rows, Datafold’s unique data diffing algorithm scales to perform diffs fast (in seconds or minutes).

Datafold also integrates with 13 SQL databases, so you can ensure parity of tables across the databases you care about.

“Since we started using this feature, Datafold has shipped performance improvements to the diffing algorithm that have resulted in the diffs running orders of magnitude faster, which helped us to save on cloud spend and allowed us to run more comprehensive verifications more frequently.”

- Joel, Allium

Demo

Watch Solutions Engineer Leo Folsom walk through how data replication testing works in Datafold.

Get visibility into your data replication pipelines

If you’re ready to learn more about how Datafold is changing the way data teams test their data during data reconciliation, there are a few ways to get started:

- Request a personalized demo with our team of data engineering experts. Tell us about your replication pipelines, tech stack, scale, and concerns. We’re here to help you understand whether data diffing is the right fit for your data reconciliation efforts.

- Join me at our next webinar on April 3rd, where our team will be giving a live demo of our replication testing solution, so you can see the product experience for yourself.

- For those who are ready to start playing with cross-database diffing today, we have a free trial experience of Datafold Cloud, so you can start connecting your databases right away.

Happy diffing!
