How to Simplify Your Move from a Data Warehouse to a Data Lake

Accelerate data migrations with Datafold. Automate SQL translation, ensure data integrity, and transition from warehouse to lake seamlessly and efficiently.

Moving from a data warehouse to a data lake can make you feel like a fish upgrading from a goldfish bowl to the Pacific Ocean — exciting, but terrifying. With a data lake, you get to store all kinds of data — structured, semi-structured, and unstructured — in one massive, accessible pool. This setup is perfect for diving deep into modern analytics, machine learning, and getting real-time insights. But, despite the awesome potential for bigger scalability, cost savings, and more flexibility, the path to get there can be full of twists and turns.

Handling SQL dialect differences and complex data pipeline dependencies during a data migration is often tricky. These technical challenges can disrupt your daily operations and put critical business activities at risk. Your team needs to tackle these issues head-on with tools and strategies that simplify the process and reduce risks without making your life more difficult.

How to tackle data migration complexities head-on

It would be nice if you could export your data warehouse and feed it straight into a data lake, but unfortunately, it doesn’t work that way. The two platforms have distinctly different architectures and storage formats. Simply transferring data from one to the other can lead to compatibility issues, like lost metadata and broken schemas. To do it seamlessly, you need to transform and optimize what comes out of your data warehouse while preserving its integrity and structure.

Some aspects of transitioning to a data lake can be challenging:

- SQL dialect mismatches: Exporting data from BigQuery to Delta Lake can cause issues when BigQuery’s STRUCT types are used, because each engine declares and queries nested structures differently, so complex nested fields may need to be reformatted or flattened.

- Complex dependency chains: A retail company’s pricing system might rely on multiple nested SQL queries that pull data from various tables such as inventory, sales, and discounts. When migrating these queries to a data lake environment, you need to reconstruct the sequence and dependencies of these queries to ensure the pricing system functions correctly.

- Risk of disrupting business-critical data: During migration, a healthcare provider’s patient records system may need to be operational 24/7. Any disruption can lead to incomplete patient records being fetched during critical treatments or medical consultations. Ensuring data availability and integrity during migration is crucial to avoid potential risks to patient care.
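The dependency-chain problem above can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular tool: the table names (`inventory`, `sales`, `discounts`, `net_sales`, `pricing`) are hypothetical stand-ins for the retail example, and the ordering relies on the standard library's `graphlib.TopologicalSorter`.

```python
from graphlib import TopologicalSorter

# Hypothetical pricing pipeline: each key lists the tables it reads from.
dependencies = {
    "inventory": set(),
    "sales": set(),
    "discounts": set(),
    "net_sales": {"sales", "discounts"},
    "pricing": {"inventory", "net_sales"},
}


def migration_order(deps):
    """Return an order in which every table comes after the tables it depends on."""
    # static_order() raises graphlib.CycleError if the dependencies are circular.
    return list(TopologicalSorter(deps).static_order())


order = migration_order(dependencies)
print(order)
```

Migrating and validating models in this order means that every upstream table already exists in the lake before anything that reads from it is rebuilt.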

Despite these hurdles, the allure of data lakes — with their vast scalability and cost efficiency — remains compelling. It’s easy to see why: data lakes allow companies to efficiently manage and analyze vast amounts of diverse data, quickly boosting their ability to make smart decisions. Plus, for any company trying to get into generative AI, data lakes are the biggest game in town for training complex models.

Bridging the SQL dialect gap

Migrating from one platform to another often involves navigating the complexities of SQL dialects. Each platform has its own variant of SQL, requiring more than simple word substitutions to translate your code effectively. When you move complex stored procedures into a modern framework like dbt, it's easy to slip up and introduce frustrating errors.

For example, during a migration, you might notice discrepancies because SQL functions don’t behave the same in every system. Consider date formatting: MySQL provides DATE_FORMAT() with %-style specifiers, while PostgreSQL has no DATE_FORMAT() function and uses TO_CHAR() with its own template patterns instead. If you don’t carefully adjust for these differences when transitioning to dbt, it could lead to reporting errors that adversely affect your business decisions.
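To make the date-formatting gap concrete, here is a small sketch that rewrites a MySQL DATE_FORMAT() format string as a PostgreSQL TO_CHAR() template. The function name `translate_date_format` and the mapping table are illustrative assumptions, and only a handful of common specifiers are covered. Anything outside that subset raises an error rather than being guessed at, which is roughly where simple find-and-replace translation runs out.

```python
# Subset of MySQL DATE_FORMAT specifiers and their PostgreSQL TO_CHAR patterns.
MYSQL_TO_POSTGRES = {
    "%Y": "YYYY",  # four-digit year
    "%m": "MM",    # two-digit month
    "%d": "DD",    # two-digit day of month
    "%H": "HH24",  # hour, 00-23
    "%i": "MI",    # minutes
    "%s": "SS",    # seconds
}


def translate_date_format(mysql_format: str) -> str:
    """Convert a MySQL DATE_FORMAT string into a PostgreSQL TO_CHAR template."""
    out = []
    i = 0
    while i < len(mysql_format):
        ch = mysql_format[i]
        if ch == "%":
            token = mysql_format[i:i + 2]
            if token not in MYSQL_TO_POSTGRES:
                raise ValueError(f"unsupported specifier: {token!r}")
            out.append(MYSQL_TO_POSTGRES[token])
            i += 2
        elif ch.isalpha():
            # Quote literal letters so TO_CHAR does not read them as patterns.
            out.append(f'"{ch}"')
            i += 1
        else:
            out.append(ch)
            i += 1
    return "".join(out)


pattern = translate_date_format("%Y-%m-%d %H:%i:%s")
print(f"TO_CHAR(created_at, '{pattern}')")
```

The column name `created_at` is a placeholder. Even with a correct template, the translated expression should still be validated against real data, since the two functions also differ in areas such as locale handling.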

You can usually address these differences with SQL translators. Unfortunately, most tools struggle with complex queries, forcing you to resort to time-consuming manual corrections. You have to comb through migration scripts line by line to keep an eye on potential misses. Any discrepancies can slow down the migration, erode the trust of your stakeholders, and make you want to bang your head on your desk.

Untangling the web of complex, interconnected data

Some people say data migrations are like moving boxes from one house to another. We think of it more like untangling a massive, interconnected web of relationships, dependencies, and mysteries you didn’t know existed.

Data models, reports, and pipelines all depend on one another. Even a small misstep can ripple downstream. Add to this delicate balance the chaos of poorly named fields and inconsistent logic in legacy frameworks and it’s easy to see why migrations feel overwhelming. Misaligned KPIs, as Rocket Money experienced during their migration, can disrupt operations and erode stakeholder trust. They used Datafold to manage these complexities and successfully finished their project without shedding any tears.

Increasing stakeholder confidence in the move

Earning downstream trust is the bedrock of any successful migration. If your data consumers don’t like or agree with the data they’re getting from the new system, their confidence erodes and slows any momentum you might have had. Without effective validation tools, handling data integration can feel like navigating in the dark — any gaps in data visibility only compound the challenge.

If the data doesn’t validate, the entire transition to a modern platform can grind to a halt, jeopardizing the anticipated benefits. You may find yourself painstakingly combing through data line by line, trying to pinpoint the discrepancies that stall progress and frustrate everyone involved.

For example, consider how Dutchie, a prominent player in the cannabis industry, enhanced stakeholder trust during their migration. After integrating Datafold's Data Diff into their CI/CD workflows, they proactively identified and resolved data quality issues before those issues reached production. Taking a preemptive approach ensured their data was reliable, boosting stakeholder confidence and easing the transition to their new system.

The hidden costs of taking too long

Dragging out a migration drains budgets and holds back growth. Running legacy and modern systems side by side racks up costs, and you can find yourself mired in manual reconciliations rather than advancing high-impact projects. Missed service-level agreements (SLAs), operational inefficiencies, and lagging behind competitors are just a few of the headaches you might experience. Every delay adds up, making the shift to modern infrastructure expensive and incredibly frustrating.

A great example of this challenge is Thumbtack, a local services platform that used Datafold to streamline their migration process and prevent costly delays. Automating their data validation and review allowed Thumbtack to save over 200 hours of workload each month. They significantly cut down on manual testing and the time spent fixing errors, making their migration smoother and more cost-effective.

5 ways Datafold redefines data migration success

If you want a stable, verifiable migration with an on-track schedule, you need to take a look at Datafold. We simplify how you handle data migration, using advanced features to turn challenging processes into streamlined operations. Here’s how Datafold can make a big difference in your approach to data migration.

1. Automate your way to faster migrations

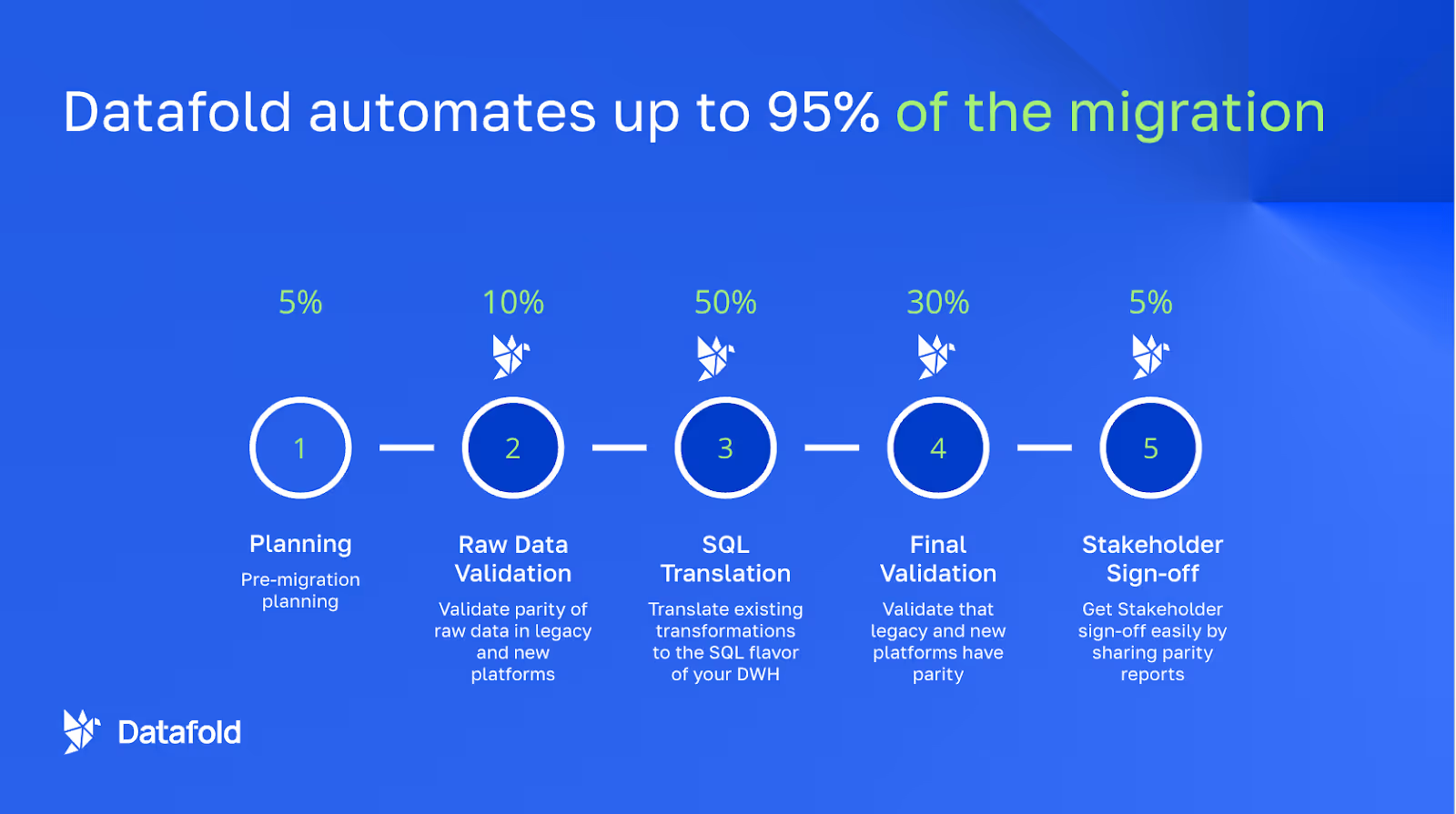

Traditional migrations often involve a lot of manual work, from SQL translation to data validation, a process that can drag on for months or even years. Datafold's AI-driven SQL and code translation intelligently converts SQL scripts across different dialects and frameworks, automatically correcting errors as it goes. Basically, the Datafold Migration Agent does both code translation and validation. If the validation fails, the translating AI fixes the issue and tries again until parity between your legacy data warehouse and new data lake is reached. This feedback loop allows Datafold to achieve exceptional accuracy quickly and on a large scale.
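The translate, validate, and retry pattern described here can be sketched generically. The code below is not Datafold's implementation or API. The function `translate_until_parity` and its `translate` and `validate` callables are hypothetical placeholders that show only the shape of the loop.

```python
from typing import Callable, Optional, Tuple


def translate_until_parity(
    legacy_sql: str,
    translate: Callable[[str, Optional[str]], str],
    validate: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> Tuple[str, int]:
    """Retry translation, feeding validation feedback back in, until parity.

    `translate` takes the legacy SQL plus the previous mismatch report (or None)
    and returns candidate SQL. `validate` returns None when the candidate's
    output matches the legacy output, otherwise a description of the mismatch.
    """
    feedback = None
    for attempt in range(1, max_attempts + 1):
        candidate = translate(legacy_sql, feedback)
        feedback = validate(candidate)
        if feedback is None:
            return candidate, attempt
    raise RuntimeError(f"no parity after {max_attempts} attempts: {feedback}")


# Stub callables standing in for a real translator and a real data comparison.
def fake_translate(sql: str, feedback: Optional[str]) -> str:
    return "SELECT 1 AS fixed" if feedback else "SELECT 1 AS draft"


def fake_validate(candidate: str) -> Optional[str]:
    return None if candidate.endswith("fixed") else "column name mismatch"


result = translate_until_parity("SELECT 1", fake_translate, fake_validate)
print(result)
```

Capping the number of attempts keeps a query that cannot be translated from looping forever, so it can be flagged for manual review instead.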

Automating 95% of the migration workload allows Datafold to shrink what used to be months-long projects into just a few weeks. That makes even multi-petabyte migrations manageable and frees up your team to focus on what really matters: driving business value and innovation.

2. Build stakeholder trust with data validation tools

For your migrations to succeed, you need everyone to trust the accuracy and reliability of your new system. Datafold's Data Migration Agent (DMA) makes this possible by blending AI-driven code translation with thorough, detailed validation using Cross-Database Diffing (XDB).

XDB Diffing digs deep to find even the smallest discrepancies by directly comparing datasets across both legacy and modern systems, ensuring nothing is missed even with billions of rows. Together, DMA and XDB Diffing offer a smooth, integrated solution that manages everything from code translation to validation and reconciliation.
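To illustrate what comparing datasets across two systems involves, here is a minimal key-based diff between two in-memory SQLite databases. It is a teaching sketch under stated assumptions, not the algorithm XDB Diffing uses: the `orders` table, its columns, and the `diff_tables` helper are all hypothetical. It also loads every row into memory, which would not scale to billions of rows.

```python
import sqlite3


def load(rows):
    """Create an in-memory database with a small hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, status TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    return conn


def diff_tables(legacy, modern, table="orders", key="id"):
    """Compare two copies of a table by primary key and report differences."""
    def fetch(conn):
        cur = conn.execute(f"SELECT * FROM {table}")
        columns = [col[0] for col in cur.description]
        key_index = columns.index(key)
        return {row[key_index]: row for row in cur}

    old, new = fetch(legacy), fetch(modern)
    return {
        "missing_in_modern": sorted(old.keys() - new.keys()),
        "extra_in_modern": sorted(new.keys() - old.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


legacy = load([(1, 10.0, "paid"), (2, 25.5, "paid"), (3, 7.0, "refunded")])
modern = load([(1, 10.0, "paid"), (2, 25.0, "paid"), (4, 3.0, "paid")])
report = diff_tables(legacy, modern)
print(report)
```

The report separates rows that were dropped, rows that appeared unexpectedly, and rows whose values drifted. A row count alone would report both tables as identical, since each holds three rows.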

3. No data migration scenario is too unique for Datafold

Migrations take all shapes and sizes. Datafold has no problem with that. From on-prem systems like Oracle to cloud platforms like Snowflake, Datafold adapts to fill your specific needs. It even makes shifting from GUI-based tools like Informatica or Matillion to modular SQL straightforward. Our highly flexible capabilities make it a top choice for modern data teams facing complex migration challenges.

4. Save big: Cost-efficient migrations without compromise

You don’t have to drain your budget with high consulting fees and endless manual work to achieve a successful migration. Datafold cuts those costs without sacrificing accuracy, streamlining the entire process. Beyond the migration itself, Datafold’s tools for ongoing monitoring and data quality provide lasting value, making the platform a reliable investment for your long-term data infrastructure needs.

5. Strategize for success: Proven approaches to optimize migration

The right strategy can make or break a migration. Datafold supports approaches that prioritize speed and efficiency without sacrificing accuracy. One proven method is the lift-and-shift strategy, which transfers data and logic as-is, ensuring quick deployment with minimal disruption. Because it defers redesign until after the move, this approach avoids scope creep and unnecessary delays, allowing you to refine the system after migration when it’s stable. It also reduces risks during major transitions, keeping your project on track.

Real-world examples of seamless migrations

Migrations are often daunting, but with the right tools and strategies, you can manage them smoothly and effectively. Here’s how Datafold has simplified complex migration scenarios for real-world applications.

Migrating from Redshift to Snowflake using Datafold

Moving from Redshift to Snowflake involves addressing different storage architectures, schema changes, and SQL compatibility. Typically, this process requires extensive manual effort to ensure data accuracy. Datafold simplifies this process with automated SQL translation and data parity verification using Cross-Database Diffing (XDB), streamlining the process significantly.

For example, Faire used Datafold’s Data Diff to reduce their SQL testing efforts by 90%, quickly identify issues, and ensure a seamless migration with perfectly aligned datasets.

Transitioning stored procedures to dbt models

Moving stored procedures from legacy systems to modular frameworks like dbt can be time-consuming. Not only are there SQL nuances to account for between legacy and new systems, but adopting a framework like dbt also means adhering to new modeling principles (like macros, models, sources) and learning new best practices. This can be a considerable technical challenge for a data team to overcome in a timely manner.

Ensuring data accuracy during complex schema migrations

Eventbrite faced significant challenges migrating to a modern data stack, dealing with complex schema changes and data accuracy. They used Datafold's Cross-Database Diffing (XDB) to identify discrepancies early, avoiding costly errors. This automation of schema validation and reconciliation enabled a smooth migration, bolstering confidence in the reliability of their new system.

Modernizing transformations from GUI-based tools

GUI-based tools like Informatica often use proprietary XML-based transformation logic, creating migration roadblocks. Datafold excels in converting these workflows into modular SQL code, keeping the data logic intact while adding the flexibility needed for modern data platforms. This capability allows you to escape restrictive interfaces and adopt more open, scalable transformation frameworks, streamlining the entire process.

Each of these examples demonstrates how Datafold promotes seamless transitions across various platforms, reducing the time and complexity involved in migration projects. By automating key processes and addressing critical challenges head-on, Datafold helps companies leverage their data more effectively, ensuring successful migration efforts.

Migrate smarter, not harder with Datafold

Data migrations don’t have to be time-consuming and error-prone. Datafold lets teams tackle even the most complex migration projects with confidence, leveraging automation to reduce manual work, build trust through data validation, and realize significant cost savings. Datafold’s automation turns months-long projects into streamlined efforts that deliver results in weeks.

Modern data lakes like Databricks, Delta Lake, and Apache Iceberg are redefining what’s possible for advanced analytics and machine learning. These platforms provide scalable storage along with features like real-time performance, schema enforcement, and transactional integrity. Migrating to them can be complex, but Datafold helps make the process smoother, so teams can take full advantage of these powerful tools without getting bogged down by the usual challenges.

If you’re ready to transform the way you approach data migrations, explore how Datafold can make your next migration faster, simpler, and more reliable. Book a demo or schedule a consultation to see the impact Datafold can have on your team’s success. Don’t let migration roadblocks slow you down — migrate smarter with Datafold today.
