Three practices to migrate to dbt faster

A data engineer’s guide to migrating quickly, avoiding common pitfalls, and building stakeholder trust with AI-driven solutions

Data teams are almost always involved in a migration at one point in their career. Whether it’s moving from a legacy warehouse to a cloud database, transitioning from stored procedures to dbt, or switching between BI tools, you’ll encounter a migration at some point in your career. But more than 80% of data migrations fail.

Recently, I spoke at Coalesce 2024 to share my vision for how AI can help data teams migrate faster and more efficiently. Through trial, error, and a few hard-won lessons, I’ve developed strong opinions about what actually makes a data migration successful.

These principles are rooted in my own experience–like the time I volunteered to lead a massive data migration at Lyft. I was keen to tackle the challenge head-on but made two major mistakes around the process and tools, which cost us months of delays.

Here’s what I learned about getting it right:

- Always optimize for speed with lift-and-shift

- Success is defined as proving data parity, not just migrated data

- The Data Migration Agent makes automating migrations reality

If you missed the session, check out the video recording or read on for a quick recap.

Prioritize speed with lift-and-shift

As data engineers, we’re often tempted to fix everything at once because migrations feel like a rare chance to tackle technical debt and inefficiencies that build up over time in legacy systems.

I fell into the same trap. I wanted to refine data models, clean up messy code, modernize architectures, optimize data performance, improve consistency, establish more efficient workflows–the list goes on. It felt like the perfect opportunity to create a ‘fresh start’ that would make future work easier and more scalable.

But what you don’t realize until it’s too late to reverse course is that trying to fix everything at once often leads to misaligned priorities, endless scope creep, and frustration from stakeholders who just want to wrap the migration up and move on to using the new system.

This is why I always recommend a "lift-and-shift" approach. Focus on moving your codebase to the new platform as quickly as possible. Speed matters here. Once the migration is done, you’ll have the freedom to address technical debt and other architecture improvements in a less pressured environment. You can turn off your legacy solution sooner, avoiding the costs of running two systems in parallel. More importantly, the migration will no longer be a bottleneck to delivering new value to the business.

Data parity as the key success metric

In my experience, data migrations often get bogged down by too many KPIs that don’t really move the needle. The reality is that a migration isn’t truly successful just because the data has been moved. It’s only successful when stakeholders trust that the migrated data is identical to the original, and data users actually use the new system.

Stakeholders don’t just want data moved; they want data they can trust. This is a really subtle but important distinction; if you can’t convince your stakeholders that there’s data parity between the legacy and new system, they won’t adopt it.

But validating data is incredibly tedious and time-consuming. How do you compare datasets across systems with different data structures, types, and storage formats? You can’t rely on simple row counts to catch discrepancies like mismatched field values.

This is where using a tool like Data Diff that can validate data at the row-level becomes critically important. With cross-database data diffing, you can validate parity with precision, just like using git diff for code changes. Datafold’s Data Diff compares data between source and target databases and identifies any discrepancies at the most granular level possible. This level of data validation is what ensures true data parity, giving stakeholders the confidence they need to adopt the new system and allowing you to turn off the old one.

Automate with the Data Migration Agent

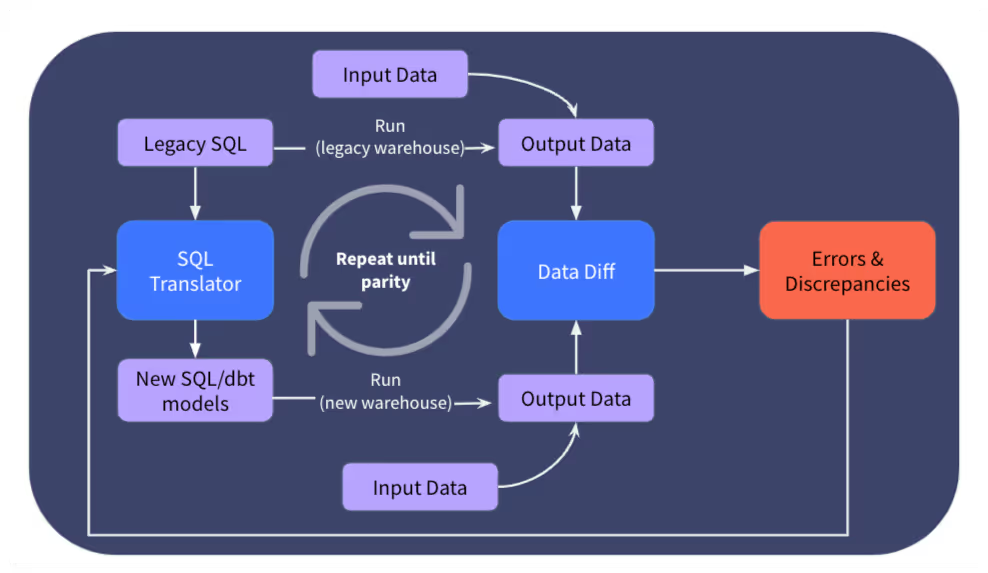

To automate data migrations, you need a solution that can tackle the two trickiest bits of the process: code translation and data reconciliation. Recent AI advancements have made this achievable by handling these complex tasks more efficiently.

But you can’t just throw an LLM at the problem. While LLMs can generate code, they lack the contextual understanding and verification needed to ensure data parity and accuracy across complex systems. Many migrations involve comparing data across different database platforms, each with unique data types, hashing functions, and storage procedures, which LLMs alone cannot fully handle.

LLMs are also prone to "hallucinations"–they may produce code that appears correct but doesn’t fully match the functionality of the legacy system.

With our fully automated Data Migration Agent (DMA), Datafold makes automated migrations a reality. DMA combines AI-driven code translation with an iterative validation process, powered by tools like Data Diff for cross-database diffing, to systematically verify data parity between legacy and new systems. With DMA, you get a comprehensive solution that handles code translation, validation, and reconciliation in one seamless process.

The agent is designed to catch and correct any discrepancies, ensuring that every detail of the data is accurate before finalizing the migration. This cohesive workflow allows teams to focus less on manual checks and more on strategic aspects of the migration, reducing overall project time and eliminating common errors.

One of America’s largest healthcare staffing firms, CHG, used this strategy to cut their MySQL-to-Snowflake migration time from 18 months to 3 months. With the AI-powered Datafold Migration Agent, they reduced costs by 80% while ensuring perfect data parity across 1,000+ custom queries.

A fully automated agent like DMA makes migrations far more efficient and error-free, freeing your data team to focus on delivering value instead of battling migration roadblocks. To learn more about how it works, check out our docs.

Final thoughts

The true cost of a migration isn’t just the direct engineering hours—it’s the opportunity cost of delaying high-impact projects and tying up valuable team capacity. Every hour spent on migration is an hour not spent on projects that drive growth and innovation.

By prioritizing speed with a lift-and-shift approach, focusing on data parity as your main KPI, and using AI-powered tools like the Data Migration Agent, you can keep these hidden costs to a minimum. This approach doesn’t just save time and resources; it frees up your team to move on to the next big thing.

If you’re ready to get started with converting and validating your migration to dbt, we’re here to help. We can provide a tailored assessment to show how quickly Datafold’s migration solution can translate and validate your data with accuracy. We’ll also outline the potential time and cost savings, so you have everything you need to make a compelling case to your stakeholders. Book a time with our team to kick off your evaluation today.

.avif)