9 Best Tools for Data Quality in 2024

Data quality is increasingly a top KPI for data teams, even as the growing number of data sources makes quality and reliability harder to maintain. The tools below can help at every step of the pipeline.

Choosing the right data quality tool is difficult because it depends on many factors, such as your organization’s data complexity, integration needs, budget, and long-term scalability. There is no single best data quality tool: the right choice varies with each organization's data infrastructure, team expertise, industry regulations, and business goals.

Some companies may prioritize tools that excel in real-time monitoring and data observability, while others might need strong data governance, lineage tracking, or automated testing capabilities. Factors like ease of integration with existing systems, scalability, and budget constraints play a significant role in determining which data quality software is the best fit for a particular use case.

We believe achieving data quality is a multi-step process that involves data quality checks at several points along your pipeline. While perfect data quality is not achievable, some tools in the modern data stack can help you improve your organization's data quality.

Our updated guide for 2024 looks at nine of the best tools across five data quality use cases:

- Data transformation: for data cleaning, merging, and testing.

- Data catalog: for organizing and discovering metadata.

- Instrumentation management: for ensuring accurate event tracking and data instrumentation.

- Data observability: to monitor and detect data quality issues.

- Machine learning: for monitoring data input and model performance.

The data quality toolkit

The sheer volume of data streaming in from various sources makes high data quality hard to maintain during analysis. In a survey of data professionals by Dimensional Research, 90 percent said that “numerous unreliable data sources” slowed their work. Meanwhile, our State of Data Quality in 2021 survey found that data quality is the top KPI for data teams, underscoring how crucial it is for organizations striving to be data-driven.

Ensuring high data quality becomes even more challenging when dealing with issues like inaccuracies, complex data structures, inconsistent data types, duplicates, and poorly labeled information. These problems often multiply as data pipelines grow, causing downstream effects that hinder decision-making processes across business units. Despite these challenges, achieving high-quality data is not a luxury—it's a necessity for any organization looking to gain actionable insights from their data.

In Data Science Principles, Harvard Professor Dustin Tingley notes that "to transform data to actionable information, you first need to evaluate its quality." Poor data quality not only undermines analytics but also erodes the strategic value of data initiatives. Without reliable data, organizations risk making faulty decisions based on erroneous insights, which can lead to inefficiencies, customer dissatisfaction, or even regulatory non-compliance.

The solution to these challenges lies in building a robust data quality toolkit—a set of software tools and methodologies designed to ensure accuracy, consistency, and reliability across your data ecosystem. These tools span various stages of the data lifecycle, from data ingestion and transformation to data observability and machine learning model validation.

Each data quality tool serves a unique purpose in ensuring that data remains accurate, traceable, and usable for decision-making. Whether through data cataloging, instrumentation management, or data version control, the toolkit supports data teams in proactively monitoring, testing, and validating data at every step.

Data transformation tools

Data transformation is the "T" in extract, transform, load (ETL) or extract, load, transform (ELT). It is the stage where businesses clean, merge, and aggregate raw data into tables that data scientists and analysts later use. Strictly speaking, data transformation tools are not data quality tools. Still, because transformation is the central piece of any data platform, the choice of transformation framework can strongly influence data quality. The best transformation frameworks come with built-in data testing features and encourage sustainable development patterns.

1. dbt

dbt (data build tool) empowers data analysts to own the analytics engineering process, from transforming and modeling data to deploying code and generating documentation. dbt eases the data transformation workflow, making data accessible to every department in an organization.

How dbt improves data quality: As a data transformation tool, dbt supports version-controlled source code, separates development and production environments, and makes documentation easy. It also comes with a built-in testing framework that helps you build testable, reliable data products from the ground up. dbt's native tests quickly flag when the data in your environment doesn't match expectations.

Through these functions, dbt protects your data applications from potential landmines such as null values, unexpected duplicates, incorrect references, and incompatible formats. dbt tests can be incorporated into your continuous integration and deployment process and also run in production to prevent bad data from being published to your stakeholders.
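dbt's built-in generic tests (`not_null`, `unique`, `accepted_values`, and `relationships`) work by compiling to a query that selects the rows violating the expectation; the test passes when that query returns nothing. The Python sketch below imitates that pattern with `sqlite3` so it runs without a warehouse. The `orders` and `customers` tables, their rows, and the hand-written SQL are hypothetical and only approximate what dbt generates. In a real project you would declare these tests in a model's YAML properties file and run `dbt test`.

```python
import sqlite3

# Hypothetical tables standing in for two dbt models.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER);
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, status TEXT);
    INSERT INTO customers VALUES (1), (2);
    INSERT INTO orders VALUES
        (10, 1, 'shipped'),
        (11, 2, 'placed'),
        (11, 2, 'placed'),     -- duplicate primary key
        (12, NULL, 'placed'),  -- missing foreign key
        (13, 3, 'lost');       -- unknown customer and unexpected status
""")

# Each test selects the failing rows; an empty result means the test passes.
TESTS = {
    "not_null_orders_customer_id":
        "SELECT * FROM orders WHERE customer_id IS NULL",
    "unique_orders_order_id":
        "SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1",
    "accepted_values_orders_status":
        "SELECT * FROM orders WHERE status NOT IN ('placed', 'shipped', 'delivered')",
    "relationships_orders_customer_id":
        "SELECT o.* FROM orders AS o "
        "LEFT JOIN customers AS c ON o.customer_id = c.customer_id "
        "WHERE o.customer_id IS NOT NULL AND c.customer_id IS NULL",
}

def run_tests(conn, tests):
    """Return {test name: number of failing rows}."""
    return {name: len(conn.execute(sql).fetchall()) for name, sql in tests.items()}

results = run_tests(conn, TESTS)
for name, failures in results.items():
    status = "PASS" if failures == 0 else "FAIL"
    print(f"{status} {name}: {failures} failing row(s)")

# In CI, any failure would block the deploy, for example:
# raise SystemExit(1 if any(results.values()) else 0)
```

Here every test fails on exactly one row, which is the kind of signal you want before the model is published to stakeholders.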

2. Dagster

Dagster is an open-source data orchestration framework for ETL, ELT, and ML pipelines. It lets you define data pipelines that can be tested locally and deployed anywhere, and it models the data dependencies at every step of your orchestration graph. Dagster's rich UI makes debugging pipelines easy, and it is a go-to tool for building reliable data applications. In a video, Dagster CEO Nick Schrock explains how "Dagster manages and orchestrates the graphs of computations that comprise a data application."

How Dagster improves data quality: Dagster lets you define data dependencies between tasks (unlike Airflow, the mother of all modern ETL orchestrators), which greatly helps ensure reliability. And just as dbt, being SQL-centric, runs its tests in SQL, Dagster offers a Python API for defining tests inside data pipelines.
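To illustrate why declared data dependencies matter, here is a tiny, hypothetical orchestrator in plain Python; it is not Dagster's API. Each function is an asset that names the assets it consumes, checks run right after an asset is computed, and anything downstream of a failed check is skipped instead of being built on bad data. The `asset` decorator, `materialize_all`, and the example assets are all made up for this sketch; Dagster itself expresses the same ideas through its own asset and asset-check APIs.

```python
from graphlib import TopologicalSorter

# Hypothetical registry: asset name -> function, upstream dependencies, checks.
ASSETS = {}

def asset(deps=(), checks=()):
    """Register a function as an asset with declared data dependencies."""
    def register(fn):
        ASSETS[fn.__name__] = {"fn": fn, "deps": tuple(deps), "checks": tuple(checks)}
        return fn
    return register

def no_negative_amounts(rows):
    return all(row["amount"] >= 0 for row in rows)

@asset()
def raw_orders():
    return [{"id": 1, "amount": 20.0}, {"id": 2, "amount": -5.0}]

@asset(deps=["raw_orders"], checks=[no_negative_amounts])
def clean_orders(orders):
    # Bug: drops missing amounts but forgets to exclude negative refunds.
    return [row for row in orders if row["amount"] is not None]

@asset(deps=["clean_orders"])
def revenue(orders):
    return sum(row["amount"] for row in orders)

def materialize_all(assets):
    """Build assets in dependency order; skip anything downstream of a failure."""
    graph = {name: spec["deps"] for name, spec in assets.items()}
    built, failed = {}, []
    for name in TopologicalSorter(graph).static_order():
        spec = assets[name]
        if any(dep not in built for dep in spec["deps"]):
            failed.append(name)  # an upstream asset failed or was skipped
            continue
        value = spec["fn"](*(built[dep] for dep in spec["deps"]))
        if all(check(value) for check in spec["checks"]):
            built[name] = value
        else:
            failed.append(name)  # check failed: do not publish this asset
    return built, failed

built, failed = materialize_all(ASSETS)
print(list(built))  # ['raw_orders']
print(failed)       # ['clean_orders', 'revenue']
```

Because `revenue` declares that it depends on `clean_orders`, the failed check stops the bad refund row from ever reaching the revenue number.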

Data catalog tools

A data catalog is an organized inventory of an organization’s metadata that offers search and discovery. Finding the right data source and confirming it can be trusted is often challenging. Mark Grover, co-founder and CEO of Stemma, describes the knowledge gap between data producers and data consumers as “the biggest gap in data-driven organizations”. Data catalogs improve data discovery through Google-like search, boost trust in data, and facilitate data governance. They make it easy for everyone on the team to access, distribute, and search for data.

3. Amundsen

Amundsen is a data discovery and metadata platform with a lightweight catalog and search UI originally developed at Lyft and written primarily in Python. The architecture includes a frontend service, search service, metadata service, and a data builder.

4. DataHub

DataHub is also an open-source metadata platform, originally developed at LinkedIn. Its architecture differs from Amundsen's: at its center is a real-time metadata stream powered by Apache Kafka, surrounded by a metadata service and index appliers written in Java, an ingestion framework written in Python, and a no-code, annotation-based approach to metadata modeling. With its emphasis on free-flowing metadata, DataHub lets downstream applications integrate through real-time, strongly typed metadata change events.

How data catalogs improve data quality: In a world where tens of thousands of tables and millions of columns in a data warehouse are not uncommon, companies cannot expect data scientists and analysts to simply know which data to use and how to use it correctly. Data practitioners need catalogs with data profiling to find data they can trust. While data catalogs typically don't validate or manage the data themselves, they play an important role in ensuring that data users base their decisions on trusted, high-quality data.
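Stripped to its essentials, a catalog ingests metadata from many systems and makes it searchable, ideally alongside profiling statistics that help users judge whether a table can be trusted. The toy Python catalog below sketches that idea. The `TableMetadata` fields, the `profile` helper, the scoring weights, and the table names are all hypothetical and far simpler than what Amundsen or DataHub actually do.

```python
from dataclasses import dataclass, field

@dataclass
class TableMetadata:
    name: str
    description: str
    owner: str
    tags: set[str] = field(default_factory=set)
    null_fraction: dict[str, float] = field(default_factory=dict)  # column -> share of NULLs

def profile(rows, columns):
    """Compute the fraction of NULL values per column from a sample of rows."""
    n = len(rows) or 1  # avoid dividing by zero on an empty sample
    return {col: sum(row.get(col) is None for row in rows) / n for col in columns}

class Catalog:
    """Toy in-memory catalog: ingest metadata records, then search them."""

    def __init__(self):
        self.tables: dict[str, TableMetadata] = {}

    def ingest(self, meta: TableMetadata) -> None:
        # A newer record for the same table replaces the old one.
        self.tables[meta.name] = meta

    def search(self, term: str) -> list[str]:
        """Rank tables: name match > tag match > description match."""
        term = term.lower()
        hits = []
        for meta in self.tables.values():
            score = (3 * (term in meta.name.lower())
                     + 2 * (term in {tag.lower() for tag in meta.tags})
                     + 1 * (term in meta.description.lower()))
            if score:
                hits.append((-score, meta.name))
        return [name for _, name in sorted(hits)]

catalog = Catalog()
sample = [{"user_id": 1, "email": None}, {"user_id": 2, "email": "a@example.com"}]
catalog.ingest(TableMetadata(
    "analytics.users", "One row per registered user", "data-eng",
    {"users", "pii"}, profile(sample, ["user_id", "email"])))
catalog.ingest(TableMetadata(
    "staging.events", "Raw click events, including anonymous users", "platform",
    {"events"}))

print(catalog.search("users"))  # ['analytics.users', 'staging.events']
print(catalog.tables["analytics.users"].null_fraction)  # {'user_id': 0.0, 'email': 0.5}
```

The profile attached to each entry is what turns search results into a trust signal: an analyst can see that half of the sampled `email` values are missing before building on that column.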

Instrumentation management tools

Most analytical data comes from instrumented events: messages that capture relevant things happening in your business, such as a user clicking a button or an order being scheduled for delivery. The larger and more complex the business, the more varied the events it needs to track, which often leads to an unmanageable mess of raw data.

To keep instrumentation organized, product, analytics, and engineering teams create shared tracking plans that define what, why, where, and how events are tracked. These plans often live in spreadsheets, which are hard to manage and even harder to validate data against at scale. Luckily, specialized tools automate the process, from defining events and properties to validating them in development and production, helping you ensure data quality from the very beginning of the data value chain.
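At its core, automated tracking-plan validation compares every event against its definition before the event reaches the warehouse. The Python sketch below assumes a hypothetical tracking plan expressed as a dictionary mapping event names to required property types; dedicated tools manage the plan collaboratively and validate through their own SDKs, which this sketch does not attempt to reproduce.

```python
# Hypothetical tracking plan: event name -> required properties and their types.
TRACKING_PLAN = {
    "Button Clicked": {"button_id": str, "page": str},
    "Order Scheduled": {"order_id": str, "delivery_date": str, "item_count": int},
}

def validate_event(event: dict) -> list[str]:
    """Return a list of problems; an empty list means the event matches the plan."""
    name = event.get("event")
    props = event.get("properties", {})
    spec = TRACKING_PLAN.get(name)
    if spec is None:
        return [f"unknown event {name!r}"]
    problems = []
    for prop, expected_type in spec.items():
        if prop not in props:
            problems.append(f"{name}: missing property {prop!r}")
        elif not isinstance(props[prop], expected_type):
            problems.append(f"{name}: {prop!r} should be {expected_type.__name__}, "
                            f"got {type(props[prop]).__name__}")
    for prop in sorted(props.keys() - spec.keys()):
        problems.append(f"{name}: unexpected property {prop!r}")
    return problems

good = {"event": "Button Clicked", "properties": {"button_id": "checkout", "page": "/cart"}}
bad = {"event": "Order Scheduled",
       "properties": {"order_id": "A-17", "item_count": "3", "coupon": "SPRING"}}

print(validate_event(good))  # []
for problem in validate_event(bad):
    print(problem)
# Order Scheduled: missing property 'delivery_date'
# Order Scheduled: 'item_count' should be int, got str
# Order Scheduled: unexpected property 'coupon'
```

Running a check like this over sample events in a CI job, and failing the build when it reports problems, is one way to catch instrumentation bugs before they ship.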

5. Avo

Avo is a collaborative analytics governance tool for product managers, developers, engineers, and data scientists. Avo describes itself as "a platform and an inspector that enables different teams to collaborate and ship products faster without compromising data quality."

6. Amplitude

Amplitude allows data and product teams to track high-quality data by providing automated governance tools, real-time data validation, proactive monitoring, and identity resolution.

How they improve data quality: Once bad data enters the warehouse, it quickly cascades through numerous pipelines and becomes hard to clean up. Making sure that raw events are clearly defined and tested, and that change management follows a structured process, is a very effective way to improve data quality throughout the entire stack. Through collaborative tracking plans, instrumentation SDKs, and validation that can be embedded in the CI/CD process, both Avo and Amplitude help ensure the quality of analytical instrumentation.

Data observability tools

As the modern data stack becomes increasingly complex, organizations need a way to manage the complexity of their data infrastructure and observe the quality and availability of data assets such as tables, business intelligence (BI) reports, and dashboards. Data observability refers to monitoring, tracking, and detecting issues with data to avoid data downtime. Monitoring is vital because it keeps you informed about the health of the data in your organization.

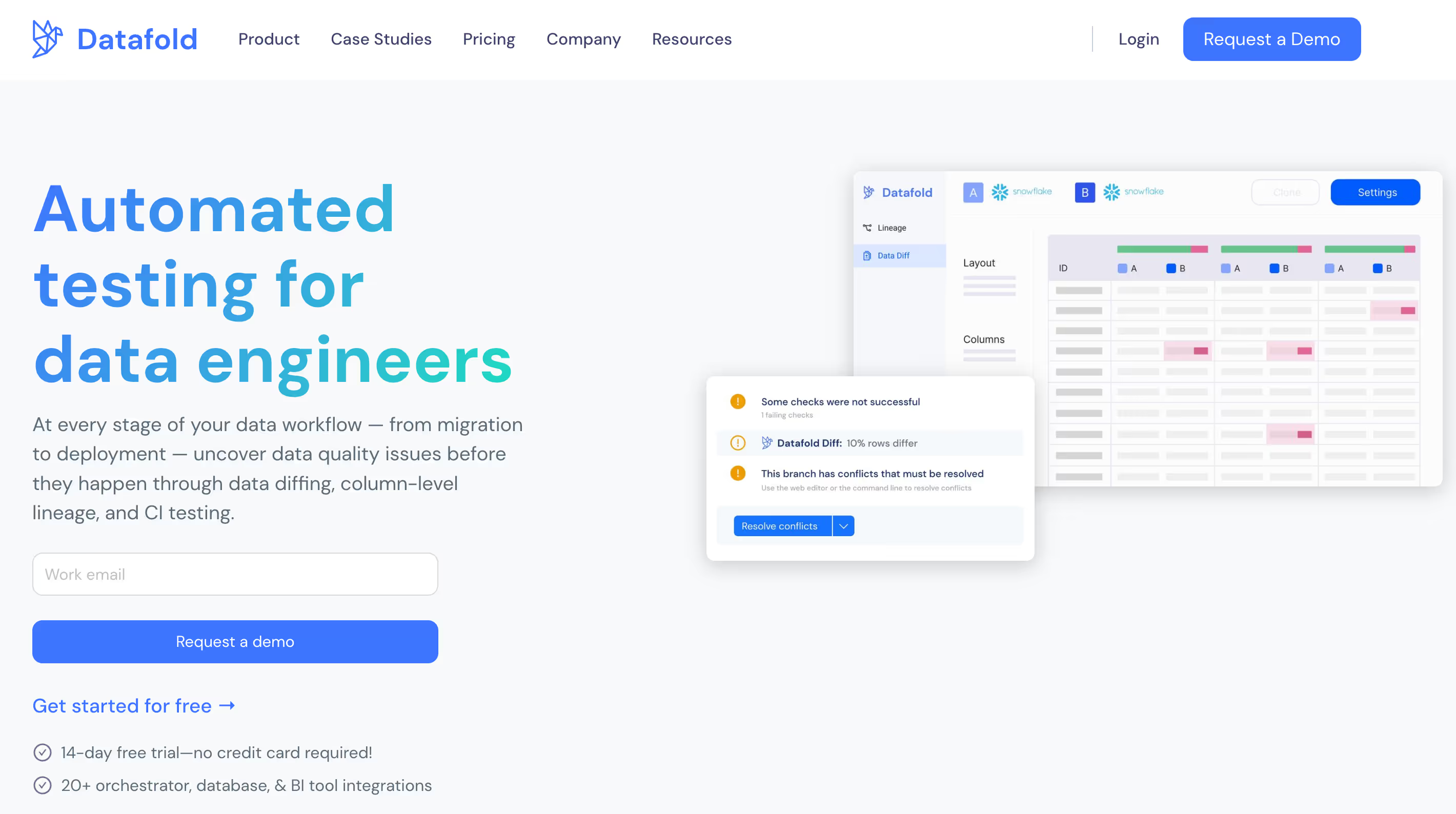

7. Datafold

Datafold is a proactive data quality platform that combines automated data testing and observability to help data teams prevent data quality issues and accelerate their development velocity. Beyond helping ensure better data quality, Datafold automates mundane tasks for data developers, so data teams can focus on truly creative work and ship data products faster with high confidence in their data.

Datafold integrates deeply into the development cycle to stop bad code from being deployed. By detecting data regressions before corrupted data reaches production, it lets data teams prevent data quality issues instead of merely reacting to them. To do this, Datafold integrates with ELT frameworks such as dbt and automates testing as part of the CI/CD process, acting as a gatekeeper.

How Datafold improves data quality:

Value-level Data Diff automates regression testing of any change to SQL code by showing, in detail, how every code change affects the resulting data. As an ETL codebase grows into thousands of lines of code with sprawling dependencies, testing even the slightest change can require hours of manual validation. Data Diff can automate regression testing for code changes, code reviews, data transfer validation, and ETL migrations.
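To make the idea of a value-level diff concrete, here is a minimal Python version: it matches rows from two versions of a table on a primary key and reports rows that were added, removed, or changed, column by column. The `prod` and `dev` tables are made up, and this in-memory approach is only a sketch, not Datafold's implementation. Comparing warehouse-sized tables requires pushing the comparison into the database (for example, by comparing checksums of key ranges) rather than loading every row.

```python
def data_diff(before: list[dict], after: list[dict], key: str) -> dict:
    """Value-level diff of two versions of a table, matched on a primary key."""
    old = {row[key]: row for row in before}
    new = {row[key]: row for row in after}
    diff = {
        "removed": sorted(old.keys() - new.keys()),
        "added": sorted(new.keys() - old.keys()),
        "changed": {},
    }
    for k in sorted(old.keys() & new.keys()):
        # Compare every column present in either version of the row.
        columns = sorted(c for c in old[k].keys() | new[k].keys()
                         if old[k].get(c) != new[k].get(c))
        if columns:
            diff["changed"][k] = {c: (old[k].get(c), new[k].get(c)) for c in columns}
    return diff

# Hypothetical production table vs. the same table built from a changed SQL model.
prod = [{"id": 1, "revenue": 100, "country": "US"},
        {"id": 2, "revenue": 250, "country": "DE"},
        {"id": 3, "revenue": 80, "country": "FR"}]
dev = [{"id": 1, "revenue": 100, "country": "US"},
       {"id": 2, "revenue": 225, "country": "DE"},
       {"id": 4, "revenue": 60, "country": "US"}]

print(data_diff(prod, dev, key="id"))
# {'removed': [3], 'added': [4], 'changed': {2: {'revenue': (250, 225)}}}
```

An empty diff means the code change did not alter the data; anything else is exactly what a reviewer should look at before merging.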

Column-level data lineage lets data users quickly trace dependencies between tables, columns, and other data products such as BI dashboards and ML models. Understanding data flows at such a granular level greatly simplifies change management, helps avoid breaking things for downstream data users, and speeds up incident resolution.
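Column-level lineage is essentially a directed graph from source columns to everything derived from them, so impact analysis becomes a graph traversal. The sketch below uses a hand-written, hypothetical lineage map; in practice a tool would derive these edges by parsing the SQL that builds each table.

```python
from collections import deque

# Hypothetical column-level lineage: each node maps to the nodes derived from it.
LINEAGE = {
    "raw.orders.amount": ["stg.orders.amount_usd"],
    "raw.fx.rate": ["stg.orders.amount_usd"],
    "stg.orders.amount_usd": ["mart.revenue.daily_total", "ml.churn.avg_order_value"],
    "mart.revenue.daily_total": ["dashboard:Executive KPIs"],
}

def downstream(column: str, lineage: dict[str, list[str]]) -> list[str]:
    """Breadth-first search for everything that depends on `column`."""
    seen, queue = set(), deque([column])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)

print(downstream("raw.fx.rate", LINEAGE))
# ['dashboard:Executive KPIs', 'mart.revenue.daily_total',
#  'ml.churn.avg_order_value', 'stg.orders.amount_usd']
```

Asking this question before changing `raw.fx.rate` shows that an executive dashboard and an ML feature would both be affected.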

Monitors help detect unexpected changes and address issues early. Datafold can track any SQL-defined metric with an ML model that learns the metric's typical behavior (even if it's very noisy) and alerts relevant stakeholders over email, Slack, PagerDuty, or other channels. With one of our four monitor types, data teams can easily detect issues such as an abnormal number of rows appended to a dataset or an increase in NULL values in an important column.
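An ML-based monitor is beyond the scope of a short example, so the sketch below swaps in a simpler, classic technique: a robust z-score built from the median and the median absolute deviation (MAD), which tolerates some noise in the metric's history. It flags an abnormal daily row count. The threshold of 3.5 is a common rule of thumb, not a value taken from Datafold, and the row counts are made up.

```python
import statistics

def is_anomalous(history: list[float], value: float, threshold: float = 3.5) -> bool:
    """Flag `value` if its robust z-score against `history` exceeds `threshold`.

    The median and MAD are used instead of mean and standard deviation so that
    a few noisy points in the history do not distort the baseline.
    """
    median = statistics.median(history)
    mad = statistics.median(abs(x - median) for x in history)
    if mad == 0:  # perfectly flat history: any change is unusual
        return value != median
    robust_z = 0.6745 * (value - median) / mad
    return abs(robust_z) > threshold

# Hypothetical number of rows appended per day over the past week.
rows_appended = [1020, 980, 1005, 995, 1010, 990, 1000]

print(is_anomalous(rows_appended, 1015))  # False: within normal variation
print(is_anomalous(rows_appended, 120))   # True: a likely partial load
```

The same function works for any SQL-defined metric, such as the share of NULLs in a key column, as long as you keep a history of its values.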

Data quality in machine learning tools

No matter how advanced and sophisticated a machine learning model is, its effectiveness and accuracy depend heavily on the quality of its input data. In an article for Harvard Business Review, Thomas Redman describes how bad data can render machine learning tools useless. Monitoring the quality of input data alongside model performance is therefore an integral part of deploying any production ML system.

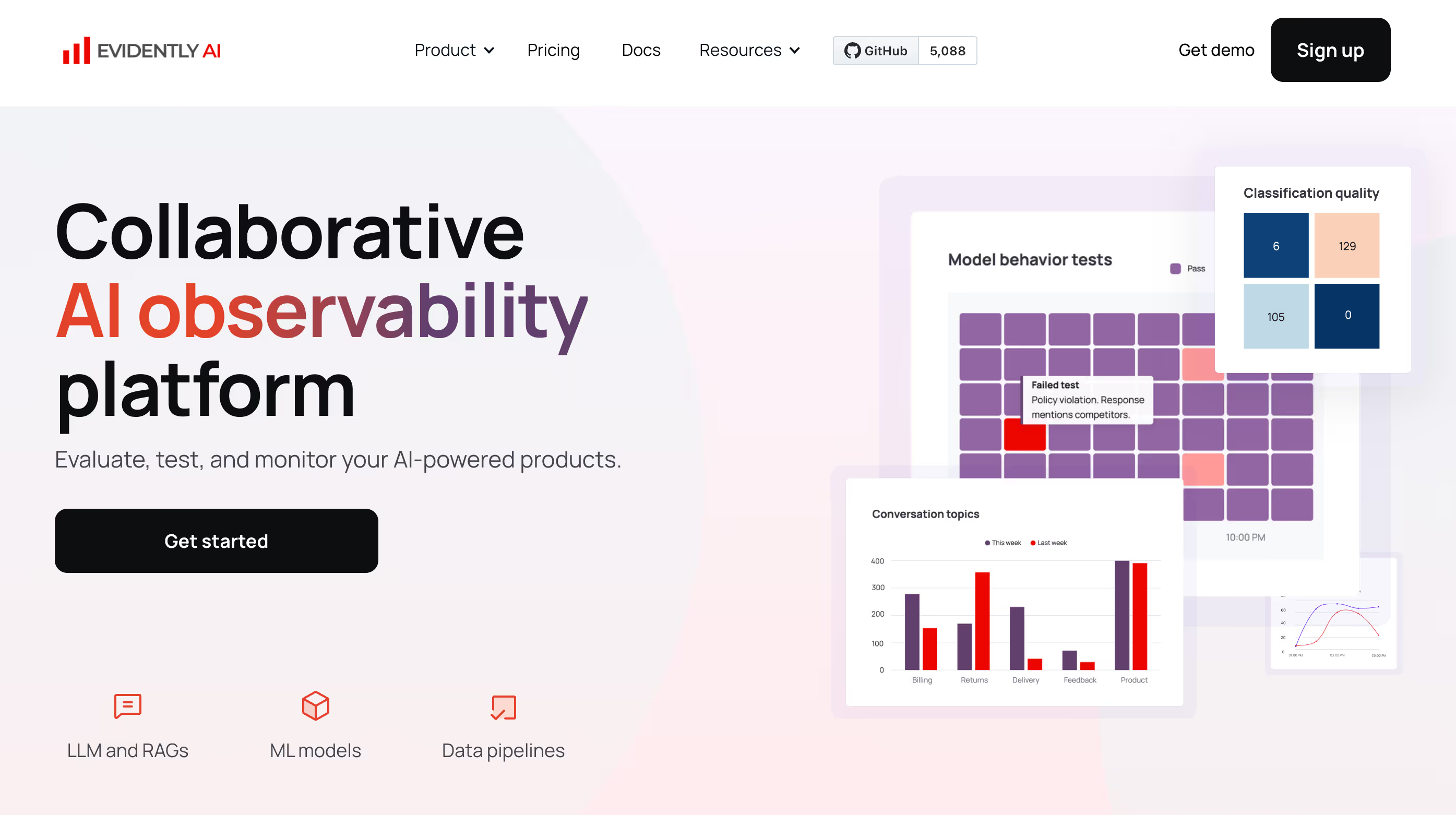

8. Evidently

Evidently is an open-source machine learning monitoring tool that analyzes models during validation and production and generates interactive visual reports. Its core concept is comparing two datasets, a reference dataset and a current dataset, to produce a performance report. Evidently offers six report types for ML models: data drift, numerical target drift, categorical target drift, regression model performance, classification model performance, and probabilistic classification model performance.

How Evidently improves data quality: When loading data into a machine learning model, many issues can occur, including lost data, data processing problems, and schema changes. It is vital to catch these issues early; otherwise you end up dealing with failures in production. For example, if feature values change or the model decays, the data drift report detects changes in feature distributions using statistical tests and presents them in interactive visual reports. Evidently also lets you summarize and understand the quality of your classification models.
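Drift detection of this kind compares a feature's distribution in current data with a reference sample. To illustrate one common statistical test for numerical features, the sketch below hand-rolls the two-sample Kolmogorov-Smirnov (KS) test in plain Python; it is not Evidently's API, which chooses and runs such tests for you. The critical value uses the standard large-sample approximation at a 5% significance level, and the feature values are synthetic.

```python
import math

def ks_statistic(reference: list[float], current: list[float]) -> float:
    """Largest vertical gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(ref[i], cur[j])
        # Advance past every value <= x so i/n and j/m are the ECDFs at x.
        while i < n and ref[i] <= x:
            i += 1
        while j < m and cur[j] <= x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d

def has_drifted(reference, current, c_alpha: float = 1.358) -> bool:
    """Two-sample KS test; c_alpha = 1.358 corresponds to a 5% significance level."""
    n, m = len(reference), len(current)
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical

# Synthetic feature values: a reference window and two "current" windows.
reference = [i / 100 for i in range(100)]
slightly_jittered = [x + 0.005 for x in reference]
shifted = [x + 0.3 for x in reference]

print(has_drifted(reference, slightly_jittered))  # False
print(has_drifted(reference, shifted))            # True
```

With 100 values on each side the critical gap is about 0.19, so the small jitter (a gap of 0.01) passes while the 0.3 shift is flagged as drift.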

9. Data Version Control

DVC is an open-source version control system for tracking ML models, feature sets, and associated performance metrics through a Git-like interface. DVC works with any Git repository or Git provider, such as GitHub or GitLab, and stores data with cloud storage providers such as Amazon S3 and Google Cloud Storage. It also supports low-friction branching, metric tracking, and a built-in ML pipeline framework.

How DVC improves data quality: Just as version control in software development leads to a better codebase, versioning your models and datasets enables reproducibility and collaboration. It also makes it easy to modify data, revert errors, and fix bugs. DVC Studio lets you analyze Git history by extracting information about your ML experiments' datasets and metrics. With DVC, you can also track failures in your models in a reproducible way.
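The mechanism behind this kind of reproducibility is content-addressed storage: data files are stored under a hash of their contents (this sketch uses MD5), and only a small pointer containing that hash is committed to Git. The Python sketch below illustrates the idea with hypothetical `snapshot` and `restore` helpers; it is not DVC's code or cache layout. With DVC itself you would use commands such as `dvc add`, `dvc push`, and `dvc checkout`.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def snapshot(data_file: Path, cache: Path) -> dict:
    """Copy data_file into a content-addressed cache; return a pointer record.

    Only the small pointer (not the data) would be committed to Git.
    """
    digest = hashlib.md5(data_file.read_bytes()).hexdigest()
    target = cache / digest[:2] / digest[2:]
    if not target.exists():  # identical content is stored only once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copyfile(data_file, target)
    return {"path": data_file.name, "md5": digest}

def restore(pointer: dict, cache: Path, workdir: Path) -> None:
    """Restore the exact file version a pointer refers to."""
    digest = pointer["md5"]
    shutil.copyfile(cache / digest[:2] / digest[2:], workdir / pointer["path"])

with tempfile.TemporaryDirectory() as tmp:
    workdir, cache = Path(tmp), Path(tmp) / ".cache"
    features = workdir / "features.csv"

    features.write_text("user_id,clicks\n1,10\n2,7\n")
    v1 = snapshot(features, cache)  # pointer committed with the first model
    features.write_text("user_id,clicks\n1,10\n2,-7\n")  # a bad upstream change
    v2 = snapshot(features, cache)

    restore(v1, cache, workdir)  # roll back to the known-good version
    restored_ok = features.read_text() == "user_id,clicks\n1,10\n2,7\n"

print(v1["md5"] != v2["md5"], restored_ok)  # True True
```

Because each model version points at an exact hash, you can always recover the precise data it was trained on, which is what makes failures reproducible.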

Choose data quality tools based on your use case

Data quality, much like software quality, is such a multidimensional and complex problem that no single tool can solve it for an organization. Instead of looking for a unicorn tool that does (or, more likely, pretends to do) “everything,” start with a targeted approach: spell out the specific issues, for example “people break data when making changes to SQL code” or “ingestion from our data vendor is unreliable,” and then look for specialized solutions that solve each problem well.

Datafold helps your team stay on top of data quality by providing data diff, lineage, profiling, catalog, and anomaly detection. This helps you stay proactive about potential issues with data integration from multiple sources while also eliminating breaking changes by automating regression testing for ETL and BI applications.

Many testing techniques require a significant time investment to reach good test coverage, and coverage is always a moving target. Datafold is the only solution that allows you to go from 0 to 100% test coverage in a day. As a result, you'll build trust with business users and demonstrate how your data management practices deliver reliable data.

If you're interested in learning more about data quality tooling and how Datafold’s data diffing can help your team proactively detect bad code before it breaks production data:

- Book time with one of our solutions engineers to learn more about Datafold and which data quality tools make sense for your specific data environment and infrastructure.

- If you're ready to get started, sign up for a 14-day free trial of Datafold to explore how data diffing can improve your data quality practices within, and beyond, data teams.
