Code review best practices for Analytics Engineers

Code review health is something teams should monitor and iterate on over time. Learn the best strategies for data teams to improve their code review process.

Code review is a quality assurance process in which your teammates read and evaluate code. In the data world this also means evaluating the impact of the code on the underlying and downstream data.

While this process is necessary to prevent errors from happening, teammates will skip code review for a number of reasons:

- 🤔 Lack of context.

- 😳 Insecurity around providing feedback.

- 😅 Just too busy!

You may add screenshots, data test results, and comments to help the reviewer understand the goal of the change and the impact to the data, but this is a lot of manual work.

Luckily there are best practices that automate the mundane parts of your data team's code review process:

- 👭 Auto assignment of code review

- 🧹 Implement a SQL linter

- ✅ Data Diff in CI

Auto assignment of code review

“When everyone owns something, nobody owns it, and nobody has a direct interest in maintaining or improving it’s condition” - Abraham Lincoln

Code review culture is one of those shared resources that is never quite anyone’s top priority, but can be critical to a team’s success. In practice, the burden of code review can fall disproportionately on the shoulders of a few overachievers, or even worse, it might be neglected entirely.

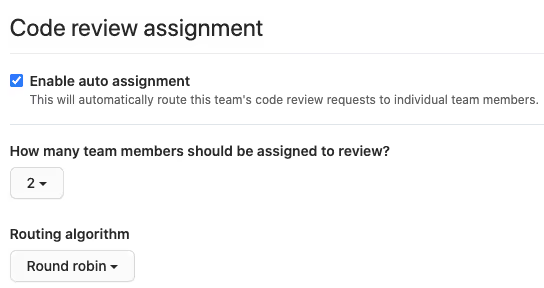

GitHub offers an auto assignment feature that can be used to automatically tag reviewers on pull requests. This helps take the mandate away from authors or reviewers to seek review.

GitHub has a two routing options:

- Round Robin: Chooses reviewers based on who’s received the least recent review request.

- Load Balance: Chooses reviewers based on each member’s total number of recent review requests. It tries to ensure that team members review an equal number of pull requests in any 30 day period.

As needed, you can opt to exclude teammates from review. You can also do something fancy like assign a senior and junior team member to each pull request. If a subject matter expert is truly required, they can always be summoned 🪄🧙 in addition to the default assignees.

Auto assignment of code review is a low effort way to spread knowledge, share the burden, and to reduce the cognitive load of deciding who should be assigned.

Implement a SQL linter

Too often, code review can focus on superficial decisions that have little or no impact on performance. By implementing a SQL linter like sqlfluff, we can do away with reviewing SQL formatting in pull requests once and for all! Syntax and formatting decisions can be made in advance, in the linter, and will be universally applied to all SQL in your project.

By letting the linter arbitrate the age-old debates of leading commas and capitalization of keywords, they’re simply no longer a topic of discussion on pull requests. Questions that were previously ambiguous (contentious, even!) now have a clear answer, and don’t need to be discussed.

Data Diff in CI

Reviewers often lack detailed context around a given pull request, and can be flying blind when it comes to really understanding the impact of proposed changes. To merge with increased confidence, a thorough reviewer might checkout the feature branch, run models locally, and manually compare to production. However, this is a tedious manual process that most reviewers simply won’t do. Apologies that you had to hear it from me, but last I checked:

- Santa isn’t real. 😭

- Reviewers don’t test your data models locally. 😬

Faced with the prospect of 1) move fast and break stuff, vs 2) move slow, carefully review, and (maybe) break less stuff, most reviewers will default to the first option, and “LGTM” their way through the day.

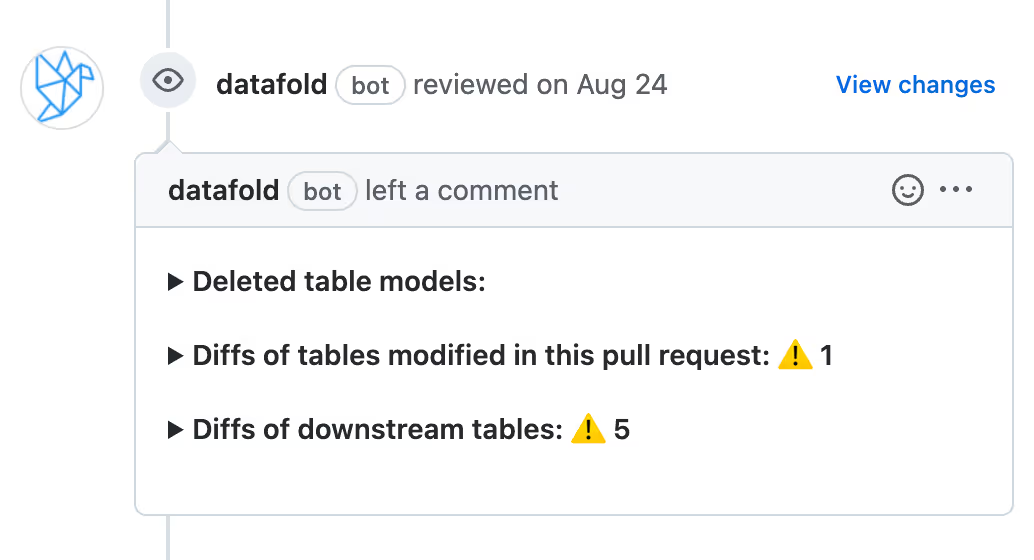

Here’s where Data Diff in CI fits in. Once plugged into the CI process, Datafold’s integration will stamp your pull request with a tidy summary of impacted models.

With a quick glance, and a click or two, the reviewer instantly knows exactly which models are impacted by the changes proposed in the pull request. Is this a standalone model with no dependencies? Or are there 700 models downstream? By describing the blast radius of a pull request, the reviewer is given key data points that allow them to calibrate for risk.

Change management is all about risk assessment. For low risk changes, teams can afford to, and benefit from, moving quickly. Most small changes can quickly be reverted and have low impact. The value of moving quickly outweighs the cost it would take to methodically review each change. For high risk changes, such as a pull request that touches your `key_revenue_metrics` model, more rigorous review is warranted.

With Data Diff in CI, reviewers can quickly assess the risk associated with the PR, and scope their review accordingly. It acts as a traffic light 🚦 enabling reviewers to go when they should go, and stop when they should stop.

Summary

A perfect code review culture is aspirational, with no clear finish line. Rather, code review health is something teams should monitor and iterate on over time. With the workflow improvements covered in this post, you can automate away some of the mundane bits, and leave more bandwidth for your team to provide proper code review.

What does code review look like on your team? What has your team done to improve code review?

.avif)