Hadoop to Databricks Migration: Challenges, Best Practices, and Practical Guide

If you’re planning a Hadoop migration to Databricks, use this guide to simplify the transition. We shed light on moving from Hadoop's complex ecosystem to Databricks' streamlined, cloud-based analytics platform. We delve into key aspects such as architectural differences, analytics enhancements, and data processing improvements.

We’re going to talk about more than just the tech side of things and tackle key business tactics too, like getting everyone on board, keeping an eye on the budget, and making sure day-to-day operations run smoothly. Our guide zeros in on four key pillars for nailing that Hadoop migration: picking the right tools for the job, smart planning for moving your data, integrating everything seamlessly, and setting up strong data rules in Databricks. We've packed this guide with clear, actionable advice and best practices, all to help you steer through these hurdles for a transition that's not just successful, but also smooth and efficient.

Lastly, Datafold’s AI-driven migration approach makes migration faster, more accurate, and more cost-effective than traditional methods. With automated SQL translation and data validation, Datafold minimizes the strain on data teams and avoids the lengthy timelines and high costs typical of in-house or outsourced migrations.

This lets you complete a full-cycle migration with precision, often in weeks or months rather than years, so your team can focus on delivering high-quality data to the business. If you’d like to learn more about the Datafold Migration Agent, please read about it here.

Common Hadoop to Databricks migration challenges

In transitioning from Hadoop to Databricks, one of the significant technical challenges is adapting current Hadoop workloads to Databricks' analytics framework. Those workloads were originally designed for a distributed file system and batch processing. You'll need to reconfigure and fine-tune them to take advantage of Databricks' in-memory processing and streaming capabilities.

You’ll also have to think about managing data in a cloud-native space, which works very differently in Databricks than it does in Hadoop. It may sound overwhelming, but it's totally doable. The work comes down to carefully adjusting each workload so that it aligns with the new environment Databricks provides.

To get started, we need to talk about architecture.

Architecture differences

Hadoop and Databricks have distinct architectural approaches, which influence their data processing and analytics capabilities.

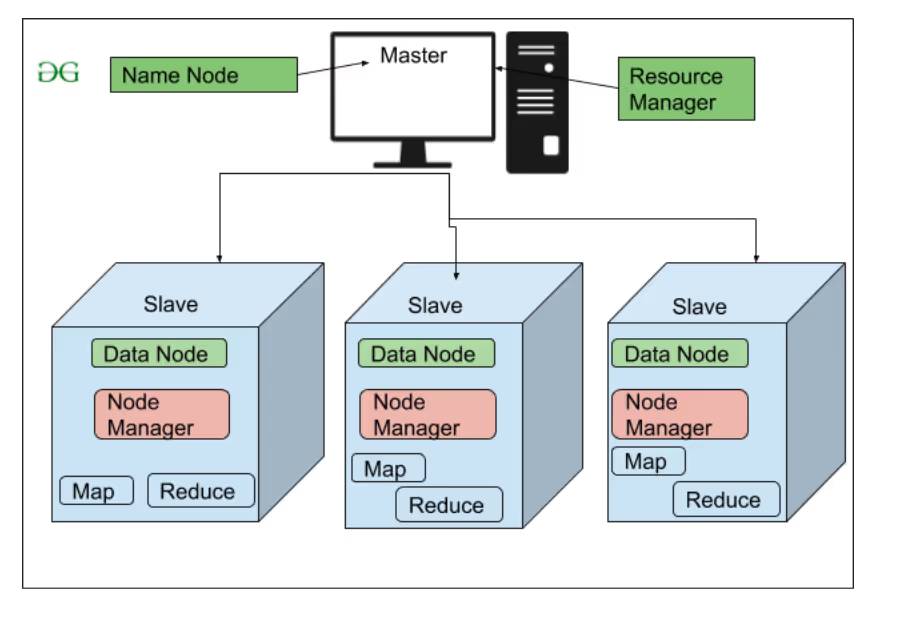

Hadoop, famed for its ability to handle vast volumes of data via its distributed file system and MapReduce batch processing, operates across multiple hardware systems. While its design is robust for large-scale data handling, it's fairly complex. It demands hands-on setup and management of clusters and typically relies on extra tools for in-depth data analytics, striking a balance between power, complexity, and hosted on-premise hardware.

Databricks, on the other hand, offers a unified analytics platform built on top of Apache Spark. As a cloud-native solution, it simplifies the user experience by managing the complex underlying infrastructure. Its architecture facilitates automatic scaling and refined resource management. Hadoop’s approach leads to enhanced efficiency and accelerated processing of large-scale data, making it a robust yet user-friendly platform for big data analytics.

Hadoop’s architecture explained

Hadoop's design is deeply rooted in the principles of distributed computing and big data handling. It's crafted to manage enormous data sets across clustered systems efficiently. The system adopts a modular approach, dividing data storage and processing across several nodes. Its architecture not only enhances scalability but also ensures robust data handling in complex, distributed environments.
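To make the storage side concrete, here is a small sketch of how HDFS splits a file into blocks and replicates them. It assumes the common Hadoop defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`). Your cluster may be configured differently, and the `hdfs_footprint` helper is purely illustrative.

```python
import math


def hdfs_footprint(file_mb: float, block_mb: int = 128, replication: int = 3) -> tuple[int, float]:
    """Return (number of HDFS blocks, raw disk used in MB) for a single file.

    Assumes default-style settings (128 MB blocks, 3 replicas). The last block
    only occupies as much disk as the data it actually holds.
    """
    blocks = math.ceil(file_mb / block_mb)
    raw_mb = file_mb * replication  # every byte is stored `replication` times
    return blocks, raw_mb


# A 1 GB (1024 MB) file becomes 8 blocks and uses about 3 GB of raw disk.
print(hdfs_footprint(1024))  # (8, 3072)
```

HDFS spreads those block replicas across DataNodes. This lets Hadoop schedule processing on nodes that already hold the data, and it is also why storage and compute capacity grow together in an on-premises cluster.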

While Hadoop’s architecture excels in storing and processing large-scale data, it faces challenges in scalability, performance, and integration with newer, cloud-based technologies.

Scalability: Hadoop was initially crafted for batch processing using affordable, standard hardware, employing a horizontal scaling approach. Managing a substantial on-prem Hadoop setup isn’t easy, often demanding considerable investment and operational effort. As data and processing demands escalate, scaling an on-premises Hadoop cluster can turn into a major hurdle. The complexity here stands in stark contrast to the more fluid and scalable cloud-native solutions offered by Databricks.

Performance challenges: Hadoop’s performance largely hinges on how effectively MapReduce jobs are executed and how the Hadoop Distributed File System (HDFS) is managed. Although Hadoop is capable of processing large datasets, batch processing times can be slow, particularly for intricate analytics tasks. Fine-tuning performance in Hadoop demands an in-depth grasp of its hardware and software intricacies. Additionally, MapReduce writes intermediate results to disk between processing stages, and the system's distributed nature adds network overhead. Both add latency during data processing.
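The MapReduce model behind these batch jobs can be illustrated with a single-process word count in plain Python. This is only a sketch of the map, shuffle, and reduce phases. A real Hadoop job distributes each phase across the cluster and writes intermediate output to disk, which is one source of the latency described above.

```python
from collections import defaultdict
from collections.abc import Iterable


def map_phase(lines: Iterable[str]) -> list[tuple[str, int]]:
    # Map: emit a (word, 1) pair for every word in every input line.
    return [(word.lower(), 1) for line in lines for word in line.split()]


def shuffle_phase(pairs: list[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle/sort: group all values emitted for the same key, ordered by key.
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(grouped: dict[str, list[int]]) -> dict[str, int]:
    # Reduce: combine the grouped values for each key into one result.
    return {key: sum(values) for key, values in grouped.items()}


lines = ["big data big clusters", "Big jobs"]
counts = reduce_phase(shuffle_phase(map_phase(lines)))
print(counts)  # {'big': 3, 'clusters': 1, 'data': 1, 'jobs': 1}
```

In Spark, the same computation is usually expressed as a chain of transformations that can keep intermediate data in memory rather than materializing it between jobs.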

Integration with evolving technologies: Hadoop, developed in the mid-2000s, occasionally struggles to integrate seamlessly with newer, cloud-native technologies. The framework's introduction marked a major advancement in handling large datasets, but its inherent complexity now necessitates specialized skills for effective management.

Following the challenges of Hadoop integration, the data processing landscape witnessed the emergence of data lakes. This new concept, building upon and extending Hadoop's capabilities, marked a turning point in handling large datasets. Modern platforms like Databricks, which embody cloud-native principles and provide managed services, are at the forefront of this transformation. They represent a pivotal shift towards more agile, efficient, and user-friendly big data processing, offering a stark contrast to the rigidity of traditional Hadoop environments.

Databricks architecture explained

At the heart of Databricks lies its cloud-native Lakehouse Platform, which supports both data analytics and artificial intelligence workloads. The lakehouse combines the flexibility and low-cost storage of data lakes with the reliability and performance of data warehouses. For example, Delta Lake adds ACID transactions on top of files in cloud object storage. This combination offers a well-rounded solution for big data challenges and allows for more efficient, faster processing of large datasets.

Central to this architecture is Apache Spark, an open-source, distributed computing system known for fast, in-memory data processing. Databricks runs Spark as a managed service. It handles cluster provisioning, configuration, and autoscaling, removing much of what is typically complex about operating Spark yourself. The result is better performance, more efficiency, and less operational hassle for everyone involved.

Another key aspect of Databricks is its integration with cloud object storage such as Amazon S3, Azure Data Lake Storage, and Google Cloud Storage. Because storage is decoupled from compute, you can scale each independently and keep as much data as you need while maintaining security controls. In on-premises Hadoop, storage and compute capacity are tied to the same cluster nodes. Compared with that model, managing and processing large volumes of data in Databricks is considerably simpler.

Databricks goes beyond storage and processing; it also provides tools like Delta Live Tables (DLT), a framework for declaratively building and managing reliable data pipelines. DLT includes built-in data quality checks, called expectations, and handles much of the pipeline maintenance for you, streamlining the entire data handling process.
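Delta Live Tables attaches data quality rules to a table definition as expectations. Each expectation has a policy that either keeps invalid records (while recording them), drops them, or fails the update. The plain-Python sketch below only mimics those three policies on a list of rows so the behavior is easy to see. It is not the DLT API; in a Databricks pipeline you would use decorators from the `dlt` module such as `@dlt.expect_or_drop`. The `apply_expectation` helper and the `Policy` enum are hypothetical.

```python
from enum import Enum
from typing import Any, Callable

Row = dict[str, Any]


class Policy(Enum):
    WARN = "warn"  # keep invalid rows, but count them
    DROP = "drop"  # remove invalid rows from the output
    FAIL = "fail"  # stop processing on the first invalid row


def apply_expectation(rows: list[Row], name: str, check: Callable[[Row], bool],
                      policy: Policy) -> tuple[list[Row], int]:
    """Hypothetical helper: return (output rows, number of rows violating `check`)."""
    output: list[Row] = []
    violations = 0
    for row in rows:
        if check(row):
            output.append(row)
            continue
        violations += 1
        if policy is Policy.FAIL:
            raise ValueError(f"expectation '{name}' failed for row {row}")
        if policy is Policy.WARN:
            output.append(row)
        # Policy.DROP: the invalid row is simply not added to the output.
    return output, violations


rows = [{"id": 1, "amount": 10}, {"id": None, "amount": 5}]
kept, bad = apply_expectation(rows, "valid_id", lambda r: r["id"] is not None, Policy.DROP)
print(kept, bad)  # [{'id': 1, 'amount': 10}] 1
```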

Dialect differences between Hadoop SQL and Databricks SQL

Hadoop and Databricks have notable differences in SQL syntax, especially when it comes to managing complex data types and advanced analytics functions. For data engineers and developers, understanding these differences is a critical part of the transition process. SQL differences play a major role in how smoothly we can migrate and reshape our data workflows between these platforms.

Let's talk about Hadoop's SQL. It mainly uses Hive (HiveQL) and Impala, SQL dialects modeled on standard ANSI SQL but extended for big data processing. HiveQL, for instance, comes packed with functions made specifically for big data analytics. It includes Hadoop-specific extensions for complex data types such as arrays, maps, and structs, and it integrates closely with other parts of the Hadoop ecosystem, like HDFS and YARN.

Databricks SQL, based on Apache Spark, is a largely ANSI SQL-compliant dialect tailored for big data and cloud environments. It brings advanced analytics to the table and handles complex data types with ease. Databricks can also manage both batch and stream processing, making it a powerhouse for manipulating data in modern, data-heavy applications.

Traditional translation tools often struggle with these complexities, turning what might seem like a straightforward task into a months- or even years-long process.

Datafold’s Migration Agent simplifies this challenge by automating the SQL conversion process, adapting Hadoop SQL code (HiveQL and Impala queries and functions) for Databricks. This automation preserves critical business logic while significantly reducing the need for manual intervention, helping teams avoid the lengthy, resource-intensive rewrites that are typical in traditional migrations.

Dialect differences between Hadoop and Databricks: Data types

The SQL dialect differences between Hadoop and Databricks stem from their distinct approaches to big data. Hadoop's HiveQL is specifically designed for batch processing within distributed systems. In contrast, Databricks' SQL, grounded in Apache Spark and adhering to ANSI SQL standards, excels in both batch and real-time processing. Its advanced capabilities in analytics and machine learning provide users with a more versatile approach to data analysis.

Example Query: Hadoop SQL and Databricks SQL

In HiveQL, a common operation is to handle complex data types like arrays of structs. The following query uses the explode function, a basic operation for array data types:

This query transforms each element of an array into a separate row, a straightforward operation in HiveQL.
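To illustrate what explode does, the sketch below emulates it in plain Python, with rows represented as dictionaries. The `orders` table and its `order_id` and `items` columns are hypothetical. Each array element becomes its own output row. A row whose array is empty or null produces no output, matching explode; Spark's explode_outer would instead keep such a row with a null value.

```python
from typing import Any

Row = dict[str, Any]


def explode(rows: list[Row], array_col: str, alias: str) -> list[Row]:
    """Emulate SQL explode: one output row per element of row[array_col].

    Roughly what `SELECT order_id, explode(items) AS item FROM orders` returns
    for the hypothetical `orders` data below.
    """
    out: list[Row] = []
    for row in rows:
        base = {k: v for k, v in row.items() if k != array_col}
        for element in row.get(array_col) or []:  # null or empty arrays yield no rows
            out.append({**base, alias: element})
    return out


orders = [
    {"order_id": 1, "items": ["apple", "pear"]},
    {"order_id": 2, "items": []},
]
print(explode(orders, "items", "item"))
# [{'order_id': 1, 'item': 'apple'}, {'order_id': 1, 'item': 'pear'}]
```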

Spark SQL, while offering similar syntax, also provides functions for complex data types. An example of this is the inline function used for arrays of structs. HiveQL offers an inline table-generating function as well, so this is a capability the two dialects share:

The query above demonstrates the handling of arrays of structs, where each struct is transformed into a separate row with its fields becoming columns. The inline function is part of Spark SQL's extended functionality for complex types, showcasing its ability to handle nested data more effectively.
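Along the same lines, inline on an array of structs produces one row per struct and one column per struct field. The sketch below emulates that in plain Python, with structs represented as dictionaries. The `order_id`, `line_items`, `sku`, and `qty` names are hypothetical, and the sketch assumes every struct in the array has the same fields.

```python
from typing import Any

Row = dict[str, Any]


def inline(rows: list[Row], array_col: str) -> list[Row]:
    """Emulate SQL inline: one output row per struct, one column per struct field.

    Roughly what `SELECT order_id, inline(line_items) FROM orders` returns
    for the hypothetical `orders` data below.
    """
    out: list[Row] = []
    for row in rows:
        base = {k: v for k, v in row.items() if k != array_col}
        for struct in row.get(array_col) or []:
            out.append({**base, **struct})  # struct fields become top-level columns
    return out


orders = [{"order_id": 1, "line_items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]}]
print(inline(orders, "line_items"))
# [{'order_id': 1, 'sku': 'A1', 'qty': 2}, {'order_id': 1, 'sku': 'B7', 'qty': 1}]
```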

Both HiveQL and Spark SQL support explode for array types and inline for arrays of structs. Spark SQL builds on these with a broader set of built-in functions for nested data, such as the higher-order functions transform and filter, which operate on arrays without exploding them into rows. These capabilities underscore Spark SQL's suitability for more sophisticated data manipulation tasks, especially when dealing with nested and structured data types in large datasets.

Validation as a black box

Validation can become a “black box” in migration projects, where surface-level metrics like row counts may align, but hidden discrepancies in data values go undetected.

Traditional testing methods often miss deeper data inconsistencies, which can lead to critical issues surfacing only after the migration reaches production. Each failed validation triggers time-intensive manual debugging cycles, delaying project completion and straining resources.
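A small example shows why row counts alone are not enough. The sketch below compares a hypothetical source table and target table keyed by a primary key. The row counts match, yet a value-level comparison finds a changed amount. It is a simplified, in-memory illustration, not how Datafold or any specific tool implements diffing.

```python
from typing import Any

Row = dict[str, Any]


def diff_tables(source: list[Row], target: list[Row], key: str) -> tuple[list, list, list]:
    """Compare two tables by primary key; return (missing, extra, changed) keys."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    missing = sorted(src.keys() - tgt.keys())  # present in source only
    extra = sorted(tgt.keys() - src.keys())    # present in target only
    changed = sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k])
    return missing, extra, changed


hadoop_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 25.5}]
databricks_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 25.0}]

print(len(hadoop_rows) == len(databricks_rows))         # True: row counts agree
print(diff_tables(hadoop_rows, databricks_rows, "id"))  # ([], [], [2])
```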

Business challenges in migrating from Hadoop to Databricks

Migrating from Hadoop to Databricks presents several business challenges, which are important to consider for a successful transition. The four key challenges are:

- The never-ending “last mile”: Even when a migration is near completion, the “last mile” often proves to be the most challenging phase. During stakeholder review, previously unidentified edge cases may emerge, revealing discrepancies that don’t align with business expectations. This phase often becomes a bottleneck, as each round of review and refinement requires time and resources, potentially delaying full migration and user adoption.

- Cost implications: Migrations aren’t fast, cheap, or easy, so you can’t overlook the costs. There’s a mix of initial investment for making the transition and ongoing operational expenses. You should thoroughly evaluate and plan the budget, weighing the potential savings from reduced infrastructure management and increased efficiency against the costs associated with Databricks' usage-based pricing.

- Skills and training requirements: While Hadoop relies heavily on understanding its ecosystem, including components like MapReduce, Hive, and HDFS, Databricks is centered around Apache Spark and cloud-based data processing. Achieving a smooth transition may require additional training and upskilling of existing staff. In some cases, it might even require bringing in new talent equipped with these specific skills. Either path can be time-consuming and costly.

- Data governance and compliance: Migrating to a new platform like Databricks means taking a fresh look at how we handle data governance and compliance. It's not just a technical shift; it's about making sure our data governance policies are adapted and that the new system complies with industry regulations and standards. Databricks has advanced security and governance features, but it's not just about the tools. You’ll need to be strategic in your planning, allocating the right resources and managing the change effectively to guarantee a smooth and successful transition.
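The cost trade-off in the list above can be framed as simple break-even arithmetic. A one-time migration cost is recovered by the monthly difference between running Hadoop and running Databricks. The function and the figures in the usage line are hypothetical placeholders. Substitute your own estimates, including staff time and any parallel-run period when both systems are billed.

```python
from typing import Optional


def break_even_months(migration_cost: float, hadoop_monthly: float,
                      databricks_monthly: float) -> Optional[float]:
    """Months until a one-time migration cost is repaid by monthly run-cost savings.

    Returns None when Databricks is not cheaper per month, i.e. there is no
    break-even point on run costs alone.
    """
    monthly_savings = hadoop_monthly - databricks_monthly
    if monthly_savings <= 0:
        return None
    return migration_cost / monthly_savings


# Hypothetical figures only: 120k one-time, 50k/month on Hadoop, 40k/month on Databricks.
print(break_even_months(120_000, 50_000, 40_000))  # 12.0
```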

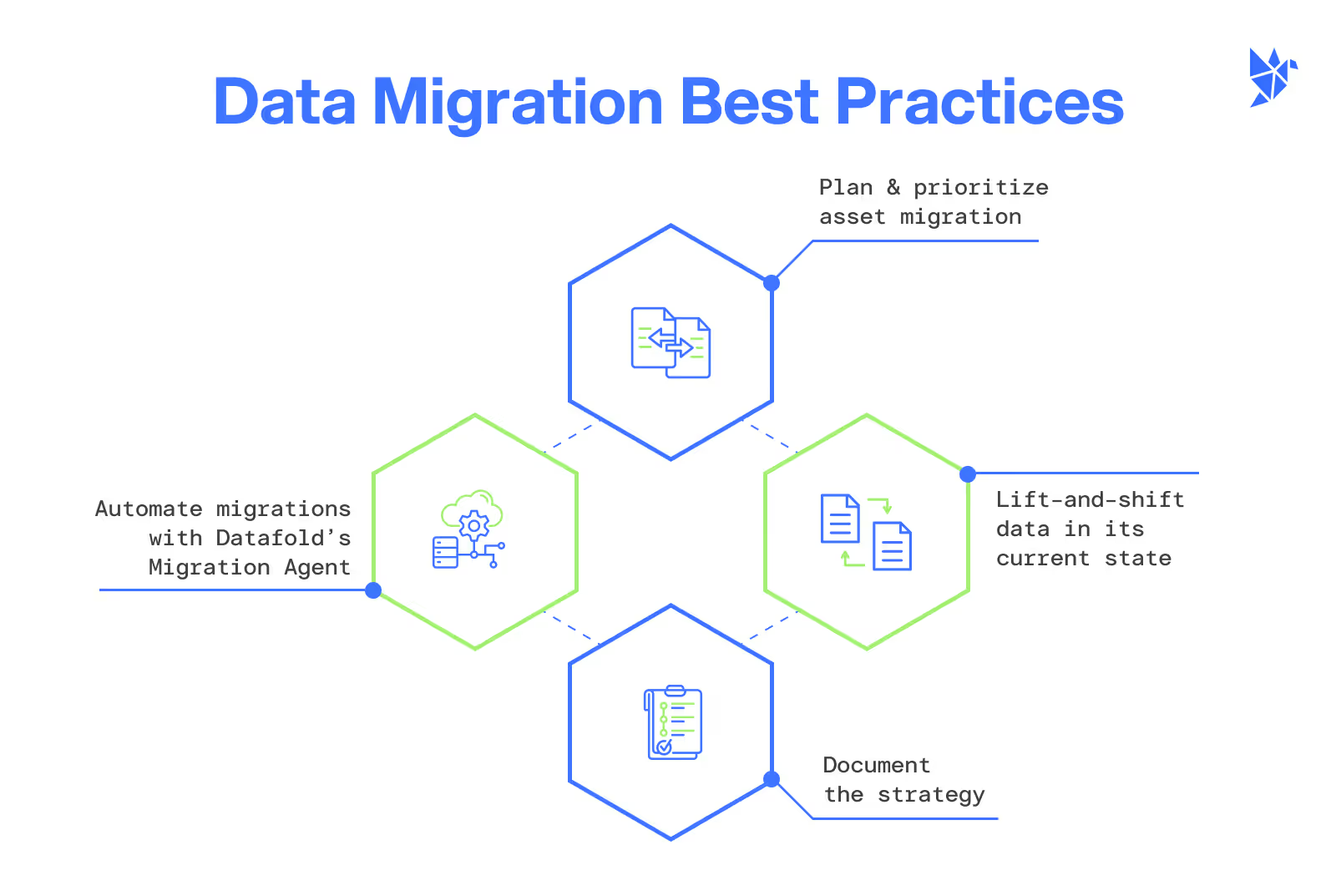

4 best practices for Hadoop to Databricks migration

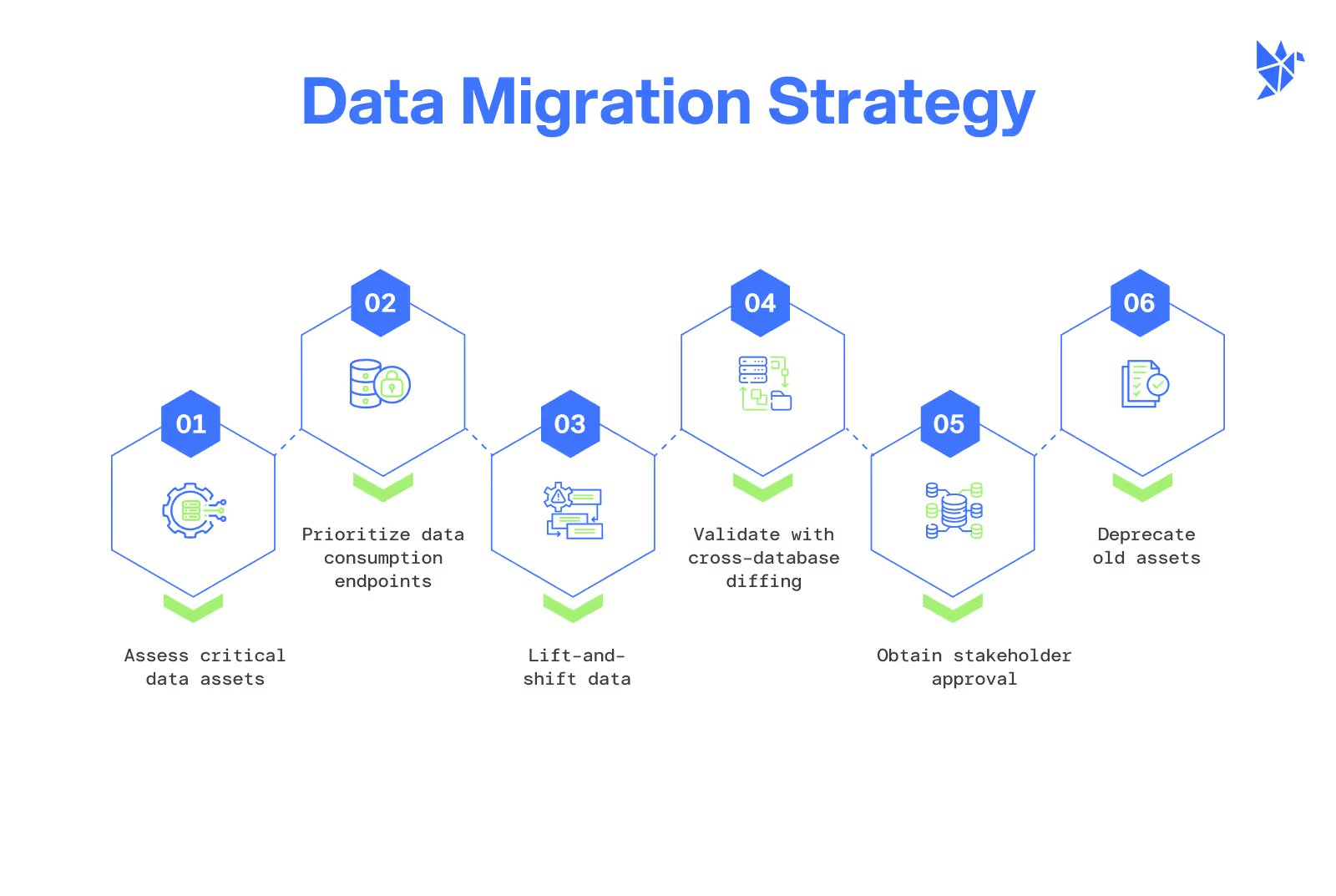

We've got a set of best practices tailored specifically for migrating from Hadoop to Databricks. They offer a clear, structured way to handle the change, making sure you hit all your technical needs while also keeping your business goals front and center. Here is how to make your move from Hadoop to Databricks smooth and efficient:

- Plan and prioritize asset migration

Effective migration planning involves using column-level lineage to identify and rank vital data assets, followed by migrating data consumption endpoints before data production pipelines to ease the transition and reduce load on the old system.
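One way to use lineage when ranking assets is to count how many downstream assets depend on each one, directly or indirectly. The sketch below does this over a small, hypothetical table-level lineage graph; column-level lineage works the same way, just with finer-grained nodes. The asset names are made up for illustration. The sketch also lists consumption endpoints, meaning assets that nothing else reads from, which this guide suggests migrating first.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets that read from it.
lineage: dict[str, list[str]] = {
    "raw_events": ["sessions", "orders"],
    "orders": ["revenue_daily"],
    "sessions": ["revenue_daily", "engagement_dash"],
    "revenue_daily": ["exec_dashboard"],
    "engagement_dash": [],
    "exec_dashboard": [],
}


def downstream_count(graph: dict[str, list[str]], node: str) -> int:
    """Number of distinct assets reachable downstream of `node` (breadth-first search)."""
    seen: set[str] = set()
    queue = deque(graph.get(node, []))
    while queue:
        current = queue.popleft()
        if current not in seen:
            seen.add(current)
            queue.extend(graph.get(current, []))
    return len(seen)


# Most depended-upon assets first; endpoints are assets with no readers.
ranking = sorted(lineage, key=lambda n: downstream_count(lineage, n), reverse=True)
endpoints = [n for n, readers in lineage.items() if not readers]
print(ranking[:2])  # ['raw_events', 'sessions']
print(endpoints)    # ['engagement_dash', 'exec_dashboard']
```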

- Lift and shift the data in its current state

Leverage Datafold’s Migration Agent (DMA) for the initial lift-and-shift. It automatically translates Hadoop SQL into Databricks syntax, which minimizes manual code remodeling and speeds up migration.
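Part of any lift-and-shift is mechanical SQL rewriting. Here is one narrow, illustrative case. Hive references tables as `database.table`, while Databricks with Unity Catalog uses a three-level `catalog.schema.table` namespace. The regex-based sketch below prefixes two-part table names that follow `FROM` or `JOIN` with a target catalog. The catalog name `main` and the `qualify_tables` helper are assumptions. A production-grade translator would parse the SQL rather than pattern-match it; this toy ignores quoted identifiers and subqueries.

```python
import re

# Two-part names (db.table) right after FROM or JOIN. The negative lookahead
# rejects names followed by another ".part", so three-part names are left alone.
_TABLE_REF = re.compile(
    r"\b(FROM|JOIN)\s+([A-Za-z_]\w*)\.([A-Za-z_]\w*)\b(?!\.)", re.IGNORECASE
)


def qualify_tables(sql: str, catalog: str = "main") -> str:
    """Rewrite `FROM db.table` as `FROM <catalog>.db.table` (toy example, not a parser)."""
    return _TABLE_REF.sub(
        lambda m: f"{m.group(1)} {catalog}.{m.group(2)}.{m.group(3)}", sql
    )


hive_sql = "SELECT o.id, c.name FROM sales.orders o JOIN sales.customers c ON o.cid = c.id"
print(qualify_tables(hive_sql))
# SELECT o.id, c.name FROM main.sales.orders o JOIN main.sales.customers c ON o.cid = c.id
```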

- Document your strategy and action plan

Establish a roadmap for the Hadoop-to-Databricks migration that outlines goals, timelines, and resource allocation. Integrate Datafold’s DMA to streamline SQL translation, validation, and documentation for a unified strategy.

- Automating migrations with Datafold’s DMA

Datafold’s Migration Agent (DMA) simplifies the complex process of migrating SQL and validating data parity across Hadoop and Databricks. It handles SQL dialect translation and includes cross-database data diffing, which expedites validation by comparing source and target data for accuracy.

By automating these elements, DMA can save organizations up to 93% of time typically spent on manual validation and rewriting.

Putting it all together: Hadoop to Databricks migration guide

Getting from Hadoop to Databricks without issue requires a plan that's both smart and well-organized. You’ll need to mix technical expertise with solid project management. Follow the best practices we've laid out in our guide, and you'll experience a smooth transition for your team. Here’s how to put these strategies into action:

- Develop a comprehensive migration plan

First, draw up a detailed migration plan. It should cover timelines, who's doing what, and how you'll keep everyone in the loop. Next, you really need to get under the hood of your current Hadoop setup. Understand how your data flows, and pinpoint exactly what you want to achieve with the move to Databricks. You'll start by moving the less critical data first, then gradually work your way up to the more important components.

Proceeding this way will help you manage the risks better. It's essential to know the strengths and weaknesses of your Hadoop environment as this knowledge will shape your goals and strategies for a smooth transition to Databricks.

- Prioritize data consumption endpoints first

It’s better to migrate data consumption endpoints before data production pipelines, which eases the transition and reduces load on the old Hadoop system. Start by adapting your data processing workflows to Spark. This step is vital for the migration: it involves shifting from Hadoop’s HiveQL or similar query languages to Spark SQL, which is a big part of Databricks’ data processing setup. Then transfer the less critical Hadoop workloads to Databricks first, making sure they're compatible and well-optimized for performance within Databricks’ Spark environment.

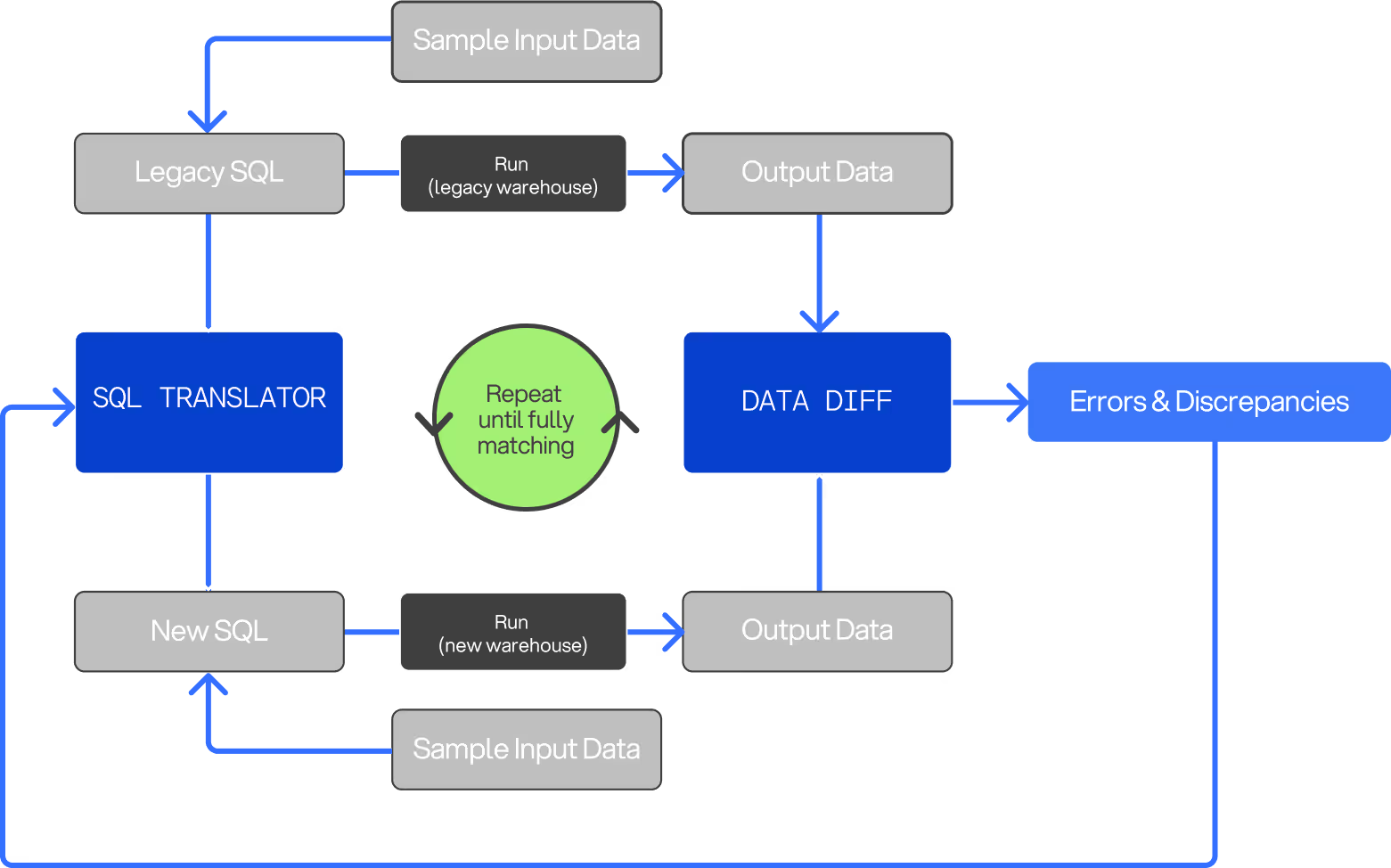

- Leveraging lift-and-shift for Hadoop to Databricks

Adopt a lift-and-shift strategy in the initial migration phase to simplify the transition, accommodating the architectural and SQL dialect differences between Hadoop and Databricks. Lift and shift data in its current state using the SQL translator embedded in Datafold’s DMA, which automates the SQL conversion process.

- Validate with cross-database diffing

Then, use Datafold’s cross-database diffing to verify data parity, enabling quick and accurate 1-to-1 table validation between your Hadoop and Databricks tables.
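Comparing every row across two systems can be expensive at scale. One common approach, sketched below in simplified form, is to checksum ranges of primary keys on each side and only inspect the ranges whose checksums differ. The segment size, the MD5-based row hashing, and the in-memory tables are all assumptions for illustration. This is not Datafold's implementation; in practice, each checksum would be computed by a query running inside each system.

```python
import hashlib
from collections import defaultdict


def segment_checksums(rows: dict[int, tuple], segment_size: int) -> dict[int, str]:
    """Map each primary-key segment (key // segment_size) to an MD5 of its rows."""
    segments: dict[int, list[str]] = defaultdict(list)
    for key in sorted(rows):
        segments[key // segment_size].append(f"{key}:{rows[key]!r}")
    return {seg: hashlib.md5("|".join(parts).encode()).hexdigest()
            for seg, parts in segments.items()}


def mismatched_keys(source: dict[int, tuple], target: dict[int, tuple],
                    segment_size: int = 1000) -> list[int]:
    """Compare segment checksums, then diff row by row only inside mismatched segments."""
    src_sums = segment_checksums(source, segment_size)
    tgt_sums = segment_checksums(target, segment_size)
    bad_segments = {s for s in src_sums.keys() | tgt_sums.keys()
                    if src_sums.get(s) != tgt_sums.get(s)}
    candidates = {k for k in source.keys() | target.keys()
                  if k // segment_size in bad_segments}
    return sorted(k for k in candidates if source.get(k) != target.get(k))


source = {i: ("order", i * 10) for i in range(5000)}
target = dict(source)
target[4321] = ("order", 0)  # one silently changed value
del target[17]               # one missing row
print(mismatched_keys(source, target))  # [17, 4321]
```

Only two of the five segments need a row-level comparison here. That saving is the point of checksumming first when tables hold billions of rows.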

- Get stakeholder approval

Keep everyone in the loop and on board. To facilitate a smooth transition to Databricks, equip your team with the necessary training and tools. Introduce them to innovative solutions like Datafold's data diffing, which significantly enhances data integrity and consistency, especially when transitioning from Hadoop to Databricks.

Demonstrating the tangible improvements in data processing with Databricks, supported by the robust capabilities of tools like Datafold, will garner widespread support, making the overall shift more seamless and effective for everyone involved.

- Finalize and deprecate old systems

Once the migration achieves stability and meets its objectives, it's time to say goodbye to the old Hadoop setup. Let everyone know you've successfully moved to Databricks and be there to help them get used to the new system.

Conclusion

As you tackle the challenge of moving from Hadoop to Databricks, remember this: it's all about thoughtful planning, strategic action, and using the right tools. If you're curious about how specialized solutions can smooth out your migration path, reach out to a data migrations expert and tell us about your migration, tech stack, scale, and concerns. We’re here to help you understand if data diffing is a solution to your migration concerns.

At Datafold, our aim is to assist in making your migration to Databricks as seamless and efficient as possible, helping you leverage the full potential of your data in the new environment.