SQL Tools on the Command Line

A review SQL tools for performing data analysis from the command line covering ease of installation, supported data and file formats, SQL functionality, and benchmarking on a common query.

In this post, we’ll review SQL tools for performing data analysis from the command line. We’ll cover the following five tools:

For each of these tools, we’ll focus primarily on these aspects:

- Ease of installation

- Supported data and file formats

- SQL functionality

- Benchmarking of a common query

clickhouse-local

The first tool comes from ClickHouse, the creators of the open-source, column-oriented database management system. Their CLI tool, clickhouse-local, must be installed with the entire ClickHouse binary, and you can follow the instructions here for installation. Alternatively, the official Docker image can be found on Docker hub. The tool is available for Linux and macOS.

Clickhouse-local uses the ClickHouse SQL dialect, which is close to ANSI SQL and supports most common SQL operators. With clickhouse-local, a developer can output the results of SQL queries to either a local “table” or directly to a filesystem.

Because the creator of clickhouse-local is a large engineering team, the documentation is better than most of the other CLI tooling, and it provides a large range of feature flags for customizable use. Such strong development support for the CLI tool makes it a strong option over other open-source tools, which have less support for bug fixes and feature requests.

Supported data formats

Clickhouse-local supports the following major data and file types:

- CSV/TSV/Flat Files

- Parquet

- JSON

- Avro

- ORC

Command-line usage

When running the tool from the command line, you can include the following major arguments to control operation and functionality:

- <span class="code">structure</span> (table structure of input data)

- <span class="code">input-format</span> (format of incoming data)

- <span class="code">file</span> (path to data)

- <span class="code">query</span or <span class="code">queries-file</span> (queries/SQL to execute)

In addition to the above arguments, you can include other flags to control things like logging, stack traces, and configurations.

Example usage for benchmarking

For our benchmark operation, we’ll use the Divvy Bikes open-source data set, which includes CSV files. Our task will be to read data from January 2022 and count the number of bike rides per day for that month.

Here is the clickhouse-local command to complete this action.

The most time-consuming part of processing a CSV file with clickhouse-local is building the <span class="code">--structure</span< command to identify the columns and data types. The SQL query itself is straightforward and easy to use. The above command results in the following output:

Overall, clickhouse-local is easy to use. However, it requires inputting the data types for the data being processed, and this can be time-consuming. One of the positive features of this tool is the availability of a pre-built Docker image, which simplifies the installation and use of clickhouse-local. In addition, clickhouse-local supports a wide range of file types, which will make it more useful to larger data teams working on complex architecture.

textql

Next up is textql. Textql is a smaller, open-source project on GitHub that is built with Golang. It can be installed on macOS with Homebrew or, alternatively, cloned from git and built using a Docker command. Like with clickhouse-local, the availability of the Docker image is a great feature that can simplify the installation and usage.

It’s worth noting that the documentation and examples for textql are slightly lacking. This might hinder usage and cause confusion regarding the style of SQL expected. Most of the usage instructions are found in the project repository README.

From the description, it appears that textql tries to follow closely with SQLite and likely uses SQLite in the background.

Supported data formats

Textql only supports the reading and queries of flat files (in CSV or TSV format), and not more complex files like Parquet or Avro. This may be problematic for those wanting to use a single CLI tool to inspect and query multiple file types.

Command-line usage

Similar in style to clickhouse-local, the following major command-line flags are used to control the usage of texql:

- <span class="code">dlm</span (input delimiter)

- <span class="code">header</span> (whether the input file includes a header)

- <span class="code">pretty</span> (formats the output)

- <span class="code">save-to file</span> (saves sqlite3 database to file)

- <span class="code">sql</span> (query to run)

The final argument to any textql command is the file name to be processed.

Example usage

Using the same open-source data set as above, we’ll use textql to read in data and query for the number of bike rides for each day in January 2022. The command looks like this:

As can be seen above, textql is a simpler command line to run, and the SQL format is closer to that of SQLite than other tools. By not requiring the specification of the data types for each column, the command for textql is simple and straightforward. Even then, textql was able to infer the timestamp values and still return the correct results. The date-time functions differ widely from clickhouse-local.

Using textql, we received the following output:

Overall, textql would be a good option for quick and simple text-file analysis.

q

q is another open-source project on GitHub, in a design similar to textql. It can be installed on macOS, Windows, and Debian Linux. Q was built to support SQL statements on tabular text data. This tool has a more extensive set of documentation and instructions than textql, making operation and use extremely clear.

Since q does not support complex data sources, this simplifies the interface and usage. Similar to textql, q follows the SQL syntax and style of SQLite. The queries and interface are also nearly identical to textql. This is another tool that could be adopted for quick and dirty text analysis.

Supported data formats

Q also only supports the reading and queries of CSV or TSV flat files.

Command-line usage

The tool has a few major flag options, specifically the ones that would be needed for flat file text data sources:

- <span class="code">H</span> (indicates the existence of header records)

- <span class="code">t</span> (to indicate a tab-delimited file)

- <span class="code">d</span> (specifically a special delimiter)

Example usage

To run the same query in q as we did for other tools, we use the following command:

Note the H flag to indicate the existence of headers and the d flag to set the delimiter as a comma. Similar to textql, Q is very easy to use and more straightforward than clickhouse-local. The output from the above command is:

dsq

Another open-source GitHub project, similar to textql and q, is dsq. Although dsq is similar, it provides more functionality and interfaces with more file types than just flat files. Also, dsq provides a wide range of command-line flags to turn on various extensions, ranging from caching to interactive mode.

Dsq also uses SQLite under the hood, so the syntax and functions will be familiar to most users. Dsq also supports JOINS on its data sets. The project README provides an overview, but the overall documentation is somewhat lacking.

Supported data formats

Dsql supports the following data and file types:

- CSV/TSV flat files

- JSON

- Parquet

- Excel

- Orc

- Parquet

- Avro

Dsq’s wide range of file type coverage (even Excel!) is impressive. In today's modern data stack environment, the ability to support many different, complex file types is important.

Command-line usage

Although dsq is slightly more advanced in its ability to read more formats, it doesn’t offer much concerning command-line flags for controlling the functionality.

- <span class="code">schema</span>

- <span class="code">pretty</span>

- <span class="code">cache</span>

- <span class="code">I</span> (interactive mode)

- <span class="code">convert-numbers</span> (converts strings with integers to integers)

Example usage

When using dsq to perform our same benchmarking query, we encounter some minor differences from the other tools. The file comes as the first argument, and the table name is left as an empty dictionary in the query. Otherwise, the dsq command is very short and to the point, and it’s less complex than clickhouse-local.

The result comes back nicely formatted when using the -p flag. This is different from the other tools and easy on the eyes.

roapi

The last tool we’ll review is roapi, and it is probably the most complex of all. However, it’s also the most feature-rich. This tool can be used via Docker or installed with Homebrew. Because of its complexity, roapi actually builds an HTTP API on top of your data, so its usage requires a few more command-line arguments than the other tools. In fact, roapi is so advanced, it even provides the ability to run GraphQL queries.

Since roapi builds an API for interaction, it’s important to note that the user will need to be familiar with curl or some other tool for sending and receiving HTTP requests and responses.

Supported data formats

Roapi supports the following data and file types:

- CSV/TSV flat files

- Parquet

- JSON

- … and several other databases.

Example usage

The additional complexity of roapi can be seen in the following example. First, we run the command that adds our CSV data as a table (which we will call <span class="code">trips</span>) and starts up the API server:

Once this is complete, we can use curl to send a query. For example:

Unfortunately, the documentation for roapi notes that the REST API query frontend currently supports the following query operators: columns, sort, limit, and filter. Without the support for <span class="code">GROUP BY</span> and aggregate functions, we can’t perform the benchmarking example query that counts the number of trips for each day of the month. This is unfortunate. While roapi does have a lot of things going for it, the lack of support for some of these querying features limits its usefulness.

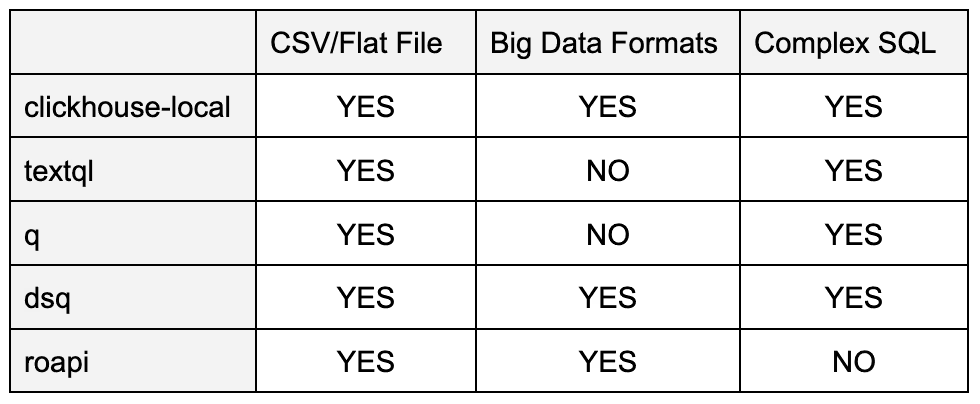

SQL CLI Tool Comparison Chart

With the rise of the modern data stack and the speed at which data teams need to move, a reliable command-line tool for inspecting files and running simple analytics locally would definitely bring greater convenience and efficiency. While it’s possible to load small datasets into large SaaS tooling, doing so requires a lot more work and overhead than a simple CLI tool.

Before adopting a specific tool, you should be clear about your needs. Are you looking for a tool that mostly focuses on flat files like CSV, or do you have more complex data, like Parquet or Avro? Not every tool supports these more common big data file types. The following table breaks down the tools we reviewed:

On the surface, these CLI tools all provide similar functionality and performance. However, it’s important to review the documentation, installation, and features available to ensure the tool can fit your requirements.

.avif)