Good Data: How Spotify, Shopify & Lyft approach data quality

We are thrilled to announce we are finally on Clubhouse! Our latest conversation on data, engineering, and data quality featured co-host George Xing, Zeeshan Qureshi, and Josh Temple. They joined us from Lyft, Shopify, and Spotify, respectively.

Below is a lightly edited transcript of our interview.

This is the first in a series of interviews about unlocking different approaches to data quality. If you enjoy the conversation and don’t want to miss other opportunities from Datafold, check out our Data Quality Meetups.

Can you tell us about your background and how it led you to a career in data quality?

George: My background is in analytics and data. I spent most of my early career at Lyft, where I joined as the first analytics hire. I spent about five years building out the team and overseeing some of the early business intelligence efforts. I have run into a lot of data quality issues over the years. More recently, I have been exploring a few directions in the data space, particularly about data quality issues; it is a fun yet painful topic.

Zeeshan: Thanks for having me! I have been at Shopify for five years. I started by building our analytics collection platform for storefront data. Over the last three years, I have led our team. We have an event streaming platform to schematize data collection.

Josh: Thank you for having me; I am Josh Temple. I am an Analytics Engineer at Spotify. I work on an ML platform to think about the way that we segment Spotify users. Over the past couple of years, I have been working on a new project called Spectacles. Spectacles is a continuous integration tool for Looker. It is an automated testing platform that started as an open-source CI and is now a hosted web app. I am excited to talk about this topic. As a self-taught data person with no formal engineering background, I did not realize at first how important quality and testing are or how interested I would be in this domain. It has been such a big part of my journey over the past few years!

How do you manage to democratize the process of building pipelines while maintaining reliability and ensuring that people do not break things?

Zeeshan: I’ve thought a lot about CI and testing of data pipelines, and I’ve had the opportunity to implement reliable and approachable data engineering practices with dbt at Shopify. To explain, I’d like to share some context on how we started collecting our data. In 2015, Shopify’s data collection process was completely un-schematized. We had a library called Trecky where you could track and send any data.

The engineering team thought they were collecting the data for data scientists, without understanding its usability, and figured the data scientists would notify engineers when things broke or tell them whether the data was useful. We found that the iteration and data quality cycle was on the order of months. There would be outages, and we would find out months later that data was lost or missing. We realized this was not a sustainable practice as we were growing as a company.

So what did we do? We pushed all the data contracts as close to the engineering team as possible to enable the team to work with the data directly. We effectively made the engineering team collect data for their own team and maintain the data infrastructure. And by focusing on data collection, our engineering team was able to automate the provisioning of Kafka topics and table creation in Hive.

So in 2017, we came up with a system that included very strict guidelines. Everything was automated, in the sense that we even forced users to create schema versions and minor versions, and we checked the immutability of schemas. So whenever engineers defined a new event, for example, “Add to Cart”, we provisioned everything else. We published all the libraries for them, and the biggest part was adding testing helpers for them. So a lot of our work was focused on how we can make Shopify engineers collect, test, and break the data as soon as possible. This really helped us and led to a more reliable journey within our data collection process.
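To make the versioning rules above concrete, here is a minimal sketch of a schema registry that rejects edits to a published version and only lets a minor version add fields. This is not Shopify's implementation: `SchemaRegistry`, `SchemaVersion`, the field names, and the "minor versions may only add fields" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SchemaVersion:
    major: int
    minor: int
    fields: tuple  # sorted (field name, field type) pairs


class SchemaRegistry:
    """Toy in-memory registry (hypothetical, for illustration only)."""

    def __init__(self):
        self._versions = {}  # event name -> list of SchemaVersion

    def register(self, event, major, minor, fields):
        new = SchemaVersion(major, minor, tuple(sorted(fields.items())))
        history = self._versions.setdefault(event, [])

        # Immutability: a published version can never change its fields.
        for old in history:
            if (old.major, old.minor) == (major, minor):
                if old.fields != new.fields:
                    raise ValueError(f"{event} v{major}.{minor} is immutable")
                return old  # identical re-registration is a no-op

        # Assumed rule: a minor version follows the previous one and
        # keeps every existing field with the same type.
        same_major = [v for v in history if v.major == major]
        if same_major:
            latest = max(same_major, key=lambda v: v.minor)
            if minor != latest.minor + 1:
                raise ValueError("minor versions must increase by one")
            if not set(latest.fields) <= set(new.fields):
                raise ValueError("a minor version may only add fields")

        history.append(new)
        return new


registry = SchemaRegistry()
registry.register("add_to_cart", 1, 0, {"cart_id": "string"})
registry.register("add_to_cart", 1, 1, {"cart_id": "string", "sku": "string"})
```

Running a check like this in CI, before anything is provisioned, is one way to surface a breaking schema change at review time rather than months later.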

What is Continuous Integration in Business Intelligence and why is that important for data quality?

Josh: Many BI tools like Periscope, Mode, Looker, and open-source players like Redash and Metabase contain a lot of business logic and information that ultimately dictates what users end up seeing on their dashboards and reports. One thing that Zeeshan mentioned earlier that resonates with me is thinking about Continuous Integration, not just from a software best practice standpoint, but as a step within data governance.

When you think about a BI tool, the end-users are typically analysts. They are using the information to drive business decisions. It’s harder to govern a tool that’s used by analysts, as it’s not as tightly controlled by engineers who might govern things like a company's website or a data engineering pipeline.

At Spectacles, we are enabling the benefits of continuous integration so that it’s easier to catch mistakes, apply standards in the development process, and monitor for unexpected events. We’re trying to make these CI benefits easily accessible in the BI layer so that analysts can also have a governable solution. We are starting with Looker. Looker has always been developer-friendly and embraces software engineering principles, for example, versioning and unit testing that come baked-in. At Spectacles, we’re able to provide the scaffolding around Looker so that analysts with limited continuous integration experience can quickly get started and reap the benefits.

One of our customers is an analyst who sits within a centralized BI team that operates as a hub-and-spoke model. He was able to get ramped up quickly. He didn’t have to feel that he was an expert on the company's Looker instance right off the bat. Instead, he felt confident that Spectacles via CI was catching the basic mistakes like typos in SQL and violations of company-created guardrails. Now he could focus on business logic, data design, and surfacing information in a user-friendly way. Spectacles is good at allowing humans to focus on what humans do best and not worry about what a computer can catch for them.

At Spectacles, we are also excited about working on a dbt integration. When data engineers make schema adjustments to tables inside of a data warehouse, analysts and data scientists will receive notifications about the downstream implications in Looker. These notifications will include details on broken tables and ETAs on data fixtures. We are confident this will help close the loop and bridge the gap between data engineers and analysts across BI tools.

How do you go about enforcing data quality standards?

Zeeshan: We have multiple checks throughout our data to ensure there is high quality. One phase is right after the data is collected and before being modeled. It is similar to operational checks that you have within your Datadog production systems. Another phase is deviation monitoring. If someone is hammering traffic from a certain IP range, we can account for it so that it doesn’t skew our data.
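As a rough illustration of the deviation-monitoring idea, the sketch below groups event source addresses into network ranges and flags any range that contributes an outsized share of traffic. The function name `noisy_ranges`, the /24 grouping, and the 50% threshold are assumptions chosen for the example, not details from the interview.

```python
from collections import Counter
import ipaddress


def noisy_ranges(ips, prefix=24, max_share=0.5):
    """Return {network: event count} for ranges above max_share of traffic."""
    # strict=False lets us pass a host address and get its enclosing network.
    counts = Counter(
        ipaddress.ip_network(f"{ip}/{prefix}", strict=False) for ip in ips
    )
    total = sum(counts.values())
    return {str(net): n for net, n in counts.items() if n / total > max_share}


events = ["10.0.0.1"] * 8 + ["192.168.1.1", "172.16.0.1"]
print(noisy_ranges(events))  # {'10.0.0.0/24': 8}
```

Flagged ranges could then be excluded or down-weighted before the data is modeled, so a single noisy source does not skew downstream metrics.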

Once the data is in our warehouse, we have another phase called Correctness. This phase is similar to unit testing. Correctness checks prevent changes from breaking downstream models. As the data within our dataset increases, we keep adding checks so that the system is more responsive to the end-user. Our goal is that as soon as engineers check something into the data warehouse, end-users (analysts and data scientists) get pinged on Slack or email with a data quality report and a sample of the data.
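One simple form such a correctness check could take is comparing a proposed table schema against the columns that downstream models read. The sketch below assumes that column usage per model is already known; `find_broken_models` and the model names are hypothetical.

```python
def find_broken_models(new_columns, downstream_usage):
    """Return {model: missing columns} for models the change would break."""
    available = set(new_columns)
    broken = {}
    for model, used_columns in downstream_usage.items():
        missing = sorted(set(used_columns) - available)
        if missing:
            broken[model] = missing
    return broken


# Hypothetical change: the "discount" column is dropped from an orders table.
proposed = ["order_id", "shop_id", "total"]
usage = {
    "daily_revenue": ["order_id", "total"],
    "discount_report": ["order_id", "discount"],
}
print(find_broken_models(proposed, usage))  # {'discount_report': ['discount']}
```

A non-empty result would fail the change in CI, and the same dictionary could feed the Slack or email report sent to end-users.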

Josh: This is an interesting topic because of how differently companies operate. Many companies try to be nuanced about introducing guidelines and tooling. They want to strike a balance: move fast, but keep enough standards in place that they don’t break things that could hurt the business.

Spotify has fewer checks in place because our data is far more decentralized. We follow the philosophy that if you build it, you maintain it and are responsible for it. The way it works is that when you create a data pipeline, you generally work off of a templated project, and those projects have best practices baked into them along with required test coverage. The understanding is that you'll use those best practices to build a maintainable system over time.

Data quality for Spotify enables recommendations, advertising, label, and artist payments, among others

How do you see “source of truth” being solved with either processes or tools today?

George: With things like unit testing and continuous integration, when things break, it’s typically black and white; we know when things are wrong and when they are right. This is something that we can quantify in unit testing assertions, but one of the big challenges that the industry faces is the problem of multiple “sources of truth”. So essentially the more democratized the data is, the more enabled people are to create data sets that are outside of the central data engineering teams.

When this happens, other teams are computing the same metrics, for example, user retention or cost per acquisition, in different ways, so ultimately teams end up having multiple “sources of truth” that need to be reconciled at the decision-maker level. This is a difficult problem that data teams constantly struggle with, not only technically but also organizationally and politically, because it can become a really big challenge if you have different data points telling your business to move in different directions.

Data quality problems cost US businesses $600B+ a year!

The answer is not necessarily to have multiple tools for discoverability but to have stricter ownership around the metadata. This allows different teams to use whichever tool they feel most comfortable with to arrive at the same conclusion. For example, data scientists can continue to use Jupyter Notebook, sales teams can continue to work off of Salesforce, and finance teams can use spreadsheets.

What is the difference between keeping the business logic in the transformation layer (e.g. dbt) and keeping it in BI tools like Looker?

In the world of SQL, there are many vendors, like dbt and Dagster, that are making it easy to do data transformation in the warehouse, and then there are BI tools like Looker and Tableau that are creating their own domain-specific languages for defining metrics. Looker’s domain-specific language, LookML, has come the closest to providing the right type of interface for consumers. The challenge with Looker is that it’s not for everyone. If there was a LookML that was interoperable with all kinds of tools across all kinds of companies, I think that could be very powerful.

With respect to dbt, it has done a lot of the leg work, but it is very focused on producing data sets and batched modeling as the final output. I think the reality is that different users use different tools and will want more flexibility to cut the data in different ways. Users want the flexibility and expressiveness of SQL, but they don’t want to maintain the same query with different filters, which could lead to inconsistency. If there’s a way to templatize parts of SQL so that they are reusable and adhere to design principles, and to take that to BI tools and decision-makers, you could complete the loop, which can be very powerful.
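The idea of templatizing reusable parts of SQL can be sketched as a single metric definition that every query is rendered from, so that teams vary only the dimensions and filters. Everything here is hypothetical: the `METRICS` registry, the table name, and `render_metric_query` are illustrations, not part of dbt or LookML.

```python
# One shared definition per metric: the single place the logic lives.
METRICS = {
    "retained_users": {
        "table": "analytics.user_activity",
        "expression": "COUNT(DISTINCT user_id)",
    },
}


def render_metric_query(metric, dimensions=(), filters=()):
    """Render SQL for a metric, varying only dimensions and filter columns."""
    definition = METRICS[metric]
    select = [*dimensions, f"{definition['expression']} AS {metric}"]
    sql = f"SELECT {', '.join(select)} FROM {definition['table']}"
    if filters:
        # Filter values are left as named placeholders to be bound at run time.
        sql += " WHERE " + " AND ".join(f"{col} = :{col}" for col in filters)
    if dimensions:
        sql += " GROUP BY " + ", ".join(dimensions)
    return sql


print(render_metric_query("retained_users", ["country"], ["platform"]))
```

Because column names are interpolated directly into the SQL string, a real version would need to validate them against an allow-list. The point of the sketch is only that the metric expression is defined once and reused everywhere.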

What is a current trend in the data world that you are excited about?

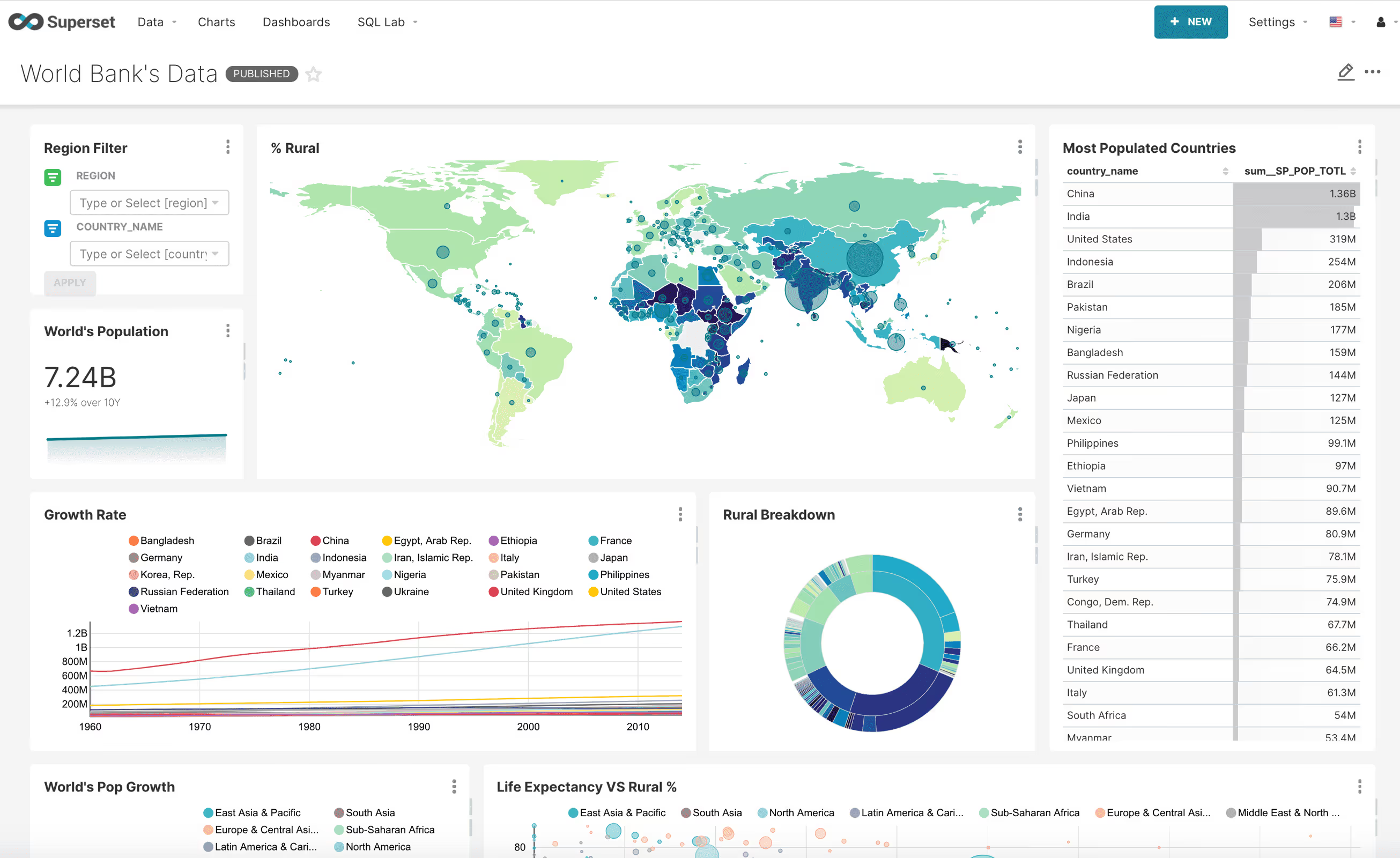

Zeeshan: I have been interested in streaming and in the real-time interactivity of data-serving platforms. I have been diving into Druid, Pinot, and Pleco over the last year. As Superset has matured, it has been a step change for data scientists: they can explore data interactively in real time and not have to wait on the order of minutes to get an idea of what they are working with. If you’re looking into large storefront data, you’ll be able to slice and dice by dimensions in less than ten seconds, which allows you to explore the data a lot faster. The result may not be one hundred percent accurate, but if it is directionally correct, it tells you whether you need to dig into the data more.

Josh: I am interested in thinking about data quality less from a rules-based standpoint and more from a statistical modeling standpoint. The idea is a more unstructured approach to data quality, where I am not the one defining each rule. One way is to add statistics that flag these issues in advance and in an efficient manner. Larger organizations have tons of data they can use to get at data quality problems. Statistical modeling will also allow us to see the downstream and upstream dependencies in warehouses, so we’ll be able to understand the impact and the potential source of a problem.
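As one small example of a statistical rather than rule-based check, the sketch below flags a daily row count that sits far from recent history, using a robust z-score built on the median absolute deviation. The choice of statistic, the 3.5 threshold, and the name `is_anomalous` are assumptions for illustration. The sketch covers only the flagging step, not the dependency analysis Josh mentions.

```python
from statistics import median


def is_anomalous(history, today, threshold=3.5):
    """Flag today's value if it is far from the median of recent history."""
    med = median(history)
    # Median absolute deviation: a spread estimate that resists outliers.
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        return today != med
    # 0.6745 scales MAD to be comparable to a standard deviation.
    robust_z = 0.6745 * (today - med) / mad
    return abs(robust_z) > threshold


row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
print(is_anomalous(row_counts, 400))   # True
print(is_anomalous(row_counts, 1015))  # False
```

Nobody has to hand-write a rule such as "row count must exceed 900" here. The acceptable range is derived from the data and adapts as the history changes.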

Apache Superset. Source: https://superset.apache.org/gallery
