Using the dbt Semantic Layer to easily build semantic models

Explore how the dbt Semantic Layer is evolving the way data teams define and expose key business metrics.

In the "good ol’ days," data was just something you collected and stored in a relational SQL database cluster to power apps and a handful of business systems. Those were simpler times: just a handful of first-party data sources with some third-party data sprinkled in on occasion. Nowadays, data is a production asset, part of a large ecosystem used by the entire business, dev teams, vendors, apps, partners, and AIs that probably won’t take over the world.

Managing data as a product is an entirely different practice than it was a few years ago, and the transition hasn’t been clean or easy. In fact, it’s created big problems for data teams because there are so many stakeholders and consumers to think about. It’s led to versioning, productizing, and transforming data in real time using the power of cloud computing. Wild!

The world of data engineering and analytics has evolved, leading us to powerful tools like dbt. It helps us sort, clean, organize, transform, share, and version control our data so we can build queryable models. Essentially, dbt lets us treat and work with data like the business asset it is. To make data even more trustworthy, reliable, and queryable, we now have the dbt Semantic Layer, and it’s important that we understand what it is and how it works.

Understanding the dbt Semantic Layer

Data is hard to manage, and the more you have, the harder it gets. As complexity grows, so does the challenge of getting consistent, trustworthy outputs. This is a major challenge for large companies. It’s not uncommon for five people to run the "same" report five times and get five different results.

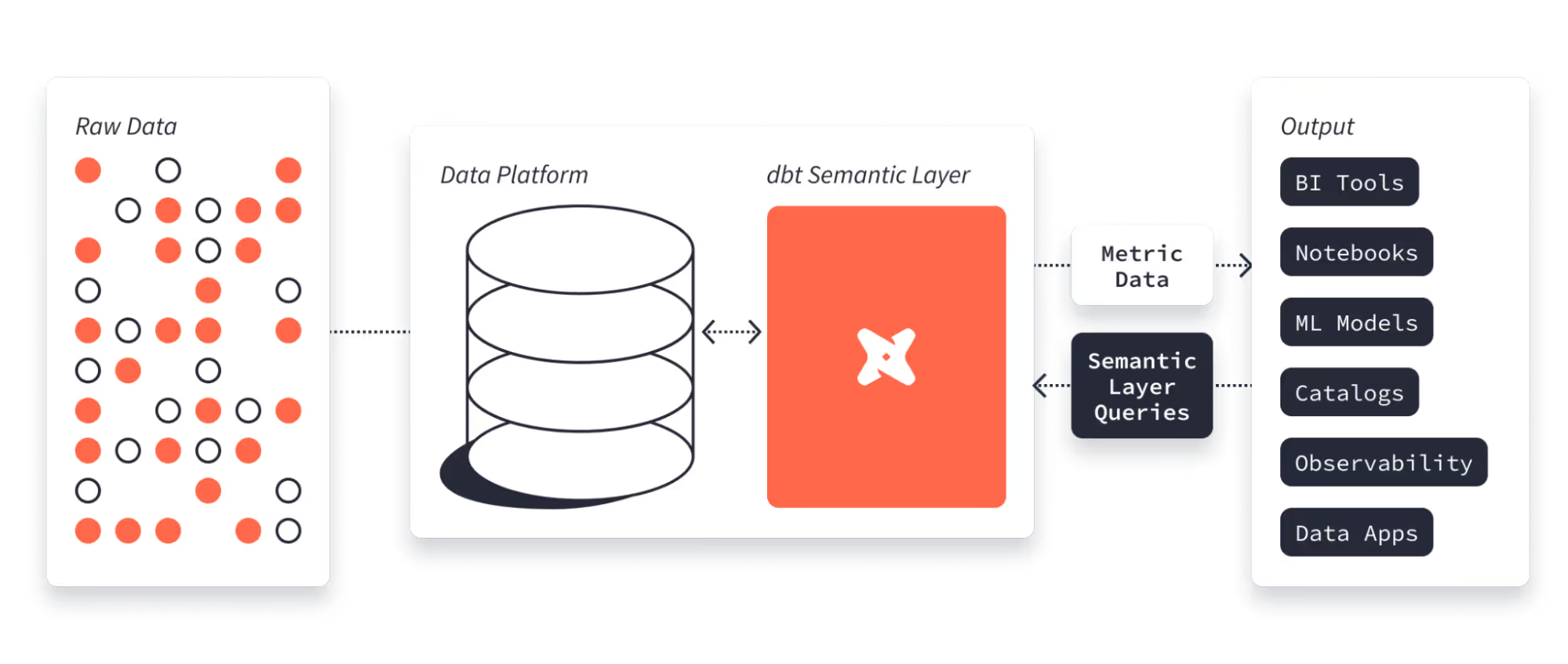

The dbt Semantic Layer seeks to fix that. It acts as an intermediary that bridges the gap between complex raw data and the end-user, turning highly detailed data into clear, usable information. The big idea is to bring consistent metrics in a simple way to business users, no matter what they’re using to query.

For example, you might use the Semantic Layer to provide API-like endpoints describing monthly active users (MAU), growth rates for particular metrics, or revenue numbers. No matter who queries the endpoint and no matter how they run the query, they get the same results.
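To make the "same query, same answer" idea concrete, here is a minimal Python sketch built on a tiny, hypothetical list of user activity events. Two ad-hoc MAU calculations disagree on the same data, while every consumer that calls one shared definition gets a single answer. The event data and function names are invented for illustration; the real Semantic Layer defines metrics in YAML and serves them through its APIs rather than through Python functions.

```python
from datetime import date

# Hypothetical raw activity events: (user_id, event_date, is_internal_test_account)
EVENTS = [
    ("u1", date(2024, 3, 2), False),
    ("u2", date(2024, 3, 15), False),
    ("u2", date(2024, 3, 20), False),
    ("qa", date(2024, 3, 21), True),
    ("u3", date(2024, 2, 28), False),
]


def mau_analyst_a(events, year, month):
    # Ad-hoc definition #1: counts every event row, so repeat users are double-counted
    return sum(1 for _, d, _ in events if (d.year, d.month) == (year, month))


def mau_analyst_b(events, year, month):
    # Ad-hoc definition #2: distinct users, but test accounts are not excluded
    return len({u for u, d, _ in events if (d.year, d.month) == (year, month)})


def monthly_active_users(events, year, month):
    """Shared definition: distinct non-test users active in the calendar month."""
    return len({u for u, d, is_test in events
                if (d.year, d.month) == (year, month) and not is_test})


if __name__ == "__main__":
    print(mau_analyst_a(EVENTS, 2024, 3))         # 4
    print(mau_analyst_b(EVENTS, 2024, 3))         # 3
    # Every consumer (BI tool, notebook, app) calls the same shared definition
    print(monthly_active_users(EVENTS, 2024, 3))  # 2
```

Centralizing the definition is the point: the disagreement disappears not because anyone ran the query more carefully, but because there is only one place the logic lives.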

With the dbt Semantic Layer, you can define semantic models and the core business metrics built on top of them, then query those metrics within BI and data app integrations (like Mode and Hex) and through APIs. This means you can use any API-friendly technology to get the data you need in whatever way works best for you and the tech you’re using. Cool!

It’s important to note that the dbt Semantic Layer is part of the dbt Cloud experience. dbt Core users can use MetricFlow to define entities and metrics, but the Semantic Layer is the, well, layer that exposes those metrics to third-party integrations.

The key components of the dbt Semantic Layer include:

- Semantic models with MetricFlow: How metrics and entities are defined in the dbt Semantic Layer, handling SQL query construction and spec definition for semantic models and metrics

- APIs and integrations: JDBC and GraphQL endpoints that integrate with MetricFlow

- Query generation: How MetricFlow writes optimized SQL on the fly to execute against the data platform
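MetricFlow's real SQL generation handles joins across entities, time spines, and many metric types, so the following is only a toy Python illustration of the core idea: given a description of a semantic model and a metric that wraps one of its measures, build a grouped SQL query on the fly. The class names, the `analytics.orders` table, and its columns are all hypothetical and do not reflect MetricFlow's internal API.

```python
from dataclasses import dataclass, field


@dataclass
class Measure:
    name: str
    agg: str   # e.g. "sum", "count", "count_distinct"
    expr: str  # column or SQL expression to aggregate


@dataclass
class SemanticModel:
    name: str
    table: str
    dimensions: list = field(default_factory=list)
    measures: dict = field(default_factory=dict)


@dataclass
class Metric:
    name: str
    measure: str  # name of the measure this simple metric wraps


def aggregate_sql(measure: Measure) -> str:
    # Translate the declared aggregation into a SQL aggregate expression
    if measure.agg == "count_distinct":
        return f"COUNT(DISTINCT {measure.expr})"
    return f"{measure.agg.upper()}({measure.expr})"


def compile_metric_query(model: SemanticModel, metric: Metric, group_by: list) -> str:
    """Build a grouped SELECT for one metric, rejecting dimensions the model doesn't define."""
    unknown = [d for d in group_by if d not in model.dimensions]
    if unknown:
        raise ValueError(f"Unknown dimensions for {model.name}: {unknown}")
    measure = model.measures[metric.measure]
    select_cols = list(group_by) + [f"{aggregate_sql(measure)} AS {metric.name}"]
    sql = f"SELECT {', '.join(select_cols)} FROM {model.table}"
    if group_by:
        sql += f" GROUP BY {', '.join(group_by)}"
    return sql


# Hypothetical semantic model and metric used in the usage example below
ORDERS = SemanticModel(
    name="orders",
    table="analytics.orders",
    dimensions=["order_date", "region"],
    measures={"order_total": Measure("order_total", "sum", "amount")},
)
REVENUE = Metric("revenue", "order_total")

if __name__ == "__main__":
    print(compile_metric_query(ORDERS, REVENUE, ["region"]))
    # SELECT region, SUM(amount) AS revenue FROM analytics.orders GROUP BY region
```

The consumer only names a metric and the dimensions to slice by; the SQL is derived from the definitions, which is why every caller ends up running the same logic.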

The dbt Semantic Layer sets itself apart from traditional metric calculations, which often tend to be defined (and hidden) in the BI layer (think LookML). Because metrics are defined in version-controlled code, they are much easier to adapt to change and to share with the people who use them. It’s a new feature of dbt Cloud that helps data and analytics engineers better serve their business users.

Simplifying data analysis with dbt Semantic Layer

The dbt Semantic Layer is novel because it provides a relatively easy-to-use endpoint for a variety of business users, exposing consistent metrics that don’t require getting deep into the data ecosystem. You can think of it like exposing a custom API endpoint to your organization without exposing the often-complex data model itself. Several benefits follow as a result:

- Improves data consistency and accuracy: In data analysis, data consistency and accuracy are non-negotiables. The dbt Semantic Layer acts like a quality control system, ensuring that the metrics you expose to your business are accurate and reliable across all systems.

- Streamlines the metric creation process: One of the biggest perks of the dbt Semantic Layer is how it integrates with your existing dbt project. Like the rest of your dbt project, all metric definitions are defined in code, version controlled, and can have important metadata attached to them. This creates a single environment where data teams can create, collaborate on, and refine metrics while keeping a revision history.

- Enhances collaboration between data teams and stakeholders: An often-overlooked aspect of data analysis is the collaboration between data teams and stakeholders. The dbt Semantic Layer acts as a shared workspace that brings everyone onto the same page without all the access fiascos that typically come along with it.

It’s a metric layer that doesn’t get in the way of governance or data management while also providing consistent results to anyone who needs them.

Implementing the dbt Semantic Layer

We’ve spent a lot of time talking about how handy the dbt Semantic Layer is for business users, but it’s also quite useful for developers and data engineers. Instead of dealing with a constant barrage of JIRA tickets for access to data models, repositories, and databases, you can now provide accessible, documentable, and scalable data endpoints to your organization.

The main steps to implementation are:

- Build a semantic model: Define your semantic model in YAML, which includes setting up your entities, dimensions, measures, and configurations (see instructions).

- Build your metrics: Define your metrics in YAML, which includes naming and describing what you’re calculating and sharing with users (see instructions).

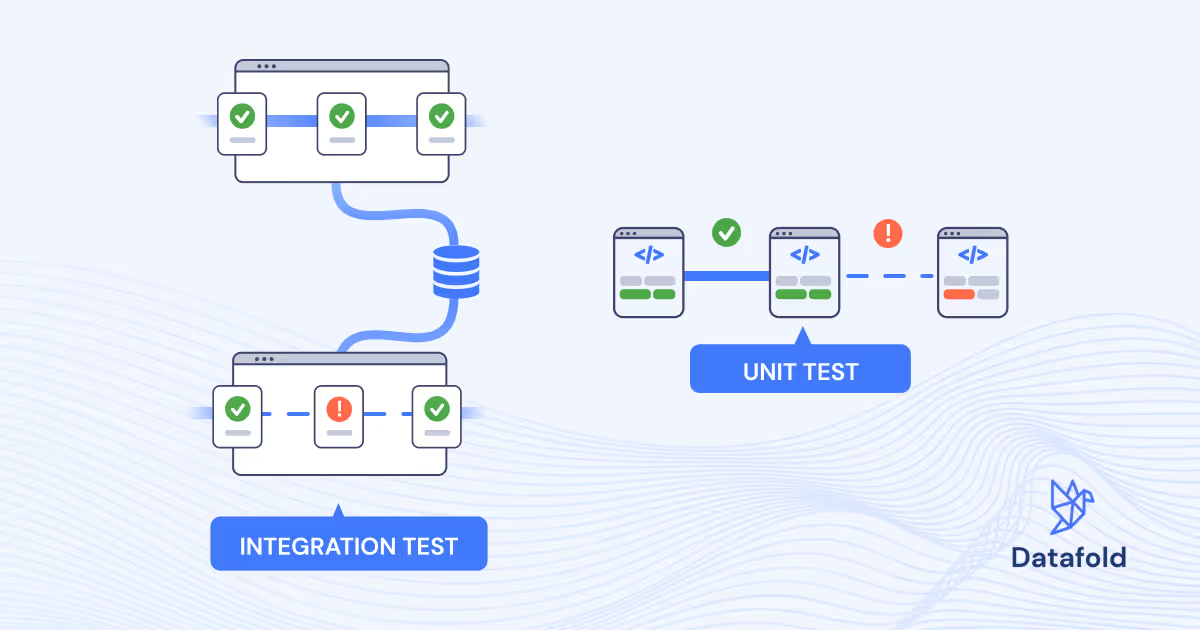

- Test your metrics: Validate the functionality of your semantic model and metrics in your integrations or with direct API requests.

- Tell the world your semantic model exists: Well, maybe don’t tell the world, but at least start telling your stakeholders. You may need to modify some existing BI reports that use different (older) metric definitions so they rely on the newer, Semantic Layer-powered metrics instead.
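In a dbt project, semantic models and metrics are written in YAML under top-level `semantic_models` and `metrics` keys. To keep every example here in one language, the sketch below mirrors that structure as Python dicts (the `orders` model, its columns, and the `revenue` metric are hypothetical) and runs a few toy consistency checks of the kind the "build" and "test" steps are meant to catch: every simple metric points at a measure that exists, and entity and dimension types are recognized. It is an illustration only, not a substitute for dbt's own validation, which checks far more.

```python
# Hypothetical definitions mirroring the shape of dbt's semantic layer YAML.
# In a real project these live in .yml files, not Python.
SEMANTIC_MODELS = [
    {
        "name": "orders",
        "model": "ref('orders')",
        "defaults": {"agg_time_dimension": "ordered_at"},
        "entities": [
            {"name": "order_id", "type": "primary"},
            {"name": "customer", "type": "foreign", "expr": "customer_id"},
        ],
        "dimensions": [
            {"name": "ordered_at", "type": "time", "type_params": {"time_granularity": "day"}},
            {"name": "region", "type": "categorical"},
        ],
        "measures": [
            {"name": "order_total", "agg": "sum", "expr": "amount"},
            {"name": "order_count", "agg": "sum", "expr": "1"},
        ],
    }
]

METRICS = [
    {"name": "revenue", "label": "Revenue", "type": "simple",
     "type_params": {"measure": "order_total"}},
]

ENTITY_TYPES = {"primary", "unique", "foreign", "natural"}
DIMENSION_TYPES = {"categorical", "time"}


def validate(semantic_models, metrics):
    """Return a list of problems found by these toy checks (empty list means they all pass)."""
    errors = []
    measure_names = set()
    for sm in semantic_models:
        for ent in sm.get("entities", []):
            if ent["type"] not in ENTITY_TYPES:
                errors.append(f"{sm['name']}: entity {ent['name']} has unknown type {ent['type']}")
        for dim in sm.get("dimensions", []):
            if dim["type"] not in DIMENSION_TYPES:
                errors.append(f"{sm['name']}: dimension {dim['name']} has unknown type {dim['type']}")
            elif dim["type"] == "time" and "time_granularity" not in dim.get("type_params", {}):
                errors.append(f"{sm['name']}: time dimension {dim['name']} needs a time_granularity")
        # Collect every measure so metrics can be checked against the full set
        measure_names.update(m["name"] for m in sm.get("measures", []))
    for metric in metrics:
        if metric["type"] == "simple":
            measure = metric.get("type_params", {}).get("measure")
            if measure not in measure_names:
                errors.append(f"metric {metric['name']} references unknown measure {measure}")
    return errors


if __name__ == "__main__":
    print(validate(SEMANTIC_MODELS, METRICS))  # []
    broken = METRICS + [{"name": "margin", "label": "Margin", "type": "simple",
                         "type_params": {"measure": "gross_margin"}}]
    print(validate(SEMANTIC_MODELS, broken))
```

Catching a dangling measure reference before the metric reaches a dashboard is far cheaper than explaining to stakeholders why a number suddenly went blank.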

Of course, it gets a bit more complex, especially depending on the types of metrics you’re exposing and how they’re being calculated. But that’s the basic gist. Get up and running with MetricFlow, create a few YAML files, test it out, and you’re good to go.

dbt semantic models are the cheat code for quick and easy metrics

This is a very high-level overview of the dbt Semantic Layer. It’s a rich feature that makes data accessible to all sorts of people in your organization. Since it’s primarily YAML-based, it’s relatively easy to configure and get up and running. Once you’ve got your data models in place in dbt, you’re pretty much set for deploying semantic models.

We love that you can deploy a semantic model within minutes, get optimized SQL queries out of the box, and skip futzing around with complex code implemented in different systems. Of course, there’s the prerequisite of having good data models in the first place, but that’s a whole different thing. With your models in place, it’s much easier to share that data with the people and apps that need it. The team at dbt even exposed a semantic model to an LLM, which is a great application of the tech.

Anyone who’s followed us for a while knows we’re huge fans of dbt and we think it’s a critical piece of the modern data stack. We use it extensively and it’s tightly integrated with Datafold Cloud. Use dbt to build the model, use Datafold to automate and test all the data going into your model, and use the dbt Semantic Layer to build consistent metrics to expose to whoever needs them.
