dbt lineage: What it is, and why it's important

dbt provides native data lineage capabilities. Learn how and why data teams leverage dbt lineage for data discovery and impact analysis.

Data lineage is the way your data moves its way through your data pipelines: the process of how raw source data is transformed into usable models of logic and metrics that can then be consumed by end business users. Most data lineage is represented in a visual format called a DAG (directed acyclic graph) and supported with cataloging and metadata, so data teams have full knowledge into how and why their data moves in a certain way.

Many modern data and analytics engineers leverage dbt, the open source and SaaS cloud version, to transform their data and leverage dbt's native data lineage to perform data discovery and impact analysis.

In this blog, we will unpack why dbt lineage is useful for data teams, the limitations to dbt's native lineage, and how data teams can support dbt lineage with robust column-level lineage and proactive impact analysis testing.

Why data lineage is important to dbt projects

Data and analytics engineers need data lineage tools for two primary reasons:

1. Understanding downstream lineage to prevent bad deploys (and broken dashboards!)

2. Leveraging upstream lineage for data discovery

Downstream lineage for data quality

By leveraging lineage information, data teams can help prevent breaking deploys to downstream assets and if incidents do happen (because let's be honest, they still happen), use lineage to identify the root cause of the problem.

Impact analysis during deployment testing

Scenario: You are bout to make a change to a core dbt model to my business and you want to prevent any negative impact (broken downstream data models, stakeholders surprised by the changed definition, etc.). You explore your data lineage and trace the dependencies to figure out:

- What assets are downstream

- How important are they (e.g. are known high-priority tables/dashboards on the downstream path)

- Are they going to be affected based on the context of the change you made

Impact analysis and root cause analysis during incident resolution

Scenario: There is an active data incident, e.g. an event stopped firing/ an important column is NULL in your raw data starting yesterday. You are doing downstream impact analysis to identify which data models, Looker assets, and key business users may be affected so you can proactively communicate with them, as well as assess the criticality of the incident.

Upstream lineage: Data discovery

Scenario: You are a business user and you're about to use a dashboard created by the data team to make as business-critical decision, so you need to understand its meaning, quality, and trustworthiness.

I was asked by the CEO to calculate weekly active users. I found a Looker dashboard that mentions it. I want to understand what is the source of that metric is and how it’s calculated. Are lossy front-end or reliable backend events are used? What defines “active”? Are internal users filtered out? By tracing the lineage graph from the Looker dashboard upstream and exploring SQL for each table along the way, I can answer these questions.

When you're put in this decision, using data lineage can help better understand the metadata around the asset, the upstream dependencies (and their quality), and ultimately, how reliable that asset is for your use cases.

What data lineage features are available dbt Core and Cloud

dbt Core—dbt's open source framework—ships with a single-project table-level lineage. The lineage graph is constructed by relying on the ref(‘model’) templating to identify upstream dependencies. With dbt Core, you can run some simple commands (dbt docs generate && dbt docs run) to view dbt docs and the data lineage graph (called the DAG) for your project on a local host. The DAG populated by dbt is also easily navigable through the use of selectors to filter on upstream assets and downstream dependencies.

![dbt exposures: A powerful [and underused] feature](https://cdn.prod.website-files.com/66e408a2a1c53743a642f485/66feb046b42ef43600fd471a_66fa1797e790e2bdb8e93456_663be9e52eda75f50fc8b6ef_633f3eadfa9bfd6dcaca52f6_MOzMYc97LYjqPuH1TleK40WyuLPONrHGoSIW2iUzWhFbT_vBv8a3l_JM3ZzIbk8e7-SdyOihBxQ_lkhfkRpjjz2g_ZEOw5ZngBPF_UNF4H4nrEg3k8P7yu8k89ct6cYLrw4Rg_c6OtlLm4UhYoUGDbW3nD7QBQwJ0du8KqtPhz7CM4IzeMP5JphOUg.avif)

Other teams using dbt Core choose to host the production-version of their dbt docs on a static website, such as hosting it through S3.

dbt Cloud relies on actual parsing of the compiled dbt project’s code to compute lineage. This allows it to provide lineage on a columnar-level, but only within the dbt project and without connections to downstream BI tools. This means that, for example, a table built with Airflow or Dagster that is referenced by a dbt model or a Tableau dashboard querying a dbt model wouldn’t be coveted by dbt’s lineage. dbt Cloud users are able to view their lineage via the DAG in the dbt Explorer, the data catalog part of the dbt Cloud product experience. dbt Cloud users can also leverage the Discovery API to programmatically query their DAG and lineage.

Both dbt Core and dbt Cloud users can choose to define metadata (e.g., description, owners) for their dbt models, dbt tests, and dbt exposures. Much of this dbt metadata would be visible in the dbt docs site.

Does dbt's lineage support column-level lineage?

dbt Core does not currently support column level lineage. However, dbt Cloud Enterprise users have access to their new column-level lineage feature. All dbt users have model-level lineage accessible.

Limitations of native dbt data lineage

The fact that dbt ships with basic lineage is great and can be immensely useful for teams running smaller dbt projects. While lineage provided by dbt is a step forward relative to the earlier-generation orchestrators, the lineage methods available in both dbt Core and dbt Cloud’s have three major limitations that are especially important at scale:

#1: Lineage scope is limited to dbt-only

dbt lineage is limited only to whatever data assets are produced by dbt. Anything produced outside of dbt, e.g. other data orchestrators like Airflow or Dagster, stored procedures, etc. are not covered. While this may be fine for teams that use dbt exclusively, it may be substantially limiting for larger data platforms that combine multiple orchestrators given that dbt is a SQL-centric framework.

#2: Lack of automatic tracking of BI dependencies

Knowing the lineage between models is useful, but ultimately what matters is that the data we produce as a team is successfully leveraged by the business. This usually happens with BI and data apps such as Looker, Tableau, Hightouch, etc. Tracking the lineage from dbt models to data apps is essential to data discovery and quality workflows. While dbt exposures attempt to solve this problem, it requires all the dependencies to be defined and kept up-to-date manually which is a tall order for a large team.

#3: Lack of automatic impact analysis in CI

As we defined earlier, one of the key use cases for lineage in a dbt workflow is preventing breaking data and BI applications downstream when making changes to the dbt code.

For that, automation and insertion in the CI (continuous integration) workflow is essential. While having lineage accessible ad hoc is helpful, at scale it’s not feasible to rely on each developer to look up lineage when proposing or reviewing code changes in a pull request. The only way to reliably prevent breaking changes is to run impact analysis in CI and automatically highlight breaking changes and impacts on data.

Using dbt + Datafold for automated and comprehensive column-level lineage

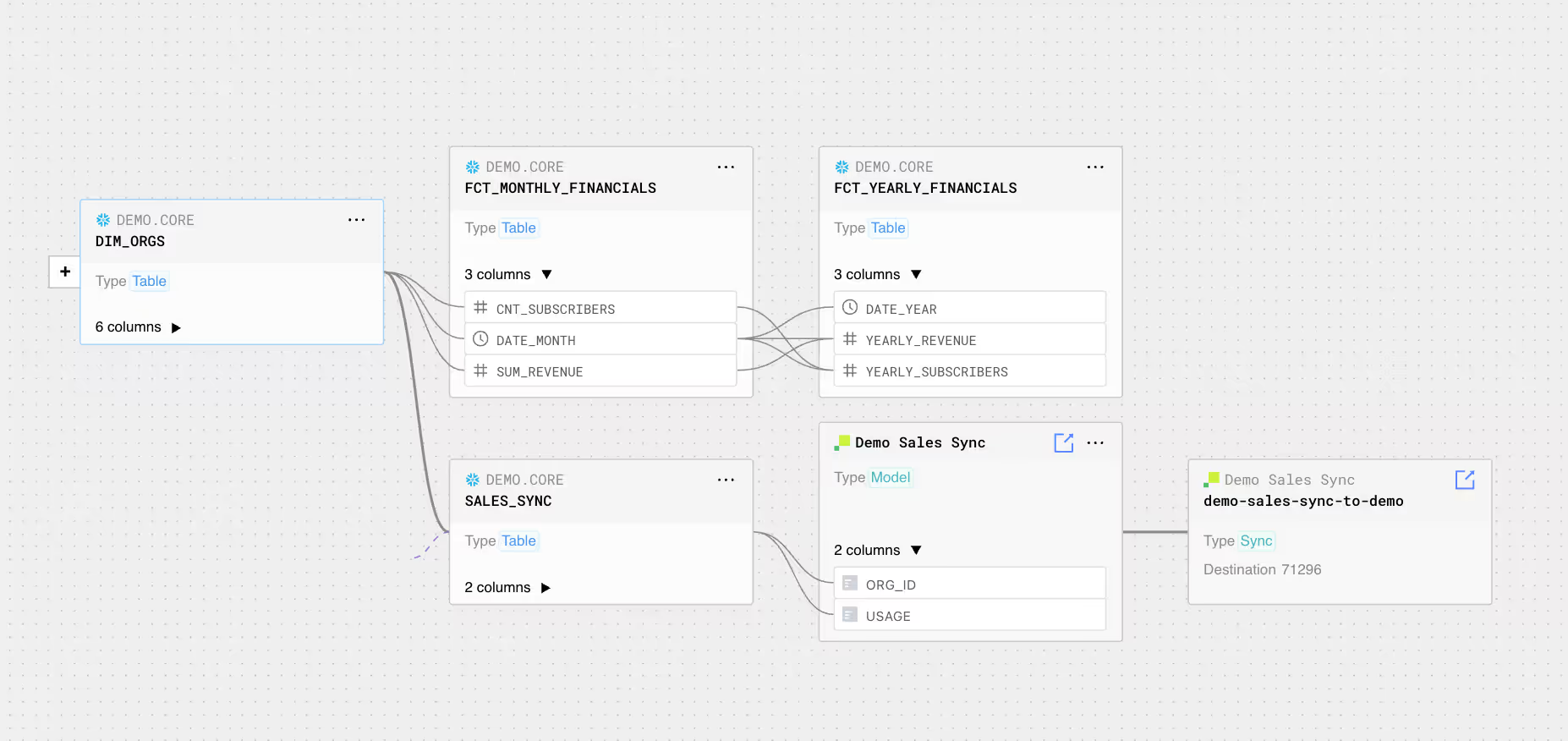

Datafold complements dbt with the automated column-level lineage that covers all major BI tools. Unlike dbt exposures (that need to be defined manually) and dbt Core/Cloud lineage (that covers only dbt code), Datafold relies on full semantic parsing of SQL logs from the warehouse and combining that with metadata from BI tools to form a complete dependency graph that covers the entire warehouse, including, but not limited to, source data, dbt models, and data app assets (e.g., BI dashboards, reverse ETL sync, machine learning models).

And Datafold is not just a data lineage tool! Integrated in your CI process, Datafold automatically computes data diffs showing how the data changes when the changes to dbt code are made, and identifies impacted downstream applications such as reverse ETL syncs in Hightouch.

.avif)

Conclusion: Comparing three lineage options for your dbt project

Data lineage is a core part of data management, and there are many ways data teams can access lineage for their dbt projects. Below is a table summarizing data lineage options used by data engineers to understand the movement of data across their dbt project and larger organization.

.avif)