Datafold: From Breaking Data to Series A

Datafold Founder and CEO, Gleb Mezhanskiy, shares what prompted Datafold's creation, how it has grown, and plans for the future.

Datafold, a data reliability platform, raised a $20M Series A to help more teams deliver high-quality data products faster. And we are hiring!

The data domain is at an inflection point. With the rapid adoption of data at every level of decision-making by both people (BI) and machines (ML), data quality becomes product quality – one impacts the other directly. And data quality is not in a great state: it is a major problem for data teams and consistently their top focus area. Without a significant change in how we approach data reliability, further progress will be linear at best. Datafold is on a mission to remove this bottleneck.

I saw the data quality problem first-hand in my career. As a Data Engineer at Lyft, I got a chance to both break data on a massive scale and then build internal tooling that helped avoid such mistakes. A few other mega tech companies also invested millions into building internal data quality products. However, most data teams in the industry still don't have specialized tools to help them.

So we started Datafold to enable every data team to deliver high-quality data products.

One of the major causes of poor data quality, besides the sheer volume and complexity of data, is the huge amount of manual work in data teams’ workflows. This doesn’t mean that they are doing calculations by hand, of course. Instead, they spend time on tasks that could be automated, such as writing boilerplate code to get a basic understanding of the data, visually checking charts for anomalies, and reading thousands of lines of code to trace dependencies.

There is a reason why data observability and quality tooling haven’t evolved sooner: to move the needle, you need to solve lots of complex problems at scale — these range from static analysis of code to providing data lineage to machine learning for anomaly detection.

But the hardest part of data reliability is human: even the most technically advanced tools will fail to have an impact unless they deeply integrate into and improve existing workflows.

It's not helpful to have a tool that bombards you with dozens of notifications about data anomalies. If you work with a data warehouse of over 30K tables and 1M+ columns, which is not unusual these days, at least a few hundred things will seem broken every day.

We believe that tools should remove work and not add more of it to already overloaded data teams. So we started with a somewhat different question: how can we prevent data quality issues in the first place, before they get into production, so there is less need to react to what is already broken?

Since all data is processed by code – SQL, Scala, R, etc. – written by people, most data quality issues happen because of bugs introduced in the code. And bugs slip through because, for the developer, it's difficult to fully assess the impact of a change to source code on the data. And so, our first tool for proactive data testing – Data Diff – was born. It helps teams achieve 100% test coverage without sacrificing development speed by providing detailed impact analysis for every code change. Although we initially thought that such a product would be most valuable for larger data teams, it got as much traction among teams as lean as one person. The sentiment was common: move fast with confidence in your data.
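To make the idea of a data diff concrete, here is a minimal sketch in Python. It is not Datafold's implementation: the function `diff_tables`, the sample tables, and the column names are all hypothetical, and a real tool would run the comparison inside the warehouse rather than in memory. The sketch only shows the core idea of comparing the output of the current code with the output of the changed code, row by row and column by column.

```python
from typing import Any

Row = dict[str, Any]


def diff_tables(before: list[Row], after: list[Row], key: str) -> dict[str, Any]:
    """Compare two versions of a table, matching rows on a primary key.

    Hypothetical helper for illustration only.
    """
    before_by_key = {row[key]: row for row in before}
    after_by_key = {row[key]: row for row in after}

    # Keys present in only one of the two versions.
    added = sorted(after_by_key.keys() - before_by_key.keys())
    removed = sorted(before_by_key.keys() - after_by_key.keys())

    # For keys present in both versions, find the columns whose values differ.
    changed: list[Any] = []
    changed_columns: dict[str, int] = {}
    for k in sorted(before_by_key.keys() & after_by_key.keys()):
        old, new = before_by_key[k], after_by_key[k]
        differing = [
            col for col in sorted(old.keys() | new.keys())
            if old.get(col) != new.get(col)
        ]
        if differing:
            changed.append(k)
            for col in differing:
                changed_columns[col] = changed_columns.get(col, 0) + 1

    return {
        "added": added,
        "removed": removed,
        "changed": changed,
        "changed_columns": changed_columns,
    }


# Made-up sample data: the same table built by the current code ("prod")
# and by the code under review ("dev").
prod = [
    {"id": 1, "amount": 10.0, "status": "paid"},
    {"id": 2, "amount": 5.0, "status": "paid"},
    {"id": 3, "amount": 7.5, "status": "refunded"},
]
dev = [
    {"id": 1, "amount": 10.0, "status": "paid"},
    {"id": 2, "amount": 5.5, "status": "paid"},
    {"id": 4, "amount": 1.0, "status": "paid"},
]

report = diff_tables(prod, dev, key="id")
print(report)
```

On this sample, the report shows one added row, one removed row, and one changed row whose `amount` differs, which is the kind of summary a developer would want to see before merging a change.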

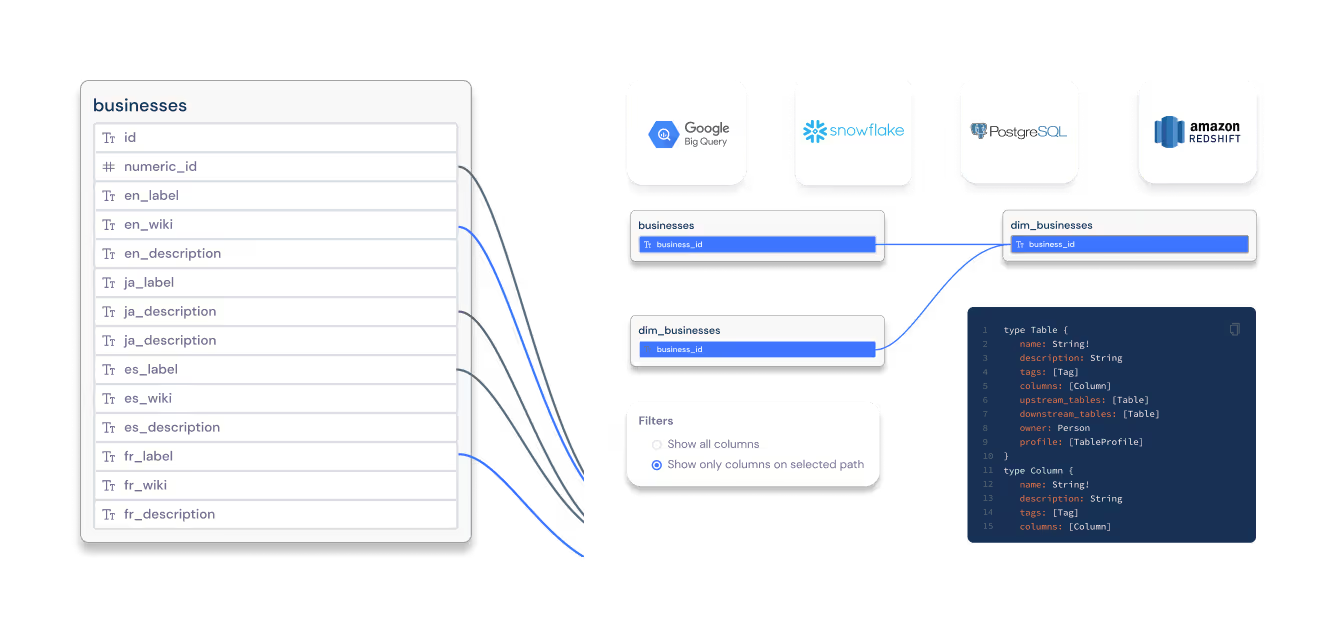

Since launching Data Diff in Summer 2020, we have built other fundamental features for data quality and observability – Catalog, Column-level Lineage, and Data Monitoring. Together, they enable us to support all major workflows in data engineering, including finding trustworthy data, understanding its sources and using it correctly, testing data transformation code, and monitoring pipelines in production.

We are thankful to our early adopters, including Patreon, Thumbtack, Faire, and Dutchie – some of the world's leading data teams. They identified with our vision, trusted Datafold to be the gatekeeper of their data, and helped shape our roadmap as we built out the core of the Datafold platform.

Our progress would also not have been possible without our Data Quality Meetup community of over 1,200 members, many of whom contributed invaluable ideas and opinions to advance best practices in data reliability.


Since I announced our Seed round almost exactly a year ago, Datafold has grown from a team of three to an international team of 18. Because we started building our MVP a week before the first wave of lockdowns, we embraced all-remote work from the very beginning.

Given our goal of becoming the go-to solution for ensuring data quality, it was essential for us to partner with investors who believe in our somewhat contrarian approach to the problem and who have deep expertise and a proven track record in the data space.

And so today, I am excited to announce that Datafold has raised a $20M Series A round led by NEA and joined by Amplify Partners – firms behind such foundational data products as Databricks and dbt. Peter Sonsini, NEA General Partner, is joining our board. We couldn't think of better investment partners to accelerate our progress.

Building tools is exciting because you have tremendous leverage: you can elevate all data teams at once, especially when solving such a massive bottleneck as data reliability.

We are very excited about what comes next and invite you to the next chapter of our journey. You can follow our product evolution in our Changelog and subscribe to our weekly newsletter, Folding Data.

One of our first plans with the new funding is to expand our team. If you love building tools or are passionate about finding ways to do data better – join us!
