Teradata to Snowflake Migration: Challenges, Best Practices & Practical Guide

Embarking on a migration from Teradata to Snowflake? Let's make it simpler for you. Our guide breaks down the process, spotlighting the differences between Teradata's on-premises system and Snowflake's cloud-based approach. We explain what these differences mean for your data strategy, focusing on key areas like storage, processing, and scalability, as well as crucial business aspects like stakeholder alignment, budgeting, and minimizing downtime. Plus, we share four essential tips for a smooth migration, from choosing the right tech and prioritizing assets to efficient data validation.

Lastly, Datafold’s AI-driven approach makes migration faster, more accurate, and more cost-effective than traditional methods. With automated SQL translation and data validation, Datafold reduces the strain on data teams and helps avoid the lengthy timelines and high costs typical of in-house or outsourced migrations.

This lets you complete a full-cycle migration with precision, often in a matter of weeks or months rather than years, so your team can focus on delivering high-quality data to the business. If you’d like to learn more about the Datafold Migration Agent, please read about it here.

Common Teradata to Snowflake migration challenges

Technical challenges

In transitioning from Teradata to Snowflake, data engineers encounter a range of technical hurdles that demand careful attention. We start with two of them, architecture and SQL dialect differences, both of which can significantly impact the migration process. Understanding the core architectural differences is crucial for a successful migration, because they influence everything from data distribution strategies to query performance and overall system scalability.

Architecture differences

While there are many differences between Teradata and Snowflake, a major architectural difference that affects the migration approach is the storage-compute coupling.

Teradata's traditional, (typically) on-premises architecture contrasts sharply with Snowflake's cloud-based data warehouse model. This shift from a physical infrastructure to a service-oriented architecture demands a fundamental change in managing data scalability, storage, and computing resources.

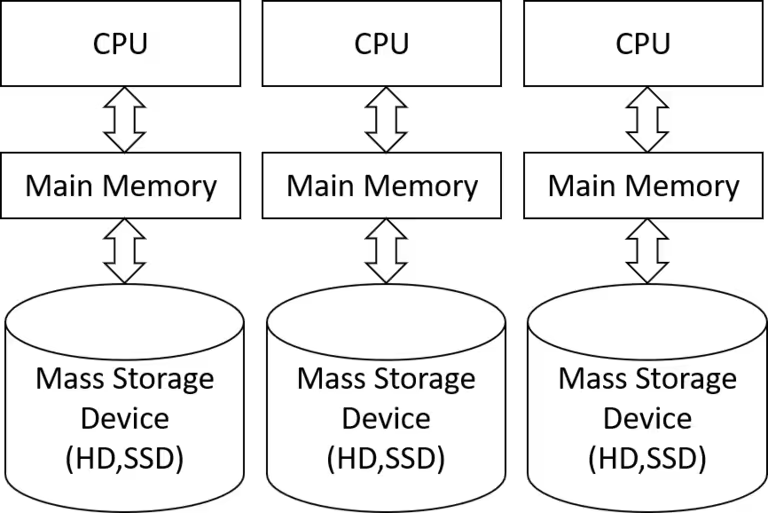

Specifically, Teradata employs a Massively Parallel Processing (MPP) architecture, distributing data across numerous nodes for parallel processing. This approach tightly integrates data storage with computational resources.

Teradata’s architecture explained

A Teradata deployment consists of a cluster of Access Module Processors (AMPs), which are the workhorses behind its data processing capabilities. Each AMP is deployed on a server instance, taking on a portion of both compute and storage responsibilities for the entire cluster. This setup means that storage and compute are coupled, with a specific segment of data being stored and processed on the same physical machine.

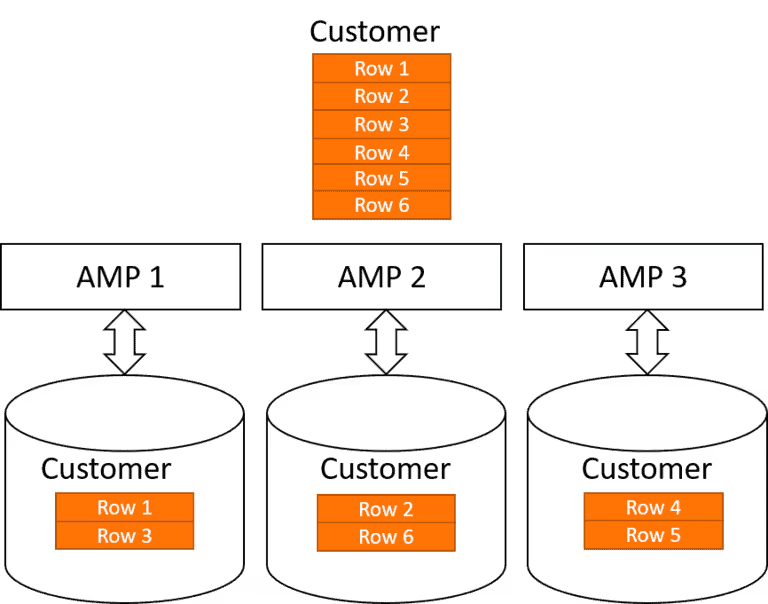

This coupling has profound implications for how Teradata manages data and query performance. Similar to other Massively Parallel Processing (MPP) systems like Amazon Redshift, Teradata employs a hashing algorithm to distribute data across its AMPs: each row is assigned to an AMP based on the hash of its primary index value.
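To make the hash distribution concrete, here is a minimal Teradata SQL sketch. The `orders` table and its columns are hypothetical and used only for illustration; `HASHROW`, `HASHBUCKET`, and `HASHAMP` are Teradata's built-in hash functions.

```sql
-- Teradata: the PRIMARY INDEX column is hashed to decide which AMP stores each row.
-- Table and column names are hypothetical.
CREATE TABLE orders (
    order_id     INTEGER NOT NULL,
    customer_id  INTEGER NOT NULL,
    order_amount DECIMAL(12,2)
)
PRIMARY INDEX (customer_id);

-- Count rows per AMP to see how evenly the chosen key spreads the data.
SELECT HASHAMP(HASHBUCKET(HASHROW(customer_id))) AS amp_no,
       COUNT(*)                                  AS row_count
FROM orders
GROUP BY 1
ORDER BY 2 DESC;
```

If a handful of AMPs hold most of the rows, the key is skewed, and queries that touch this table will tend to wait on the busiest AMP.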

This method is efficient but introduces three challenges for scalability and performance:

- Dependence on hashing key for query performance: Query performance depends on whether the joins and filters in a query use the hashing key. When they don't, performance drops because of the additional overhead of redistributing data across AMPs.

- Scaling limitations: To scale storage or compute capabilities to process more queries, more nodes need to be added. This can be a costly operation that requires data redistribution among the new and existing nodes. Though the introduction of Teradata VantageCloud allows for compute-only clusters, they still depend on a Primary Cluster for storage. This dependency can quickly become a bottleneck, limiting scalability.

- Elasticity constraints in compute demand: The architecture of Teradata shows limited flexibility in scaling up or down in response to fluctuating business demands for query processing. Since a cluster storing data cannot be easily adjusted, this often leads to either underutilized resources or performance bottlenecks, impacting cost-efficiency.

Snowflake’s architecture explained

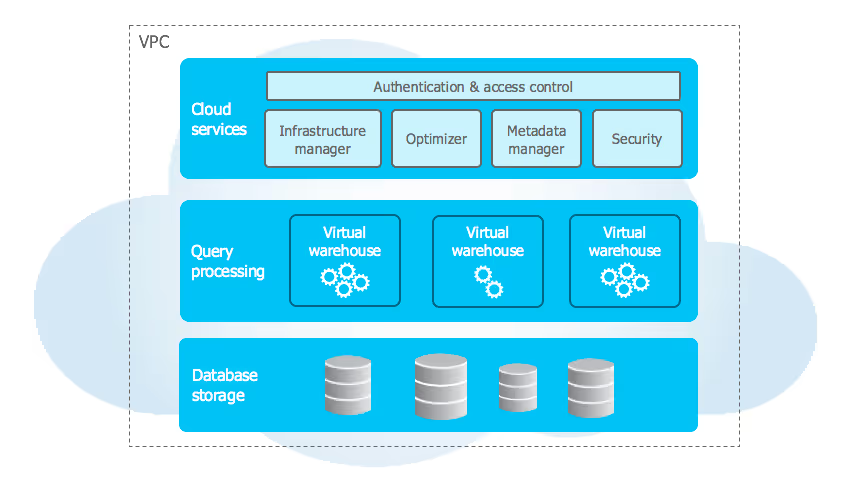

By contrast, Snowflake’s cloud-native, multi-cluster, shared data architecture separates compute and storage functions. This separation allows for more flexible scaling and efficient resource utilization.

Because Snowflake’s storage and compute layers are decoupled, either layer can be scaled independently and on demand. You can dynamically adjust compute resources; more virtual warehouses can be spun up in the morning when data analysts refresh dashboards and then scaled down overnight when data use is low.
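As a rough illustration of that elasticity, the Snowflake statements below create and resize a virtual warehouse. The warehouse name and the sizes are assumptions chosen for the example, and multi-cluster settings (`MIN_CLUSTER_COUNT` / `MAX_CLUSTER_COUNT`) require Snowflake Enterprise Edition or higher.

```sql
-- Hypothetical warehouse for dashboard queries.
CREATE WAREHOUSE IF NOT EXISTS analytics_wh
  WAREHOUSE_SIZE    = 'MEDIUM'
  MIN_CLUSTER_COUNT = 1          -- multi-cluster: start with one cluster
  MAX_CLUSTER_COUNT = 4          -- add clusters as concurrent queries queue up
  SCALING_POLICY    = 'STANDARD'
  AUTO_SUSPEND      = 300        -- suspend after 300 seconds of inactivity
  AUTO_RESUME       = TRUE;      -- resume automatically on the next query

-- Scale up for the morning dashboard refresh...
ALTER WAREHOUSE analytics_wh SET WAREHOUSE_SIZE = 'LARGE';

-- ...and back down when usage is low.
ALTER WAREHOUSE analytics_wh SET WAREHOUSE_SIZE = 'XSMALL';
```

None of these statements move or redistribute stored data, which is the practical consequence of separating compute from storage.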

This adaptability ensures high efficiency and a better match with varying business demands for data processing. Furthermore, managing these operations is user-friendly, with straightforward controls accessible via an API or UI, requiring minimal specialized knowledge.

Benefits of migration to Snowflake

- Reduced DevOps effort: Migrating to Snowflake significantly lessens the workload associated with DevOps. Unlike Teradata, whose many components (often deployed on-premises) need regular maintenance and optimization, Snowflake abstracts away internal complexities. This abstraction translates to substantial time savings, as users spend far less time on system maintenance and performance optimization.

- Shift from an ETL to ELT paradigm: Due to the scalability limitations of Teradata, teams commonly perform data filtering and transformations before loading into Teradata. This limits the range of data that can be queried by Teradata users. In contrast, Snowflake’s near-infinite scalability supports a more efficient ELT pattern where raw data can be ingested and efficiently stored in Snowflake to be used on demand.

- Simplified and more flexible table layout: Efficient data management in Teradata requires carefully selecting table layouts, indices, and strategies for data distribution across nodes. Snowflake handles much of this complexity automatically. It offers a clustering option for performance optimization in handling extremely large tables, but without the intricate setup requirements seen in Teradata. This simplicity allows for a more streamlined data organization and management process.
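The difference in table layout can be sketched with a pair of DDL statements. The `sales` table, its columns, and the date range are hypothetical; the point is only to show which physical design decisions each platform asks for.

```sql
-- Teradata: distribution (PRIMARY INDEX) and partitioning are chosen up front.
CREATE MULTISET TABLE sales (
    sale_id   INTEGER NOT NULL,
    store_id  INTEGER NOT NULL,
    sale_date DATE NOT NULL,
    amount    DECIMAL(12,2)
)
PRIMARY INDEX (sale_id)
PARTITION BY RANGE_N (
    sale_date BETWEEN DATE '2020-01-01' AND DATE '2029-12-31'
    EACH INTERVAL '1' MONTH
);

-- Snowflake: no distribution key or partition definition is required.
CREATE TABLE sales (
    sale_id   INTEGER NOT NULL,
    store_id  INTEGER NOT NULL,
    sale_date DATE NOT NULL,
    amount    NUMBER(12,2)
);

-- Optional, and usually only worthwhile for very large tables.
ALTER TABLE sales CLUSTER BY (sale_date);
```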

SQL dialect differences

The SQL variations between Teradata and Snowflake are more than just a matter of syntax; they reflect distinct approaches to data handling, which can cause significant compatibility issues during migration. Complex enterprise-level code, custom stored procedures, and dialect-specific features often require extensive manual rewrites to function correctly in Snowflake. Traditional translation tools often struggle with these complexities, turning what might seem like a straightforward task into a months- or even years-long process.

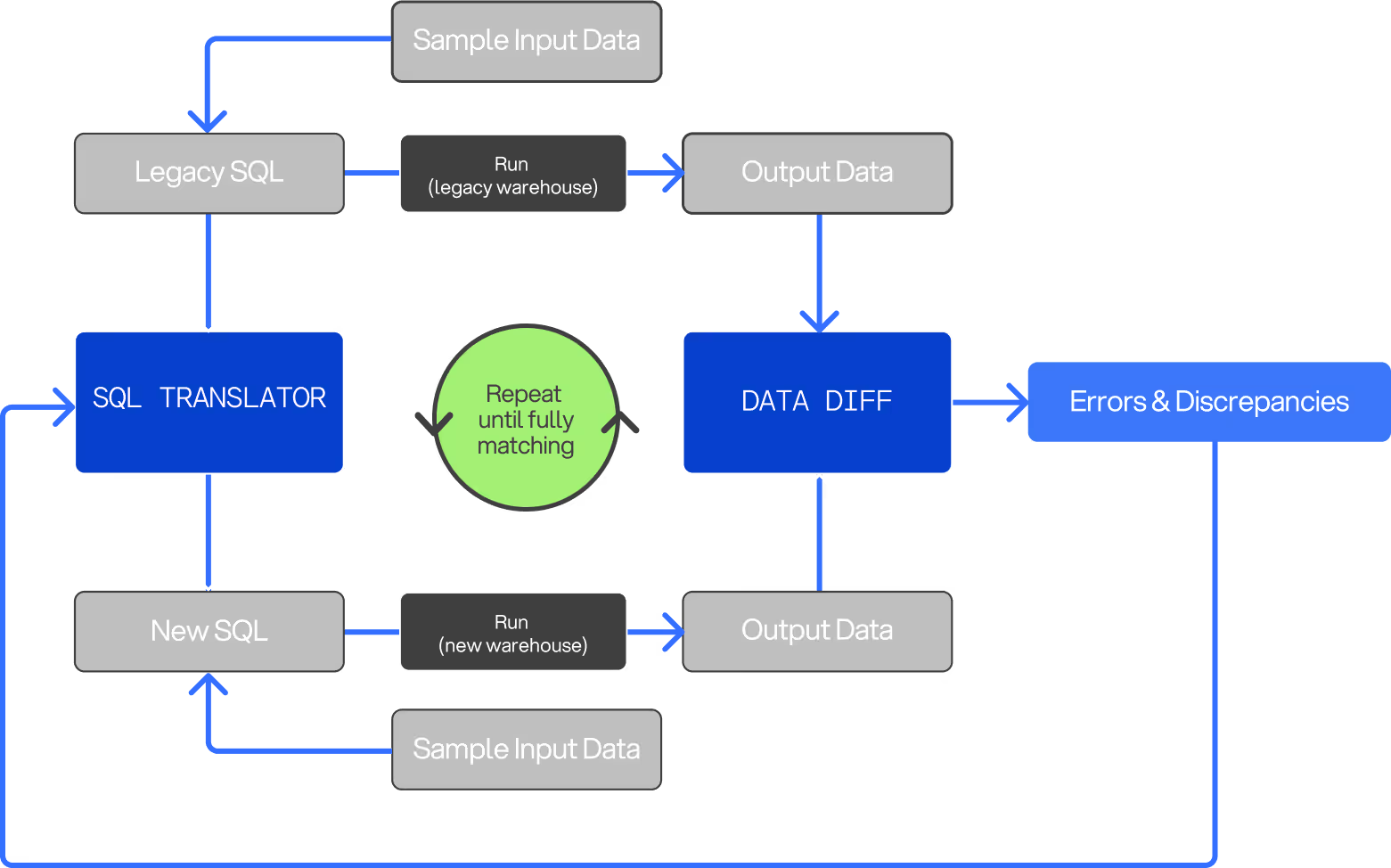

Datafold’s Migration Agent simplifies this challenge by automating the SQL conversion process, seamlessly adapting Teradata SQL code—including stored procedures, functions, and queries—for Snowflake. This automation preserves critical business logic while significantly reducing the need for manual intervention, helping teams avoid the lengthy, resource-intensive rewrites that are typical in traditional migrations.

Teradata SQL

Teradata uses its own variant of SQL, known as Teradata SQL, which includes syntax and functions tailored to its architecture. For instance, Teradata's optimizer relies on join strategies such as merge joins that are tuned for its parallel processing environment. It also offers proprietary functions for data manipulation and analysis, as well as specific syntax for managing its indexing and partitioning features.

Snowflake SQL

Snowflake SQL is based on ANSI SQL, with additional enhancements and modifications to complement its cloud-native architecture. While it supports most standard SQL functionality, Snowflake also introduces specific functions and features, such as the FLATTEN table function (typically used with LATERAL), designed to handle semi-structured data formats such as JSON, XML, and Avro.
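For example, here is a minimal sketch of FLATTEN in use. It assumes a hypothetical `raw_events` table with a VARIANT column named `payload` that holds JSON orders, each with an `items` array.

```sql
-- Snowflake: expand a JSON array into one row per array element.
-- raw_events, payload, and the JSON field names are hypothetical.
SELECT
    e.payload:order_id::NUMBER  AS order_id,
    item.value:sku::STRING      AS sku,
    item.value:quantity::NUMBER AS quantity
FROM raw_events AS e,
     LATERAL FLATTEN(input => e.payload:items) AS item;
```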

Example of dialect differences between Teradata and Snowflake: the TOP WITH TIES clause

A prominent Teradata feature that works differently in Snowflake is the TOP WITH TIES clause, which returns the top N rows from a result set, along with any additional rows that tie for last place in the top N based on the ordering criteria.

The following query returns the top 10 orders by OrderAmount, and if there are more orders with the same amount as the 10th order, those are included as well.

Query in Teradata SQL
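A minimal sketch of such a query, assuming a hypothetical `Orders` table with `OrderID` and `OrderAmount` columns:

```sql
-- Teradata: top 10 orders by amount, plus any rows tied with the 10th.
SELECT TOP 10 WITH TIES
    OrderID,
    OrderAmount
FROM Orders
ORDER BY OrderAmount DESC;
```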

Equivalent Query in Snowflake SQL

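Snowflake SQL doesn't have a direct equivalent of TOP WITH TIES. Instead, you can use the RANK window function in combination with a WHERE clause to achieve the same result. (DENSE_RANK is not a drop-in substitute: it would return every row among the ten highest distinct amounts, which can be more rows than TOP 10 WITH TIES.) Here's how you could replicate the above query in Snowflake: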
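A minimal sketch, again assuming a hypothetical `Orders` table with `OrderID` and `OrderAmount` columns:

```sql
-- Snowflake: rank the orders, then keep ranks 1 through 10 (ties share a rank).
SELECT OrderID, OrderAmount
FROM (
    SELECT
        OrderID,
        OrderAmount,
        RANK() OVER (ORDER BY OrderAmount DESC) AS order_rank
    FROM Orders
) AS ranked
WHERE order_rank <= 10
ORDER BY OrderAmount DESC;
```

Snowflake's QUALIFY clause can express the same filter without the subquery, if you prefer a more compact form.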

In this Snowflake query, RANK() is used to assign a rank to each order based on OrderAmount. The WHERE clause then filters the results to include only those rows where the rank is 10 or less, effectively including ties for the 10th place.

Other notable SQL syntax differences include Teradata’s extensive DDL, much of which is not compatible with Snowflake SQL, and Teradata’s support for the abbreviated SEL and DEL statements, which Snowflake does not support.
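As a small illustration of the abbreviated statements, using a hypothetical `Orders` table:

```sql
-- Teradata accepts abbreviated keywords:
SEL OrderID, OrderAmount FROM Orders WHERE OrderAmount > 1000;
DEL FROM Orders WHERE OrderAmount IS NULL;

-- Snowflake requires the full keywords:
SELECT OrderID, OrderAmount FROM Orders WHERE OrderAmount > 1000;
DELETE FROM Orders WHERE OrderAmount IS NULL;
```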

Validation as a black box

Validation can become a “black box” in migration projects, where surface-level metrics like row counts may align, but hidden discrepancies in data values go undetected.

Traditional testing methods often miss deeper data inconsistencies, which can lead to critical issues surfacing only after the migration reaches production. Each failed validation triggers time-intensive manual debugging cycles, delaying project completion and straining resources.
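One way to look past row counts is to run the same aggregate profile on both systems and compare the results. The query below is a sketch that assumes a hypothetical `Orders` table with `OrderID` and `OrderAmount` columns; it uses only standard SQL, so it should run on both Teradata and Snowflake.

```sql
-- Run on both source and target, then compare the single result row.
SELECT
    COUNT(*)                                             AS row_count,
    COUNT(DISTINCT OrderID)                              AS distinct_order_ids,
    SUM(OrderAmount)                                     AS total_amount,
    MIN(OrderAmount)                                     AS min_amount,
    MAX(OrderAmount)                                     AS max_amount,
    SUM(CASE WHEN OrderAmount IS NULL THEN 1 ELSE 0 END) AS null_amounts
FROM Orders;
```

Matching profiles are still not proof of parity, since offsetting differences can cancel out in the aggregates. Only a row-level comparison settles that.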

Business challenges

Migrating to Snowflake involves not just technical, but also business challenges. Stakeholder alignment, budget constraints, and the impact on ongoing operations are critical considerations. Ensuring minimal downtime and aligning the migration with business goals are paramount. We look at the top four concerns here.

The never-ending “last mile”

Even when a migration is near completion, the “last mile” often proves to be the most challenging phase. During stakeholder review, previously unidentified edge cases may emerge, revealing discrepancies that don’t align with business expectations. This phase often becomes a bottleneck, as each round of review and refinement requires time and resources, potentially delaying full migration and user adoption.

Stakeholder alignment

It is critical to ensure that all stakeholders are on the same page; involve stakeholders from various departments early in the planning process to foster buy-in and address any concerns or misconceptions about the migration. Communication is key to managing expectations and ensuring that the migration aligns with the broader business objectives.

Budget constraints

Budgeting for a migration project is a complex task that goes beyond mere tooling and resource allocation. It should include the cost of running two databases in parallel, potential downtime, training of personnel, data migration services, and post-migration support and maintenance. A detailed cost-benefit analysis should be conducted to ensure the migration is financially viable and to prevent unexpected expenses.

Minimizing downtime

Downtime can have significant implications for business operations and revenue, so maintaining business continuity during the migration is vital. Strategies such as incremental data migration, where data is moved in phases, can help minimize downtime. Thorough testing before full-scale implementation is crucial to ensure a smooth transition with minimal disruptions. A lift-and-shift approach also helps keep data continuously available to end users and reduces the risk of data integrity issues in the new system.

4 best practices for Teradata to Snowflake migration

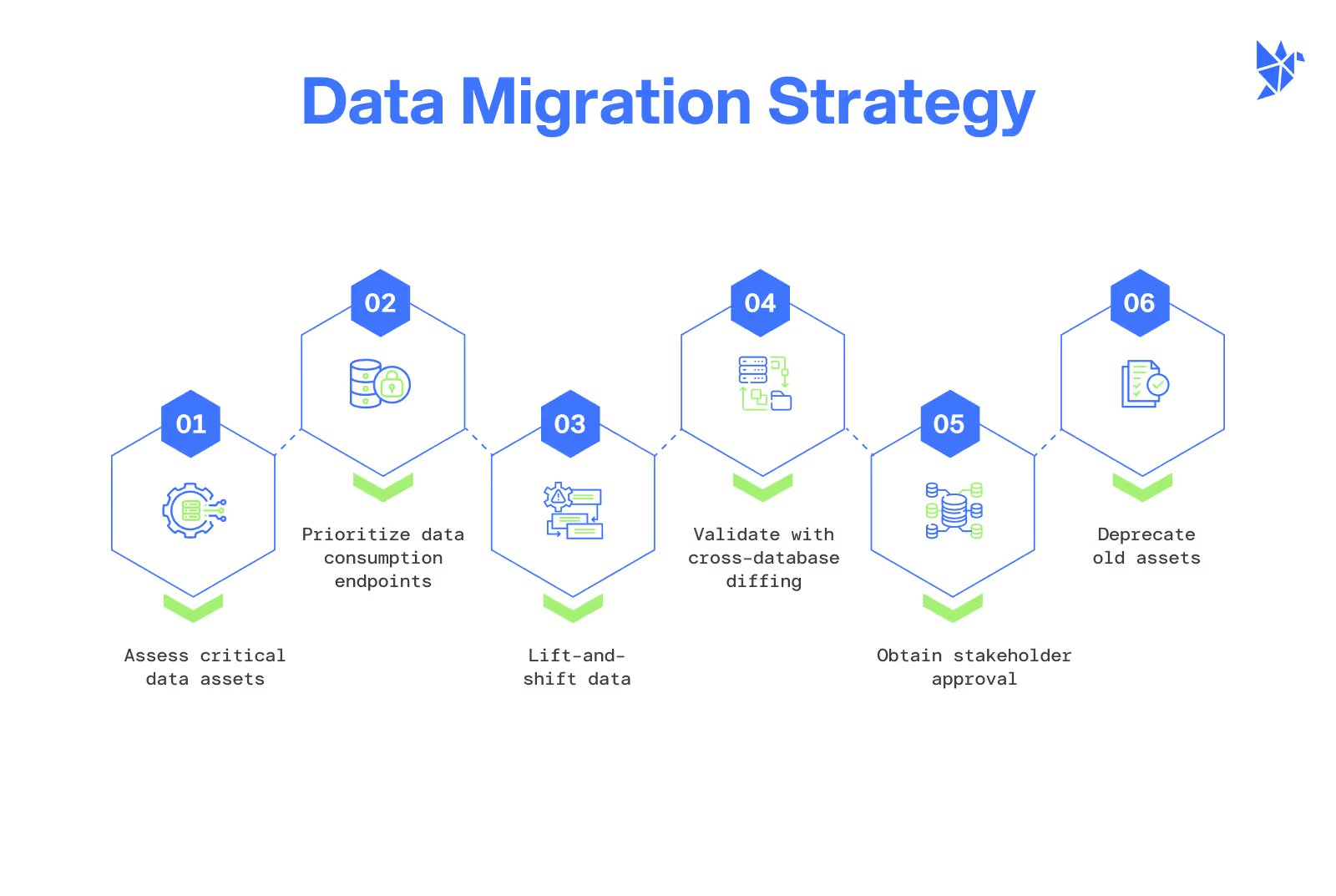

Navigating the complexities of database migration can be daunting, which is why we've compiled a comprehensive guide outlining essential best practices. This free resource is designed to streamline your migration process, ensuring it aligns with both your technical requirements and business objectives. Below, we highlight key insights from our guide, offering a snapshot of strategies to enhance the efficiency and effectiveness of your data migration from Teradata to Snowflake:

1. Plan and prioritize asset migration

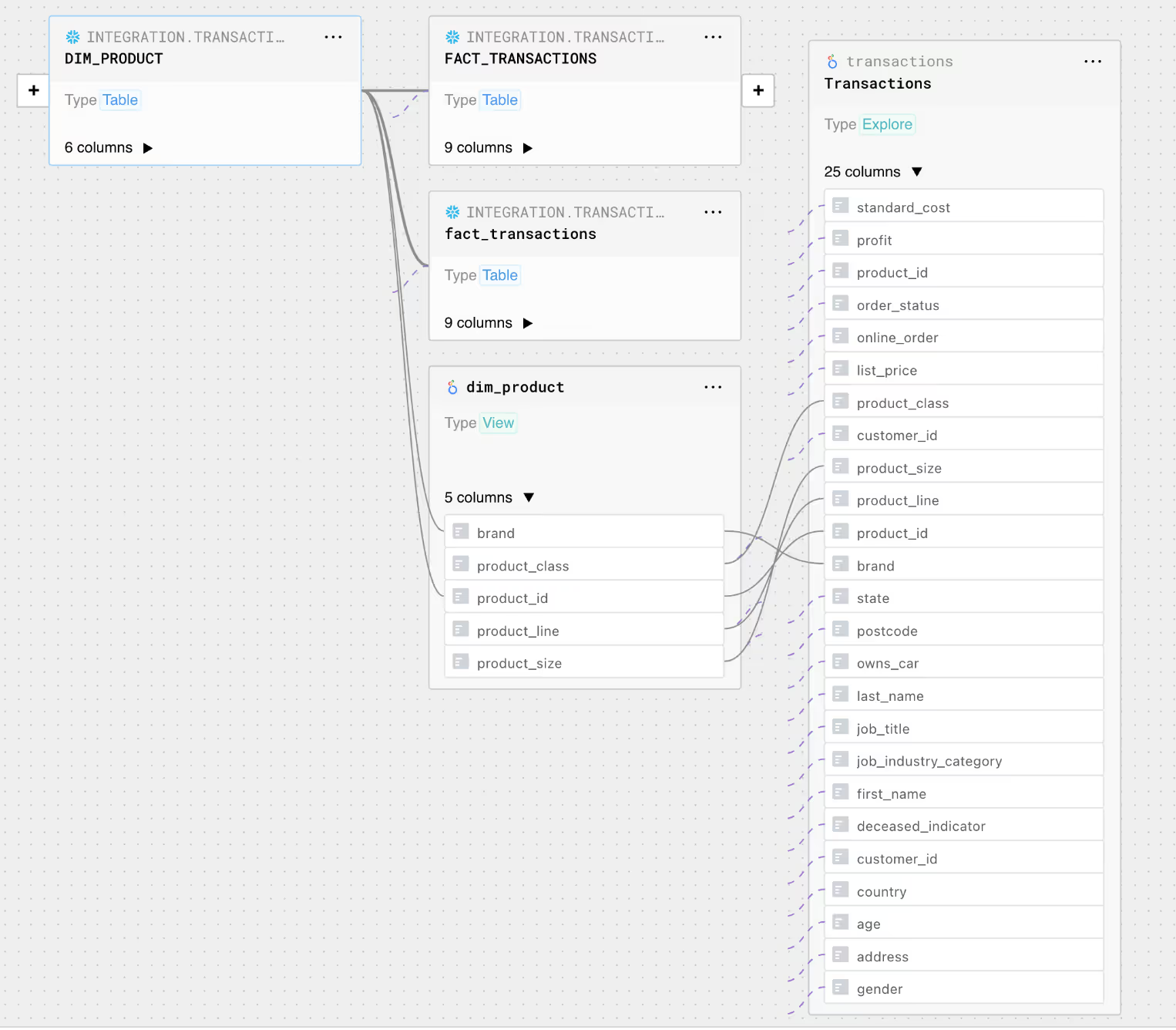

Effective migration planning involves using column-level lineage to identify and rank vital data assets, followed by migrating data consumption endpoints before data production pipelines to ease the transition and reduce load on the old system.

2. Lift and shift the data in its current state

Leverage Datafold’s Migration Agent (DMA) for the initial lift-and-shift, automatically translating Teradata SQL to Snowflake’s syntax, which minimizes manual code remodeling and speeds up migration.

3. Document your strategy and action plan

Establish a roadmap for Teradata-to-Snowflake migration that outlines goals, timelines, and resource allocation. Integrate Datafold’s DMA to streamline SQL translation, validation, and documentation for a unified strategy.

4. Automate migrations with Datafold’s DMA

Datafold’s Migration Agent (DMA) simplifies the complex process of migrating SQL and validating data parity across Teradata and Snowflake. It handles SQL dialect translation and includes cross-database data diffing, which expedites validation by comparing source and target data for accuracy.

Putting it all together: Teradata to Snowflake migration guide

Successfully migrating from Teradata to Snowflake requires a strategic approach, encompassing both technical proficiency and astute project management.

By aligning technical and business strategies and leveraging Datafold’s DMA, your team can navigate this transition more effectively. Here’s how to put it all together:

- Plan the migration from Teradata to Snowflake

Develop a detailed plan specific to Teradata-Snowflake migration, outlining timelines, resource management, and stakeholder communication. Assess your current Teradata setup, data dependencies, and set clear objectives for the migration to Snowflake.

Use Datafold’s Migration Agent (DMA) to prioritize critical data assets, starting with data consumption points like BI tools and dashboards to enable a smooth transition with minimal disruption.

- Prioritize data consumption endpoints first

It’s better to migrate data consumption endpoints before data production pipelines. Replicate the data in Teradata to Snowflake, and let data users and apps query from Snowflake.
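One possible way to land the replicated data, sketched under the assumption that Teradata tables have already been exported as pipe-delimited files to a Snowflake stage. The stage name `td_export_stage`, the file format name, the path, and the `Orders` table are all hypothetical.

```sql
-- Snowflake: describe the exported files (delimiter and header are assumptions).
CREATE OR REPLACE FILE FORMAT td_export_csv
  TYPE            = CSV
  FIELD_DELIMITER = '|'
  SKIP_HEADER     = 1
  NULL_IF         = ('');

-- Load the exported files into the target table; stop on the first bad record.
COPY INTO Orders
FROM @td_export_stage/orders/
FILE_FORMAT = (FORMAT_NAME = 'td_export_csv')
ON_ERROR    = 'ABORT_STATEMENT';
```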

- Use lift-and-shift to move Teradata data to Snowflake

Adopt a lift-and-shift strategy in the initial migration phase to simplify the transition, accommodating the architectural and SQL dialect differences between Teradata and Snowflake.

Lift and shift the data in its current state using the SQL translator embedded within Datafold’s DMA, which automates the SQL conversion process.

- Validate with cross-database diffing

Then, use Datafold’s cross-database diffing to verify data parity, enabling quick and accurate 1-to-1 table validation between the Teradata and Snowflake databases.
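To show what a row-level comparison looks for, here is a hand-written sketch. It is not how Datafold's diffing is implemented. It assumes a snapshot of the Teradata table has been loaded into Snowflake as a hypothetical `orders_teradata_snapshot` table next to the migrated `Orders` table, with `OrderID` as the key.

```sql
-- Snowflake: list rows that are missing on either side or whose values differ.
SELECT
    COALESCE(t.OrderID, s.OrderID) AS OrderID,
    t.OrderAmount                  AS teradata_amount,
    s.OrderAmount                  AS snowflake_amount
FROM orders_teradata_snapshot AS t
FULL OUTER JOIN Orders AS s
    ON t.OrderID = s.OrderID
WHERE t.OrderID IS NULL                               -- only in Snowflake
   OR s.OrderID IS NULL                               -- only in Teradata
   OR t.OrderAmount IS DISTINCT FROM s.OrderAmount;   -- NULL-safe value mismatch
```

An empty result means the two tables agree on every key and on the compared column. A real check would compare every column, not just one.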

- Get stakeholder approval

Once the migration reaches a stable phase, seek approval from stakeholders. Use data diff tools to provide evidence of full parity between Teradata and Snowflake systems, reassuring stakeholders of the migration's success.

- Deprecate old assets

As a final step, send out deprecation notices for the old Teradata assets. Share the parity reports from the data diff with stakeholders to facilitate their transition to the new Snowflake system.

Conclusion

If you’re ready to learn more about how Datafold helps data teams work faster during a migration such as Teradata to Snowflake, reach out to a data migrations expert and tell us about your migration, tech stack, scale, and concerns. We’re here to help you understand whether data diffing is a solution to your migration concerns.

As mentioned at the start of this blog: migrations are complex, potentially years-long projects. Datafold is here to change that by automating the migration process as much as possible, so your team can focus on what matters: delivering high-quality data to your organization.